The DTC hosted its annual Science Advisory Board (SAB) meeting on September 14-15, 2016. This annual event provides an opportunity for the DTC to present a review of its key accomplishments over the past three years to representatives of the research community and to collect input for shaping a strategy for the future.

“The DTC enables and supports a wide variety of research-to-operations and operations-to-research activities,” said SAB Chair Russ Schumacher. “The SAB discussed ways that these activities can be further strengthened, including through model evaluation and maintaining operational model codes. A topic of particular discussion was NOAA and NCEP’s goal to move toward a unified modeling approach. This brings with it some great opportunities to connect the research and operational communities to enhance weather prediction, but will also pose challenges in familiarizing researchers with new modeling systems.”

The agenda included an Operational Partners Session, which gave the new Environmental Modeling Center (EMC) Director, Dr. Mike Farrar, an opportunity to present a vision for NOAA’s unified modeling, followed by the Air Force outlook for its modeling suite from Dr. John Zapotocny. DTC task area presentations reviewed key accomplishments with a focus on research to operations, and offered thoughts on possible research-to-operations activities, risks, and challenges for the coming three years. Productive break-out discussions by task area were followed by the SAB recommendations session. The recommendations are detailed in the DTC SAB meeting summary; a few highlights follow.

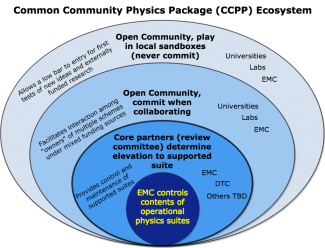

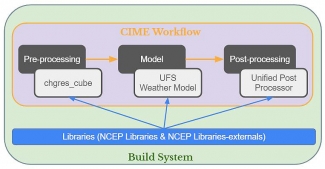

With NCEP’s transition to a unified modeling system centered on the Finite-Volume Cubed-Sphere (FV3) dynamical core, the SAB voiced its belief that the community will need the DTC’s leadership in supporting FV3 and the NOAA Environmental Modeling System (NEMS) in future years, and that the DTC should build up internal expertise in advance of these transitions. The SAB noted that no other organization in the U.S. has a core responsibility to be an unbiased evaluator of Numerical Weather Prediction (NWP) models, and advised the DTC not to lose sight of its unique function. As the Next Generation Global Prediction System (NGGPS) paradigm emerges, the SAB encourages the DTC leadership to pay close attention to where future DTC funding might be anticipated, and to make sure future SAB members have expertise in those funding areas.

The SAB recommended that the DTC build an interactive research community to hear from active users with fresh ideas and to provide a conduit between current research and possible future operations. Such a community would be a good forum for interacting with active scientists in other areas of modeling and a promising mechanism for getting users involved in the DTC Visitor Program. The SAB also encouraged further engagement with the global ensemble, convection-allowing ensemble, data assimilation, and verification communities.

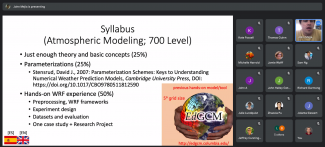

To help maintain DTC-supported code and address the DTC staffing problem, the SAB suggested a graduate student model. This approach could build and enhance the capabilities of upcoming researchers in areas relevant to the needs of operational models. It would also benefit the university community by supporting students with a gap in funding, a win-win situation for both the DTC and the university.

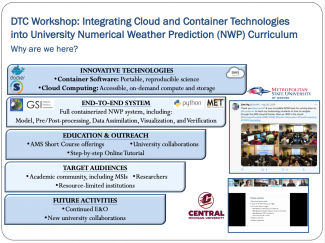

The SAB recommended that the DTC continue its work to package supported codes for community use with Docker, an open-source project that automates the deployment of applications inside software containers. Containers significantly reduce the challenges associated with setting up and running code on different platforms and with building the libraries the codes use. The SAB also suggested clarifying the roles of model and code developers, the DTC, and the user community in accessing, supporting, and adding innovations, to reduce possible confusion and redundancy.

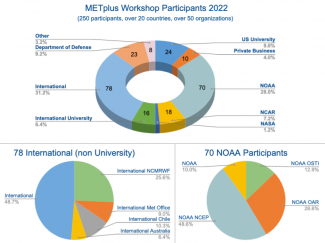

Because all DTC task areas require robust verification tools to achieve their objectives, the SAB recommended strengthening and supporting the collaborations that already exist between the verification task and the other task areas. This may involve increasing the flexibility of the Model Evaluation Tools (MET) to support the needs of both DTC tasks and communities. The SAB also indicated that there need to be clear pathways for those who develop new verification tools or methods to have them incorporated into MET. The SAB further encouraged the DTC to maintain more year-to-year continuity in testing and evaluation activities to increase productivity and yield more fruitful outcomes, and to continue to thoughtfully balance these activities on a task-by-task basis.

The Science Advisory Board, drawn from the external community, acts as a sounding board to assist the DTC Director and provides advice on emerging NWP technologies, strategic computer resource issues, selection of code for testing and evaluation, and selection of candidates for the visiting scientist program. Members are nominated by the Management Board, and the Executive Committee provides final approval of SAB nominations for a 3-year term. Current members of the DTC Science Advisory Board are listed at http://dtcenter.org under governance.