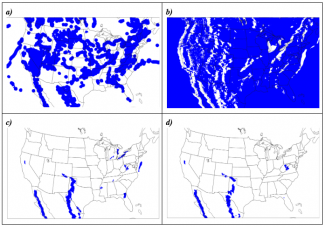

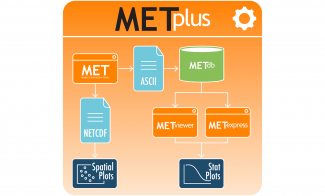

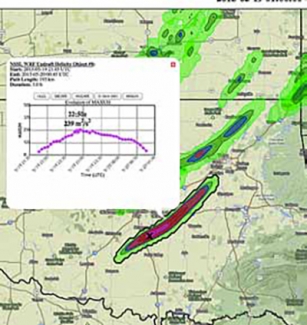

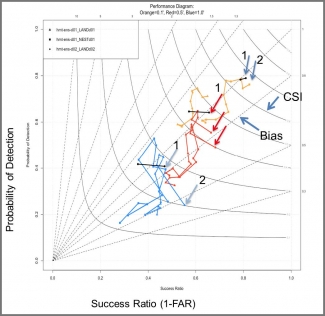

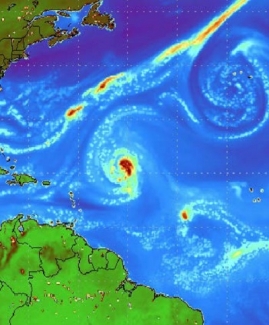

The Method for Object-based Diagnostic Evaluation (MODE) was originally developed to work with a single forecast input and a single observation input. It has since been extended to accommodate multiple inputs; this extension is known as Multivariate MODE (MvMODE). MvMODE was first conceptualized to analyze complex objects such as dry lines, and it has since been adapted to identify and assess blizzard-like features, with the aim of enhancing feature-driven evaluations of high-impact hydrometeorological events. Recent work has also made MvMODE more flexible and streamlined its functionality to make it more accessible to users. The METplus v5.1.0 documentation includes a use case that identifies blizzard-like objects from precipitation type, visibility, and 10 m winds, along with details on the configuration parameters added during these enhancements. Output from MvMODE resembles that of single-variable MODE: ASCII files of contingency table counts and object attributes, a NetCDF file of the objects, and a summary PostScript file. These improvements make MODE a more powerful tool for evaluating complex weather phenomena, giving researchers and forecasters richer insight into the spatial accuracy of feature-based events.
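To make the multivariate idea concrete, the sketch below combines three gridded fields into a single "blizzard-like" mask and then groups the flagged grid points into objects. It is a conceptual illustration only, not MvMODE's implementation: the function names, the precipitation-type code, and the visibility and wind thresholds are all hypothetical, and the convolution and thresholding steps that MODE applies before identifying objects are omitted.

```python
from collections import deque

# Hypothetical values chosen only for illustration; they are not taken
# from the METplus use case.
SNOW = 1             # assumed precipitation-type code for snow
MAX_VIS_M = 400.0    # visibility below this (metres) counts as low
MIN_WIND_MS = 15.0   # 10 m wind speed above this (m/s) counts as strong


def blizzard_mask(ptype, vis, wind):
    """Return a boolean grid that is True where all three conditions hold."""
    return [
        [p == SNOW and v < MAX_VIS_M and w > MIN_WIND_MS
         for p, v, w in zip(prow, vrow, wrow)]
        for prow, vrow, wrow in zip(ptype, vis, wind)
    ]


def label_objects(mask):
    """Label 4-connected True regions; return (label grid, object count)."""
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or labels[r][c]:
                continue
            # Start a new object and flood-fill its neighbours.
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not labels[ny][nx]):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


# Tiny made-up 3 x 4 grids.
ptype = [[1, 1, 0, 0], [1, 1, 0, 1], [0, 0, 0, 1]]
vis = [[200, 300, 200, 200], [100, 900, 200, 300], [200, 200, 200, 350]]
wind = [[20, 18, 20, 20], [16, 20, 20, 17], [20, 20, 20, 19]]

labels, count = label_objects(blizzard_mask(ptype, vis, wind))
print(count)
for row in labels:
    print(row)
```

A grid point is flagged only when every input satisfies its condition, so a point with snow and strong wind but good visibility is excluded. This is the sense in which a multivariate object is more selective than an object built from any one field alone.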

More information on MvMODE can be found in the METplus User’s Guide. Additional configuration options are described in the configuration file section of the User’s Guide.