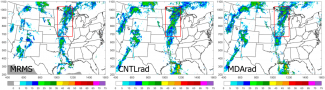

As visitors to the DTC in 2015, Jason Otkin, Chris Rozoff, and Sarah Griffin explored using object-based verification methods to assess the accuracy of cloud forecasts from the experimental High Resolution Rapid Refresh (HRRR) model. Though the forecast accuracy could be assessed using traditional statistics such as root mean square error or bias, additional information about errors in the spatial distribution of the cloud field could be obtained by using more sophisticated object-based verification methods.

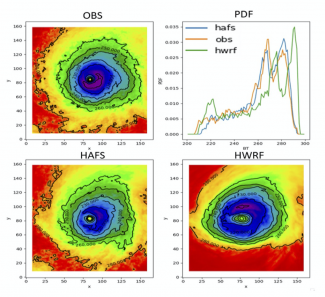

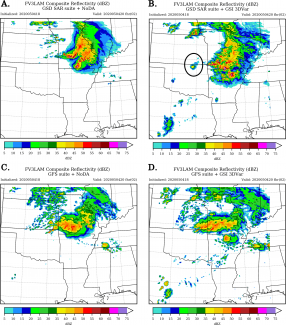

The primary objective of their visit to the DTC was to learn to use the Method for Object-Based Diagnostic Evaluation (MODE), part of the Model Evaluation Tools (MET) package. Once they learned how MODE defines single objects and clusters of objects, they could use MODE output for individual objects and matched pairs to assess the forecast accuracy.

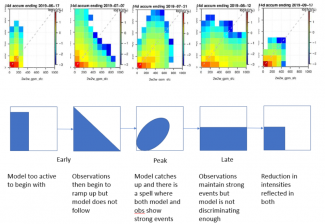

The team also wanted to develop innovative methods using MODE output to provide new insights. For example, they were able to calculate and compare how well certain characteristics of a forecast cloud object, such as its size and location, match those of the observed cloud object.
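As a rough illustration of this kind of comparison, the sketch below computes two simple measures for one matched forecast/observed pair: a size ratio and the distance between object centroids. The function names, the grid-point units, and the choice of measures are assumptions made for illustration; they are not the team's code or MODE's own attribute definitions.

```python
import math


def area_ratio(forecast_area, observed_area):
    """Ratio of the smaller object area to the larger one.

    Returns a value in (0, 1]; 1.0 means the two objects have the same size.
    Areas are assumed to be counts of grid points (hypothetical units).
    """
    if forecast_area <= 0 or observed_area <= 0:
        raise ValueError("object areas must be positive")
    return min(forecast_area, observed_area) / max(forecast_area, observed_area)


def centroid_distance(forecast_centroid, observed_centroid):
    """Straight-line distance between two (x, y) centroids in grid units."""
    dx = forecast_centroid[0] - observed_centroid[0]
    dy = forecast_centroid[1] - observed_centroid[1]
    return math.hypot(dx, dy)


# Hypothetical matched pair: forecast object half the size of the observed
# object and displaced by 3 grid points in x and 4 in y.
size_match = area_ratio(50, 100)
displacement = centroid_distance((10.0, 20.0), (13.0, 24.0))
```

A ratio near 1 together with a small displacement would indicate that the forecast object resembles the observed one in both size and location.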

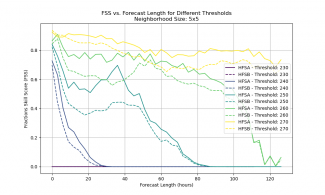

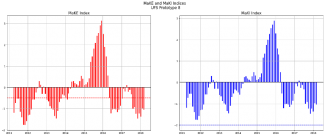

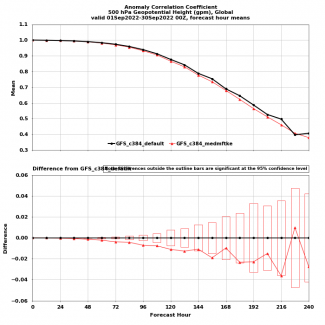

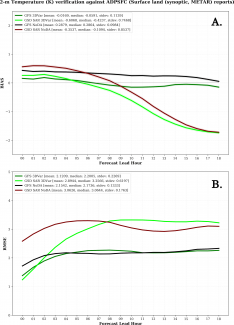

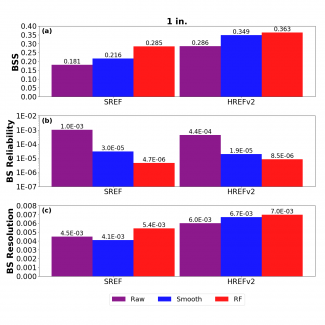

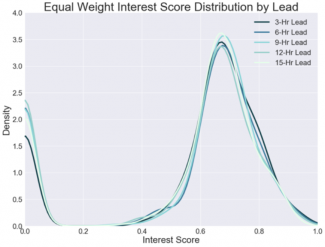

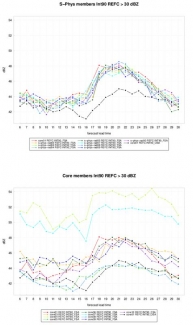

One outcome of their DTC visit was the development of the MODE Skill Score (MSS). The MSS uses the interest values generated by MODE, which characterize how closely the forecast and observed objects match each other, along with the size of the observed object, to portray the MODE output as a single number.
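The article does not give the MSS formula, so the following is only a sketch of one plausible way to combine interest values with observed object sizes: an area-weighted mean, in which larger observed objects count for more and unmatched observed objects contribute an interest of zero. The function name, the weighting scheme, and the sample numbers are all assumptions for illustration and should not be read as the published definition of the MSS.

```python
def area_weighted_interest(objects):
    """Collapse per-object interest values into one number.

    `objects` is a list of (observed_area, interest) tuples, where
    `interest` is in [0, 1] and is 0.0 for an observed object that has
    no matching forecast object. This weighting is an assumption, not
    the MSS formula from the source.
    """
    total_area = sum(area for area, _ in objects)
    if total_area <= 0:
        raise ValueError("total observed area must be positive")
    weighted_sum = sum(area * interest for area, interest in objects)
    return weighted_sum / total_area


# Hypothetical observed objects: two matched, one unmatched.
sample = [(100, 0.9), (50, 0.6), (50, 0.0)]
score = area_weighted_interest(sample)
```

Weighting by observed size means a well-matched large cloud shield raises the score more than a well-matched small cloud, which fits the article's description of a single number built from interest values and observed object size.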

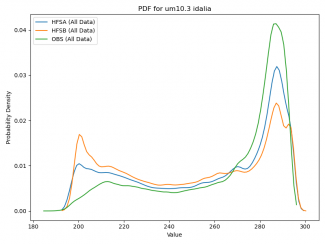

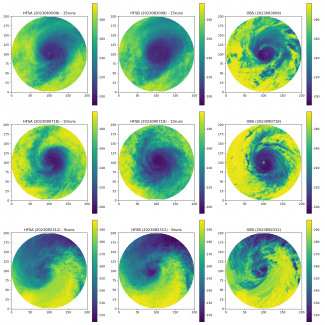

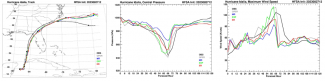

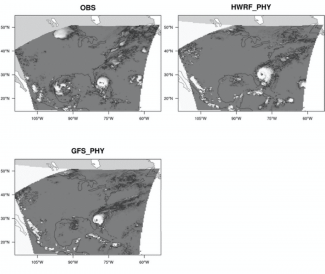

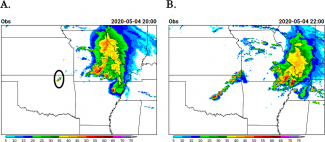

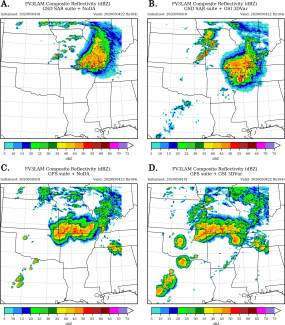

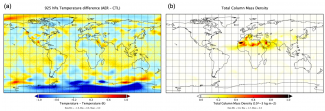

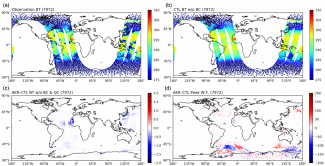

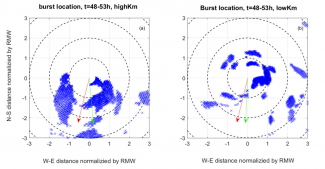

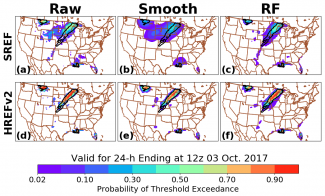

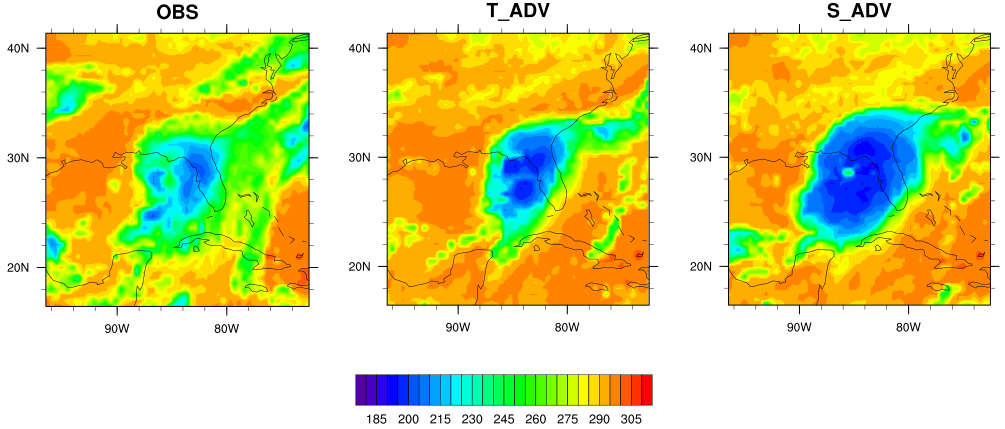

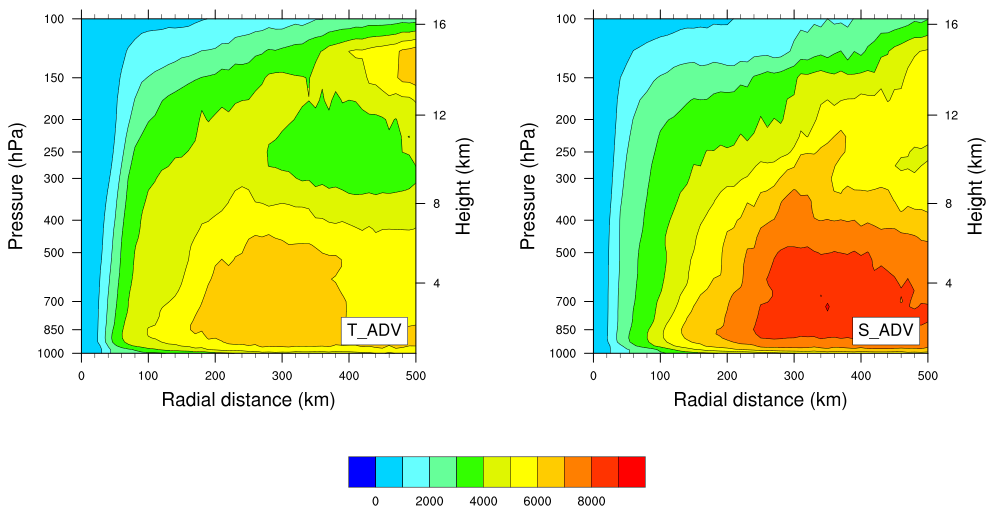

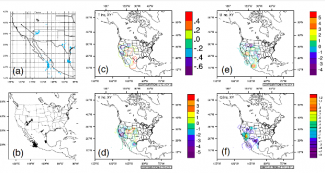

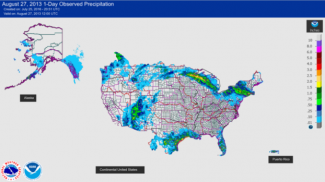

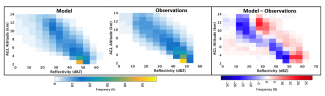

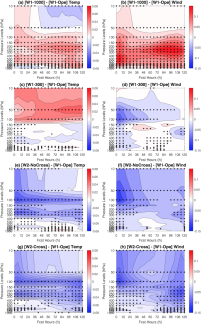

For their project, they assessed the 1-h experimental HRRR forecast accuracy of cloud objects occurring in the upper troposphere, where satellite infrared brightness temperatures are most sensitive. They used simulated Geostationary Operational Environmental Satellite (GOES) 10.7 μm brightness temperatures generated for each HRRR forecast cycle, and compared them to the corresponding GOES observations. Forecast statistics were compiled during August 2015 and January 2016 to account for potential differences in cloud characteristics between the warm and cool seasons.

Overall, the higher interest values during August indicate that the sizes of the forecast objects more closely match those of the observed objects, and that the spatial displacement between their centers of mass is smaller. They also found that smaller cloud objects are less predictable than larger objects, and that the size of the 1-h HRRR forecast cloud objects is generally predicted more accurately than their location.

The researchers hope this knowledge helps HRRR model developers identify why a particular forecast hour or time period is more accurate than another. It could also help diagnose problems with the forecast cloud field and so make forecasts more accurate.

Otkin, Rozoff, and Griffin were visiting from the University of Wisconsin-Madison Space Science and Engineering Center and Cooperative Institute for Meteorological Satellite Studies. They were hosted by Jamie Wolff of NCAR. The DTC visitor project allowed the team to discuss methods, insights, and results face-to-face. The team feels this project scratched the surface of how to use satellite observations and object-based verification methods to assess forecast accuracy, and that the door is open for future collaboration.

Contributed by Jason Otkin, Sarah Griffin, and Chris Rozoff.