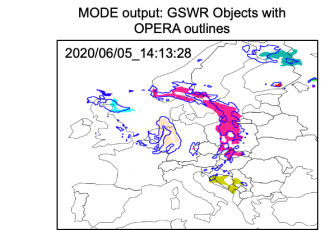

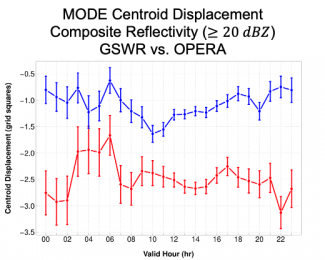

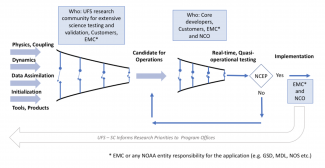

In the sixteen years that the Method for Object-Based Diagnostic Evaluation (MODE) tool has been available within the Model Evaluation Tools (MET), operational forecasting centers have explored its use for informing the model development process and evaluating operational products. Through this exploration, MODE has informed operational implementation decisions and provided meaningful data about the performance of operational products.

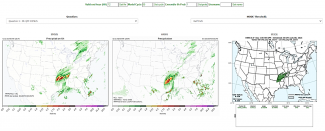

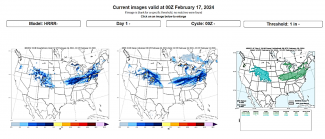

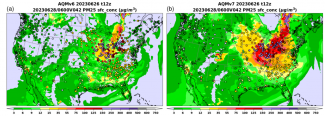

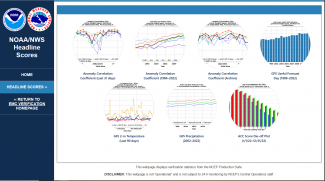

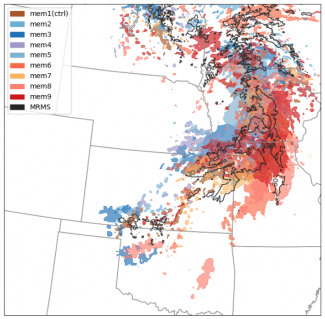

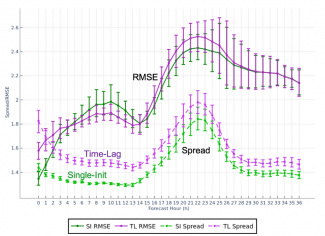

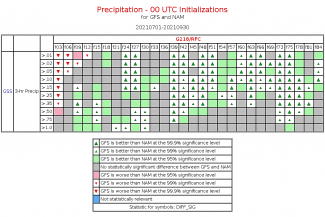

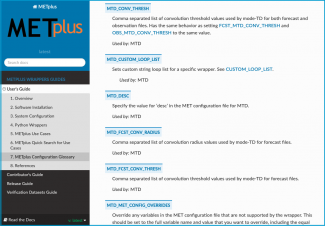

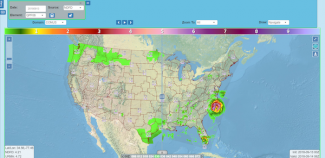

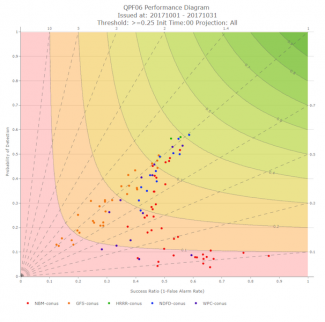

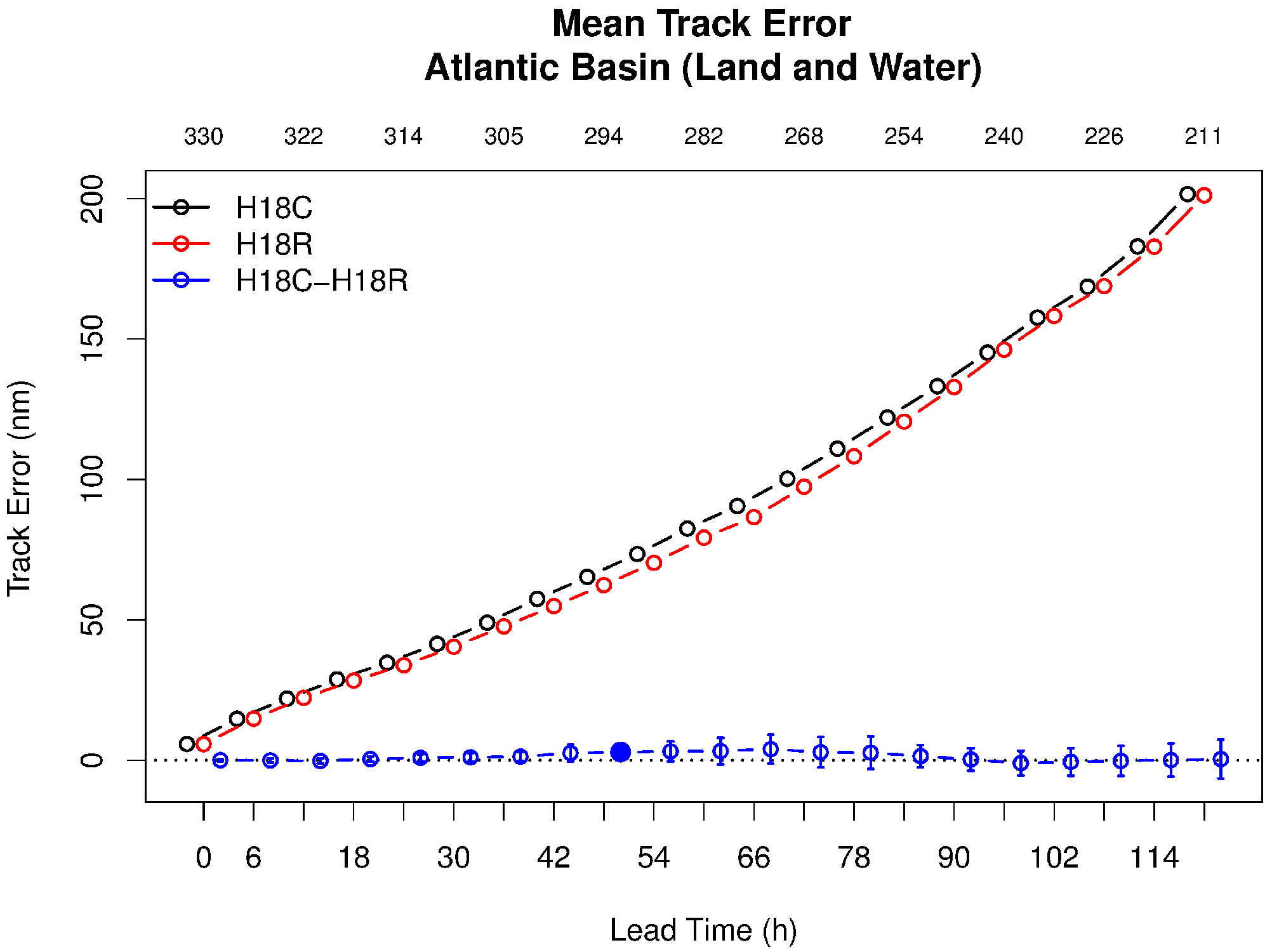

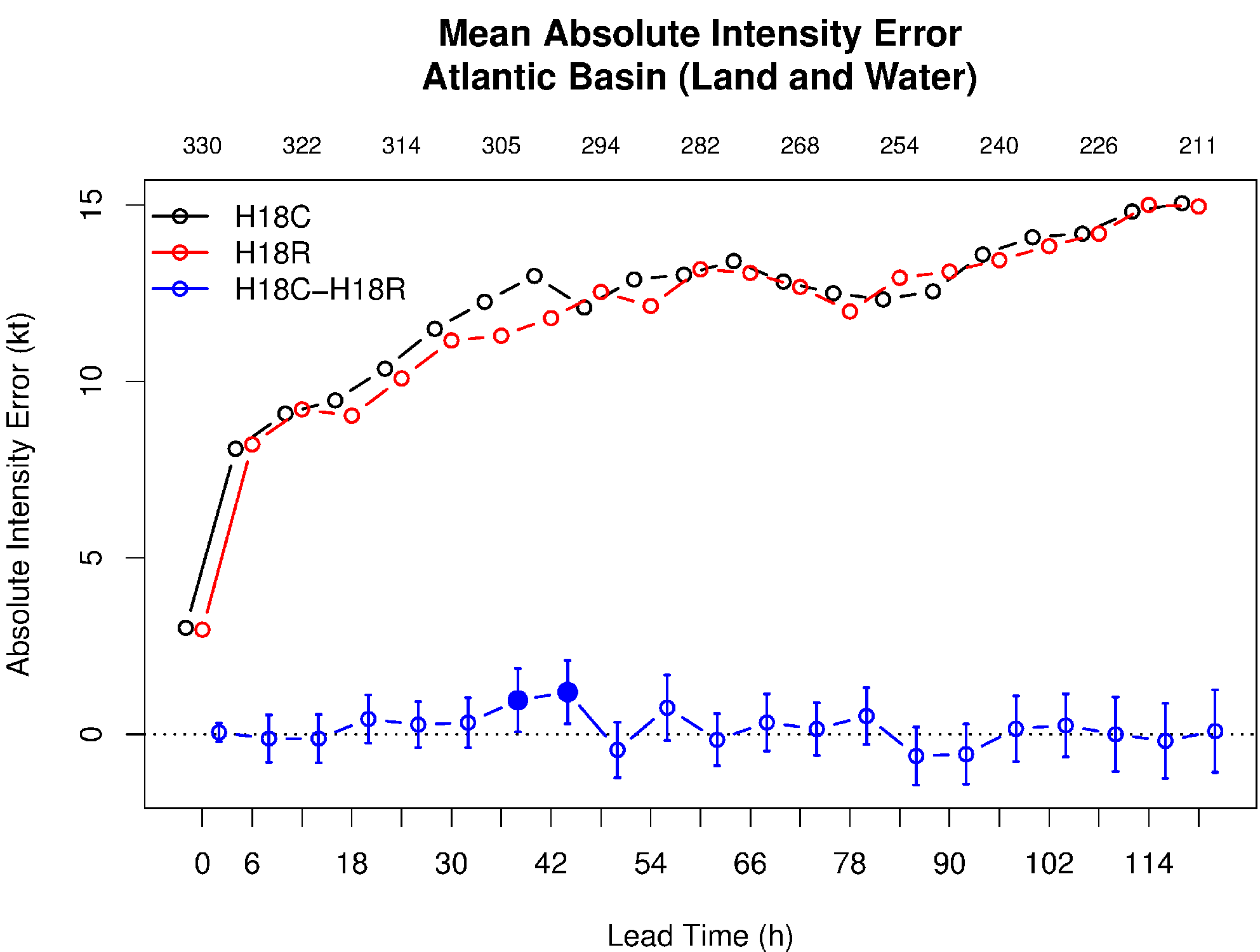

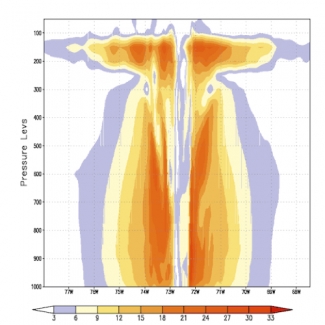

MODE has been applied to many different phenomena; here we describe applications at NCEP’s Weather Prediction Center (WPC). Building on the relationship between DTC and WPC established through collaborations related to NOAA’s Hydrometeorology Testbed (HMT), WPC and DTC staff worked closely together to incorporate the advanced verification techniques available in the enhanced Model Evaluation Tools (METplus) into WPC’s verification workflow. Through this deep collaboration, WPC began publicly displaying real-time verification using MODE in the early 2010s. The initial application to quantitative precipitation forecasts (QPF) has matured over the years to include snowfall and more advanced diagnostics. WPC provides publicly available real-time displays of its object-based QPF and snowfall verification, and MODE continues to be a key tool for WPC’s HMT Flash Flood and Intense Rainfall Experiment (FFaIR) and Winter Weather Experiment (WWE).

After the capability to track objects through time was added, WPC used the MODE Time Domain tool to construct a number of products that are used in real-time model analysis to communicate the uncertainty in the predictions. Focusing on heavy precipitation events, these tools analyze objects on both hourly and sub-hourly timescales to evaluate the positioning, size, and magnitude of snowband objects in real time.

WPC also uses MODE internally for more specialized verification. These applications include determining the displacement distance of QPF from WPC, the National Blend of Models (NBM), and the Hurricane Analysis and Forecast System (HAFS) for landfalling tropical cyclones (Albright and Nelson 2024), and verifying the Mesoscale Precipitation Discussion and Excessive Rainfall Outlook, among other applications currently under development. WPC is also introducing the next generation of scientists to useful applications of MODE by hosting Lapenta interns (Christina Comer, Austin Jerke, and Victoria Scheidt between 2020 and 2022).

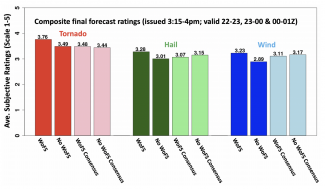

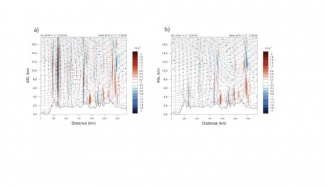

From the FFaIR verification page: