METplus Practical Session Guide (Version 5.0)

Welcome to the METplus Practical Session Guide

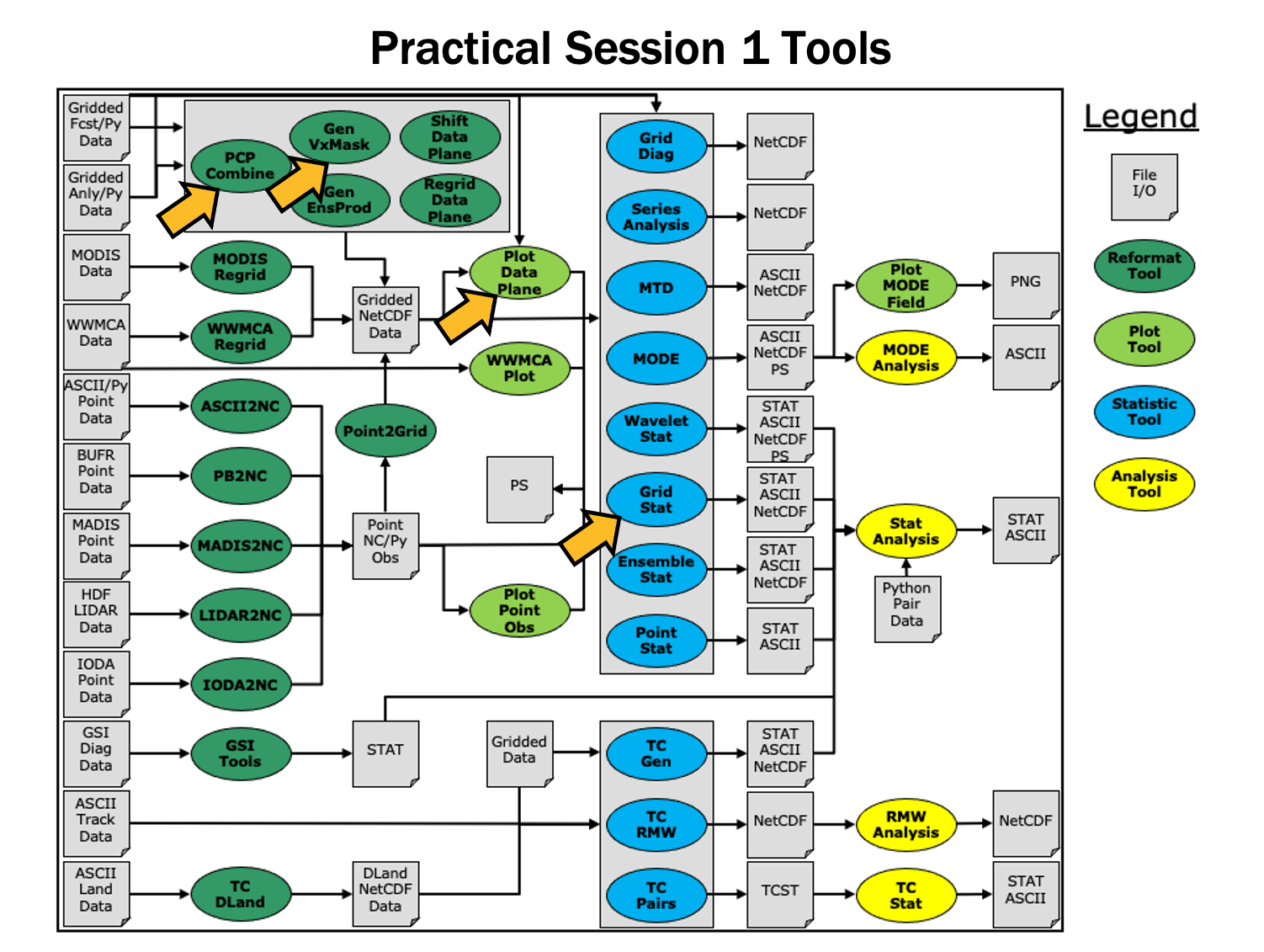

The METplus v5.0.0 practical consists of 11 sessions. The first six sessions contain instructions for running individual MET tools directly on the command line, followed by instructions for running the same tools as part of a METplus use case. The remaining sessions dive into special applications of METplus and the Analysis tools available in the METplus suite.

Please follow the link to the appropriate session:

- Session 1

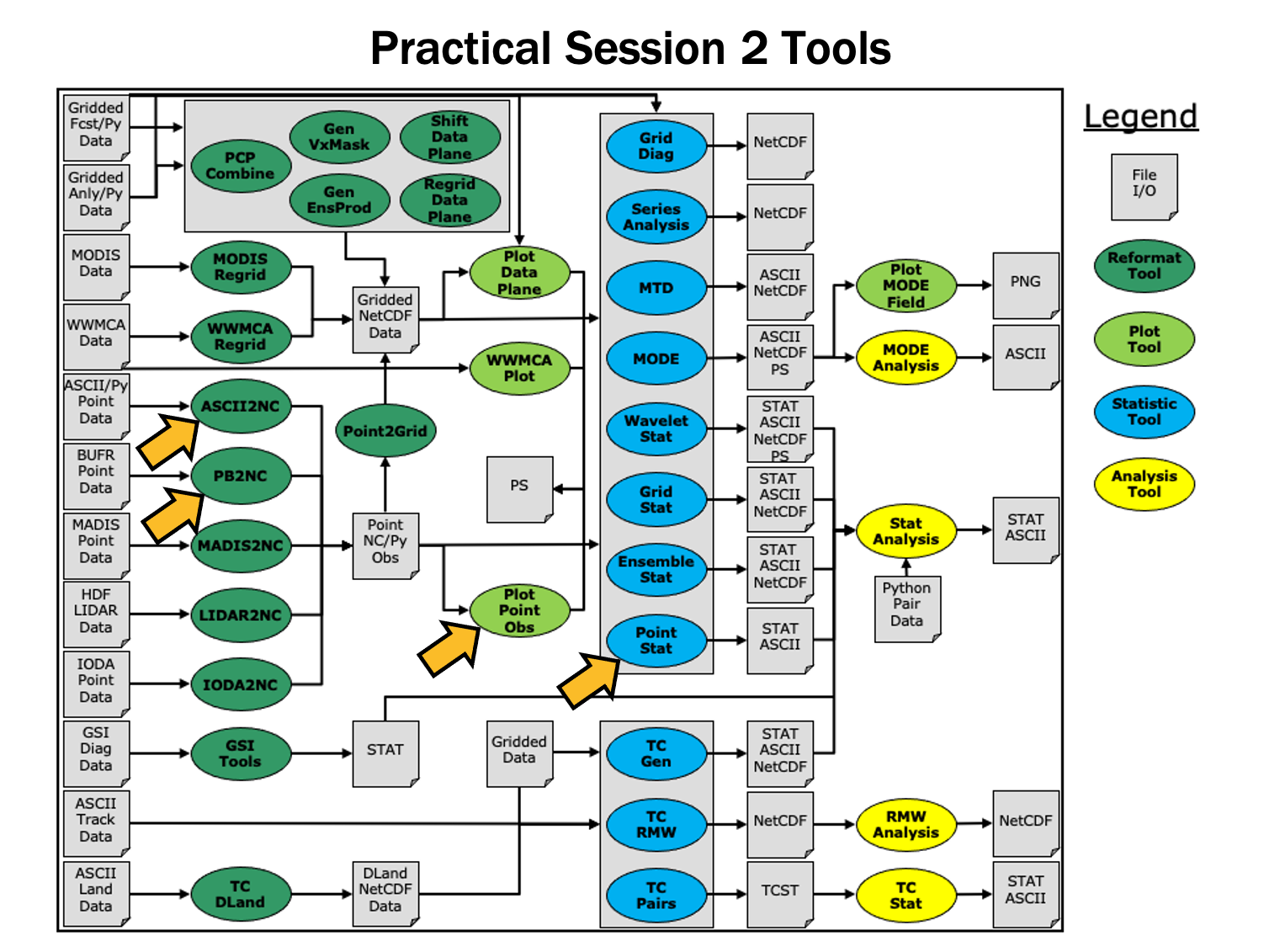

METplus Setup/Grid-to-Grid - Session 2

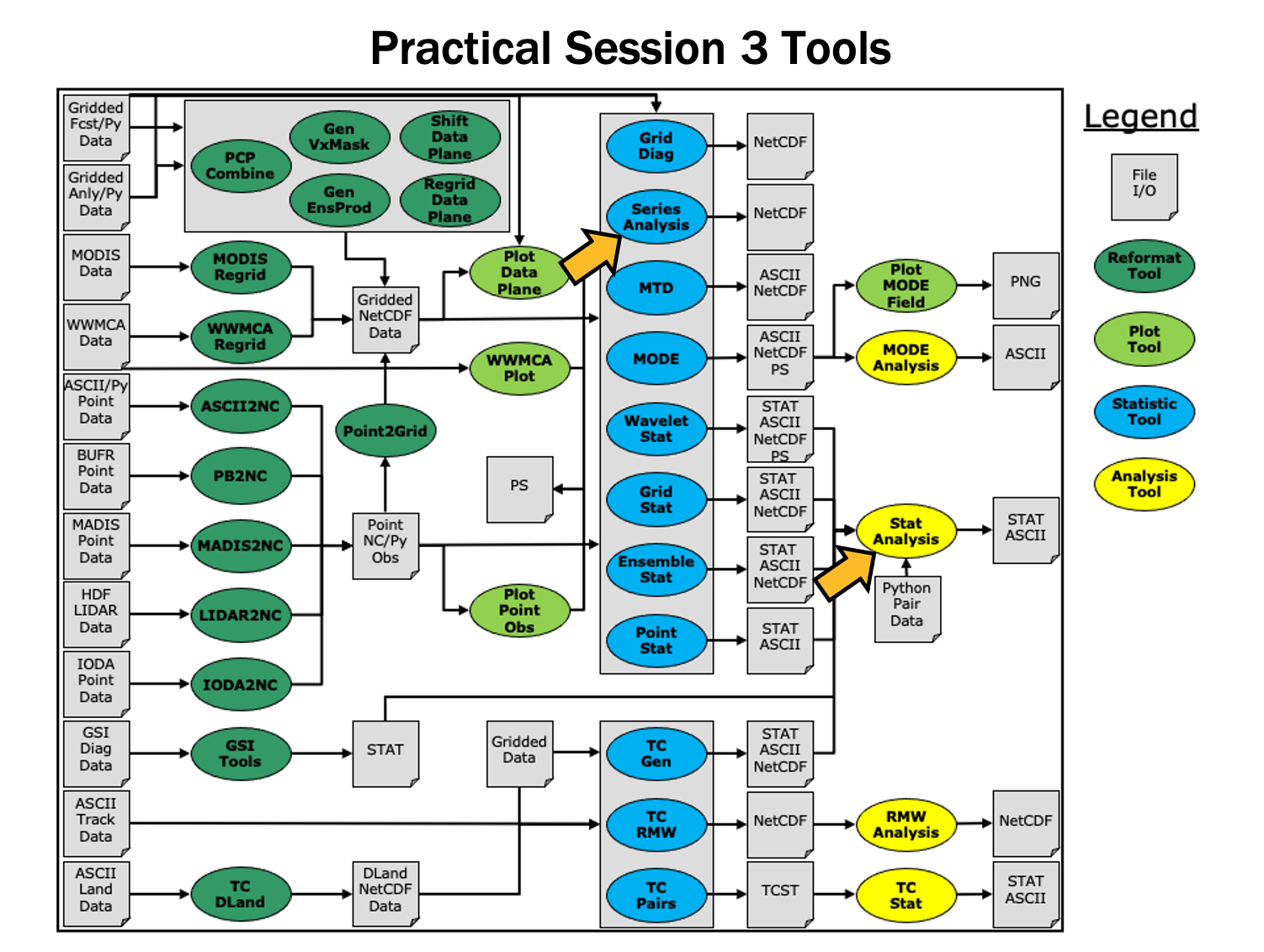

Grid-to-Obs - Session 3

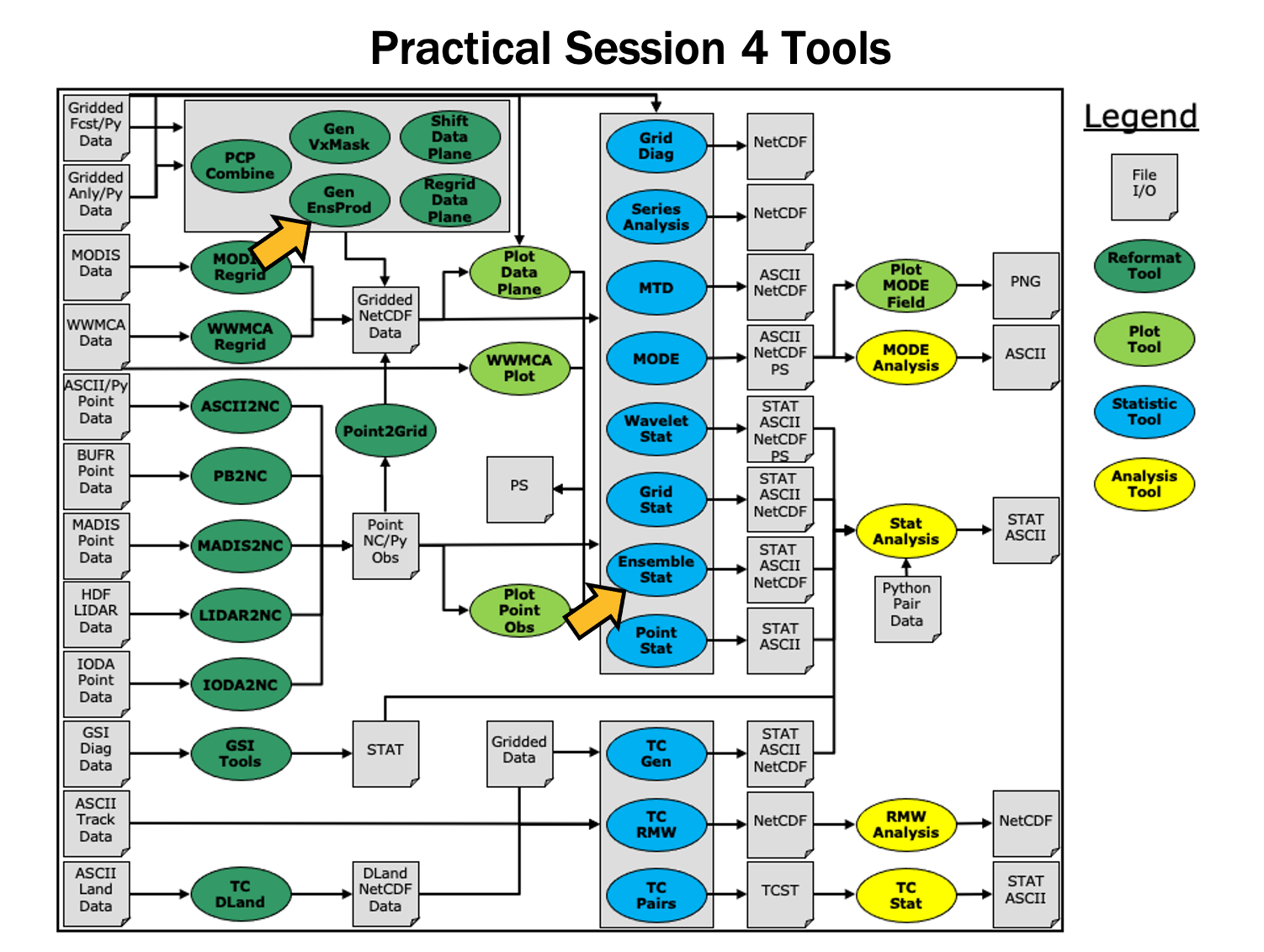

Analysis Tools - Session 4

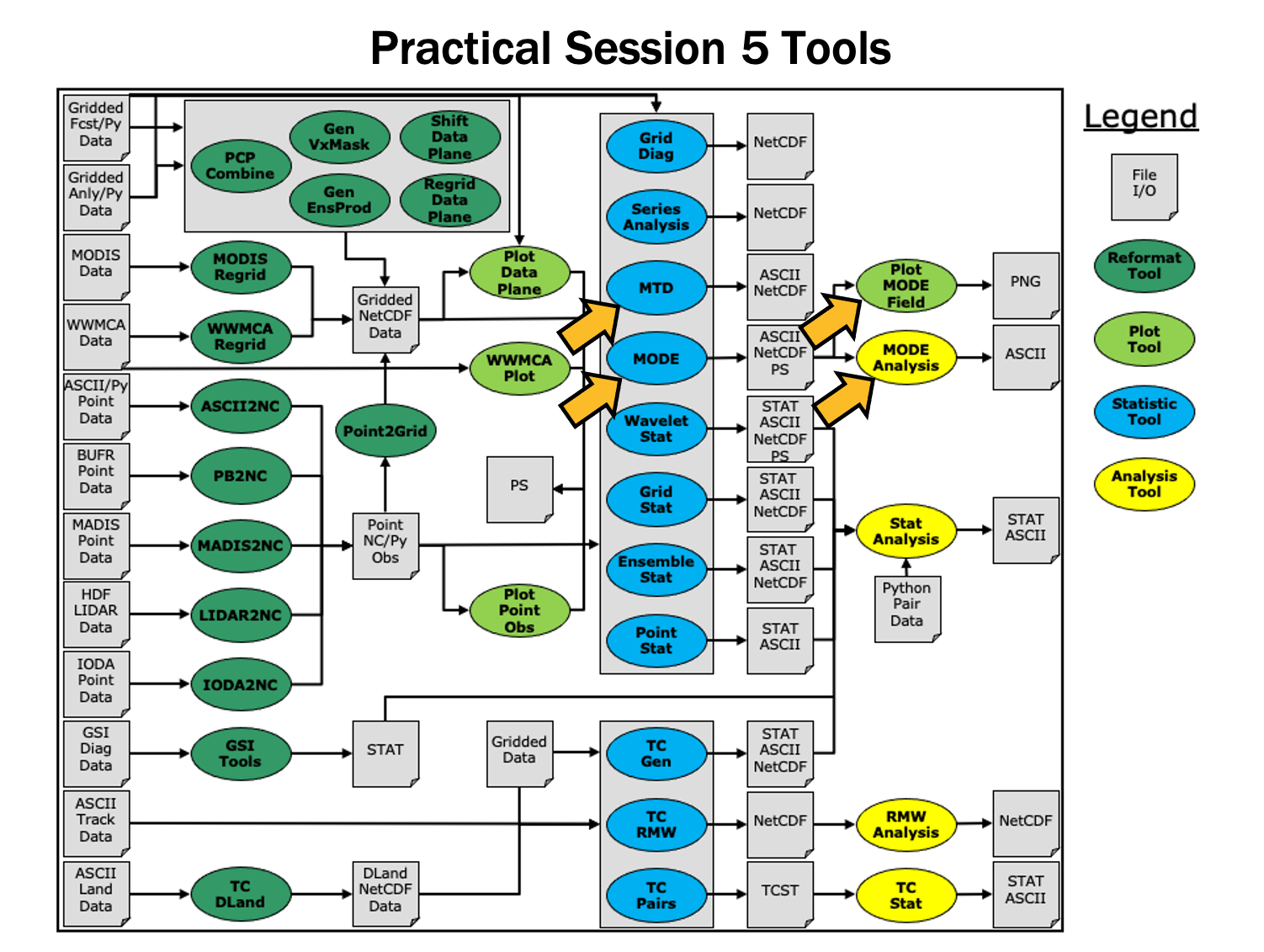

Ensemble and PQPF - Session 5

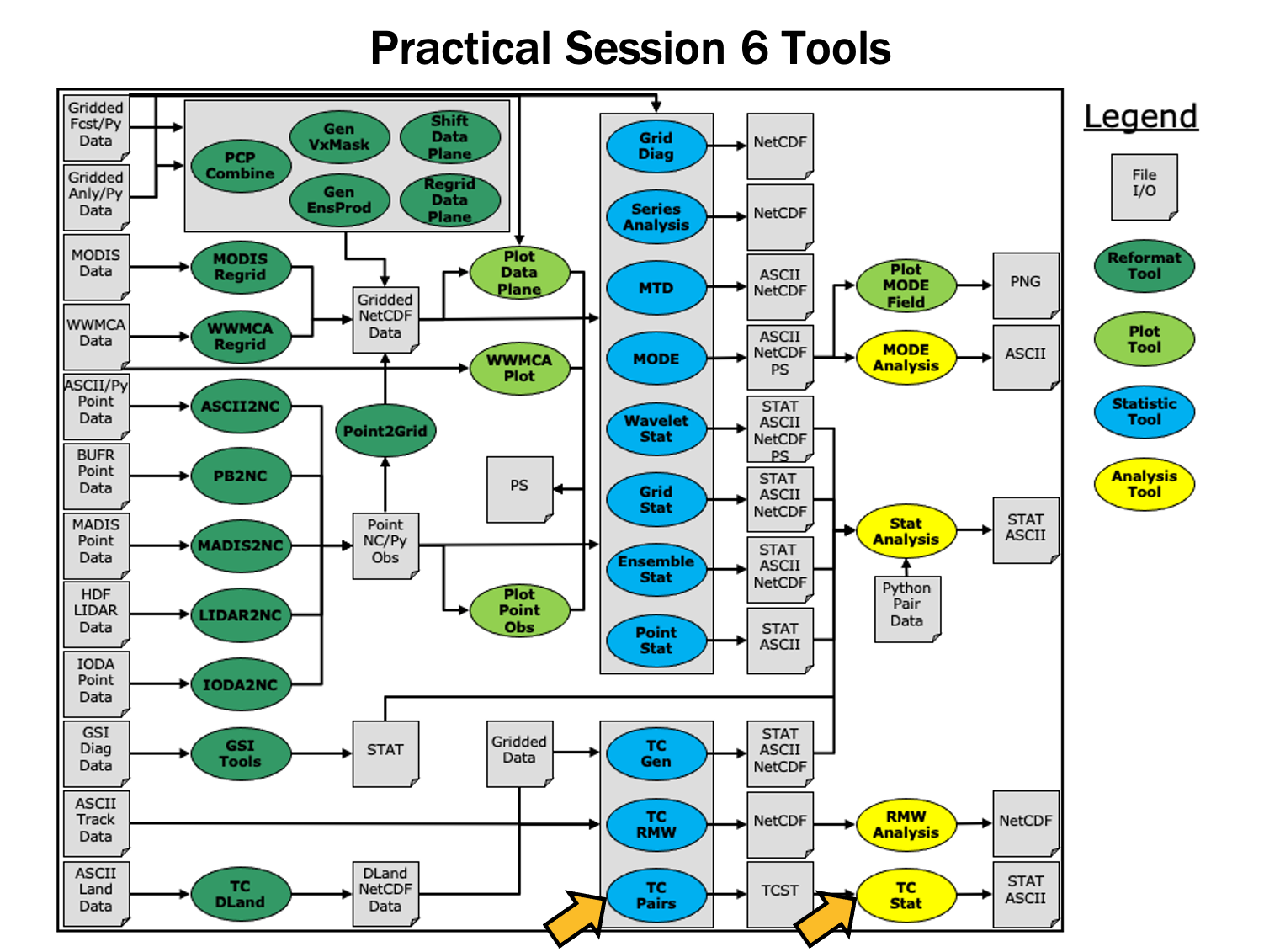

MODE and MTD - Session 6

Track and Intensity - Session 7

Feature Relative - Session 8

METplus Analysis Tools - Session 9

Python Embedding - Session 10

Subseasonal To Seasonal (S2S) - Session 11

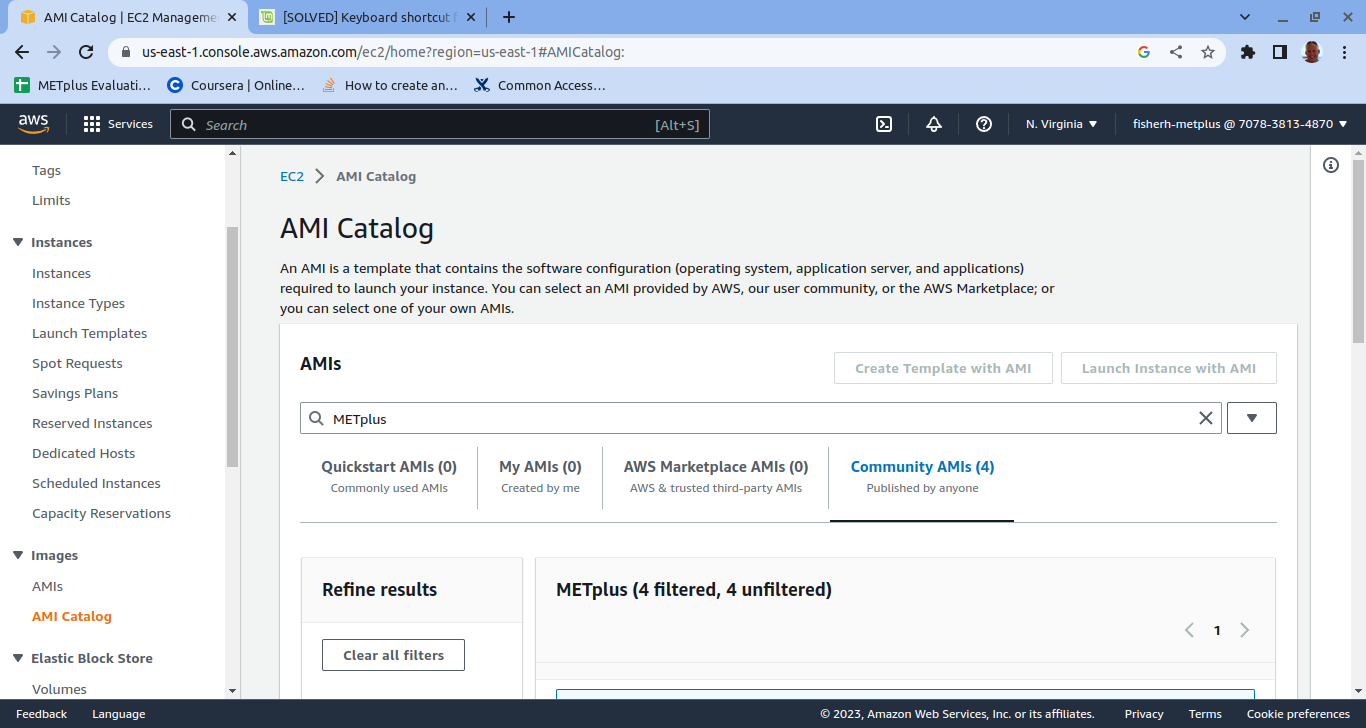

METplus Cloud

Basic Verification Statistics Review

Introduction

This session is meant as a brief introduction to (or review of) basic statistical verification methods applied to various classifications of meteorological variables, and as a guide for new users toward their usage within the METplus system. It is by no means a comprehensive review of all of the statistical verification methods available in the atmospheric sciences, nor of every verification method available within the METplus system. More complete details on many of the techniques discussed here can be found in Wilks’ “Statistical Methods in the Atmospheric Sciences” (2019), Jolliffe and Stephenson’s “Forecast Verification: A Practitioner's Guide in Atmospheric Science” (2012), and the forecast verification web page hosted by Australia’s Bureau of Meteorology. Upon completion of this session you will have a better understanding of five of the common classification groupings for meteorological verification and have access to generalized METplus examples of creating statistics from those categories.

Attributes of Forecast Quality


Forecast quality attributes are the basic characteristics of forecast quality that are of importance to a user and can be assessed through verification. Different forecast evaluation approaches will measure different attributes of the quality of the forecasts. Some verification statistics can be decomposed into several attributes, providing more nuance to the quality information. It can be commonplace in operational and research settings to find and settle on one or two of these complex statistics that can provide meaningful guidance for adjustments to the model being evaluated. For example, if a given verification statistic shows that a model has a high bias and low reliability, that can seem to provide a researcher with all they need to know to make the next iteration of the model perform better, having no need for any other statistical input. However, this is an example of the law of the instrument: “If the only tool you have is a hammer, you tend to see every problem as a nail”. More complete, meaningful verification requires examining forecast performance from multiple perspectives and applying a variety of statistical approaches that measure a variety of verification attributes.

In most cases, one or two forecast verification attributes will not provide enough information to understand the quality of a forecast. In the previous example where one verification statistic showed a model had high bias and low reliability, it could have been a situation where a second verification measure would have shown that the accuracy and resolution of the model were good, and making the adjustments to the next model iteration to correct bias and reliability would degrade accuracy and resolution. To fully grasp how well a particular forecast is performing, it is important to select the right combination of statistics that give you the “full picture” of the forecasts’ performance, which may consist of a more complete set of attributes, measuring the overall quality of your set of forecasts.

The following forecast attribute list is taken from Wilks (2019) and summarized for your convenience. Note how statistics showing one of these attributes on their own will not tell you exactly how “good” a forecast is, but combined with statistics showcasing other attributes you can have a better understanding of the utility of the forecast.

- Accuracy – The level of difference (or agreement) between the individual values of a forecast dataset and the individual values of the observation dataset. This should not be confused with the informal usage of “accurate”, which is often used by the general population to describe a forecast that has high quality.

- Skill – The accuracy of a forecast relative to a reference forecast. The reference can be a single forecast or a group of forecasts, with common choices being climatological values, persistence forecasts (forecasts that do not change over time), and older numerical model versions.

- Bias – The similarity between the mean forecast and mean observation. Note that this differs slightly from the accuracy attribute, which measures the individual value’s similarity.

- Reliability – The agreement between conditional forecast values and the distribution of the observation values resulting from that condition. Another way to think of reliability is as a measure of all of the observational value distributions that could happen given a forecast value.

- Resolution – Closely related to reliability, resolution is the measure of the forecast’s ability to resolve different observational distributions given a change in the forecast value. Simply put, if value X is forecast, how different is the resulting distribution of observations from the one that follows a forecast of value Y?

- Discrimination – Discrimination can be thought of as the converse of resolution: it is the measure of how the forecast’s distribution differs given a change in the observation value. For example, if a forecast is just as likely to predict a tornado regardless of the actual observation of a tornado occurring, that forecast would have a low discrimination ability for tornadoes.

- Sharpness – This property pertains only to the forecast with no consideration of its observational pair. If the forecast does not deviate from a consistent (e.g., climatological) distribution, and instead sticks close to a “climatological value”, it exhibits low sharpness. If the forecast has the ability to produce values different from climatology that change the distribution, then it demonstrates sharpness.

Binary Categorical Forecasts


The first group of verification types to consider is one of the more basic, but most often used. Binary categorical forecast verification seeks to answer the question “did the event happen”. Some variables (e.g., rain/no rain) are by definition binary categorical, but every type of meteorological variable can be evaluated in the context of a binary forecast (e.g., by applying a threshold): Will the temperature exceed 86 degrees Fahrenheit? Will wind speeds exceed 15 knots? These are just some examples where the observations fall into one of only two categories, yes or no, which are created by the two categories of the forecast (e.g. the temperature will exceed 86 degrees Fahrenheit, or it will stay at or below 86 degrees Fahrenheit).

Imagine a simplified scenario where the forecast calls for rain.

A hit occurs when a forecast predicts a rain event and the observation shows that the event occurred. In the scenario, a hit would be counted if rain was observed. A false alarm would be counted when the forecast predicted an event, but the event did not occur (i.e., in the scenario, this would mean no rain was observed). As you may have figured out, there are two other possible scenarios to cover for when the forecast says an event will not occur.

To describe these, imagine a second scenario where the forecast says there will be no rain.

Misses count the occasions when the forecast does not predict the event to occur, but it is observed. In this new scenario, a miss would be counted if rain was observed. Finally, correct rejections are those times that a forecast says the event will not occur, and observations show this to be true. Thus, in the rainfall scenario a correct rejection would be counted if no rain was forecasted and no rain was observed.

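Because forecast verification is rarely performed on one event, a contingency table can be utilized to quickly convey the results of multiple events that all used the same binary event conditions. An example contingency table, built from the four outcomes described above, is shown here:

| | Observed: yes | Observed: no |
| --- | --- | --- |
| Forecast: yes | Hits | False alarms |
| Forecast: no | Misses | Correct rejections |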

Each of the paired categorical forecasts and observations can be assigned to one of the four categories of the contingency table. The statistics that are used to describe the categorical forecasts’ scalar attributes (accuracy, bias, reliability, etc.) are computed using the total counts in these categories.

It is important not to forget the total number of occurrences and non-occurrences, n, that are contained in all four categories. If n is too small, it can be easy to arrive at a misleading conclusion. For example, if a forecaster claims 100% accuracy in their rain forecast and produces a contingency table where the forecast values were all hits but n=4, the conclusion is technically correct, but not very scientifically sound!
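To make the bookkeeping concrete, the following is a minimal Python sketch (not part of MET or METplus) of how paired forecasts and observations could be sorted into the four contingency table categories. The function name `contingency_counts` and the temperature values are hypothetical and invented purely for illustration; the 86 degree threshold mirrors the example used earlier in this section.

```python
def contingency_counts(forecasts, observations, threshold):
    """Tally hits, false alarms, misses, and correct rejections.

    An "event" is any value strictly greater than `threshold`.
    """
    counts = {"hits": 0, "false_alarms": 0, "misses": 0, "correct_rejections": 0}
    for fcst, obs in zip(forecasts, observations):
        fcst_yes = fcst > threshold
        obs_yes = obs > threshold
        if fcst_yes and obs_yes:
            counts["hits"] += 1
        elif fcst_yes:
            counts["false_alarms"] += 1
        elif obs_yes:
            counts["misses"] += 1
        else:
            counts["correct_rejections"] += 1
    return counts


# Hypothetical temperatures in degrees Fahrenheit (illustration only).
fcst_temps = [88.0, 90.5, 84.0, 79.0, 87.2, 85.9]
obs_temps = [89.1, 85.0, 87.3, 80.2, 86.5, 83.0]

table = contingency_counts(fcst_temps, obs_temps, threshold=86.0)
n = sum(table.values())  # total number of occurrences and non-occurrences
print(table, "n =", n)
```

With only six pairs, this toy sample is exactly the kind of small n that should not be used to draw conclusions about forecast quality.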

Verification Statistics for Binary Categorical Forecasts


Most meteorological forecasts would be described as non-probabilistic, meaning the forecast value is provided with no additional information about the certainty in that value. Another term for this type of forecast is deterministic, and deterministic forecasts will be the focus of the verification statistics in this section. For more information on probabilistic forecasts and their corresponding statistics please refer to the probabilistic section. When verifying binary categorical forecasts, the only important factor is whether or not the event occurred: the assumed certainty in the forecast is 100%.

Numerous computationally-easy (and very popular) scalar statistics are within reach without too much manipulation of a contingency table’s counts.

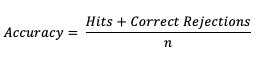

Accuracy (Acc)

The scalar attribute of Accuracy is measured as a simple ratio between the forecasts that correctly predicted the event or non-event and the total number of occurrences and non-occurrences, n. In equation format,

$$\mathrm{Acc} = \frac{\text{hits} + \text{correct rejections}}{n}$$

This measure (often called “Percent Correct”) is very easily computed and addresses how often a forecast is correctly predicting an event and non-event. As most verification resources will warn you, however, this measure should be used with caution, especially for an event that happens only rarely. The Finley tornado forecast study (1884) is an excellent example of the need for caution, with Finley reporting a 96.6% Accuracy for predicting a tornado due to the overwhelming count of correct negatives. Peers were quick to point out that a higher Accuracy (98.2%) could have been achieved with a persistence forecast of No Tornado! See how to use this statistic in METplus!
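The two Accuracy values quoted above can be checked with a few lines of Python. This sketch assumes the Finley contingency table counts as they are commonly reproduced in verification textbooks (28 hits, 72 false alarms, 23 misses, 2680 correct negatives); those counts are not given in this guide, so treat them as an assumption.

```python
# Finley (1884) tornado forecast counts as commonly reproduced in
# verification textbooks (assumed here; not listed in this guide).
hits, false_alarms, misses, correct_rejections = 28, 72, 23, 2680
n = hits + false_alarms + misses + correct_rejections

# Accuracy of the forecasts as issued.
finley_acc = (hits + correct_rejections) / n

# Accuracy of a constant "No Tornado" forecast: every "yes" forecast
# becomes a "no", so false alarms turn into correct rejections and
# hits turn into misses.
never_acc = (false_alarms + correct_rejections) / n

print(f"Finley forecasts:     {finley_acc:.1%}")
print(f"Always 'No Tornado':  {never_acc:.1%}")
```

The constant forecast scores higher even though it never detects a single tornado, which is why Accuracy alone can mislead for rare events.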

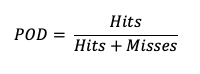

Probability of Detection (POD)

Probability of Detection (POD), also referred to as the Hit Rate, measures the fraction of observed events that were correctly forecast. Rather than computing the ratio of the correct forecasts to the entire occurrence and non-occurrence count (i.e., as in Accuracy), POD focuses only on the times the event was observed. Because it is conditioned on the observations, this measure is categorized as a discrimination statistic. POD is computed as

$$\mathrm{POD} = \frac{\text{hits}}{\text{hits} + \text{misses}}$$

This measure is useful for rare events (tornadoes, 100-year floods, etc.) as it will penalize (i.e. go toward 0) the forecasts when there are too many missed forecasts. See how to use this statistic in METplus!

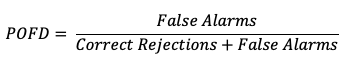

Probability of False Detection (POFD)

A counterpart to POD is the Probability of False Detection (POFD). POFD (also called the false alarm rate) measures the frequency of false alarm forecasts relative to the frequency that an event does not occur:

$$\mathrm{POFD} = \frac{\text{false alarms}}{\text{false alarms} + \text{correct rejections}}$$

Together, POD and POFD measure forecasts’ ability to discriminate between occurrences and non-occurrences of the event of interest. See how to use this statistic in METplus!

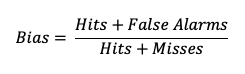

Frequency bias (Bias)

Frequency bias (a measure of, you guessed it, bias!) compares the count of “yes” forecasts to the count of “yes” events observed:

$$\mathrm{Bias} = \frac{\text{hits} + \text{false alarms}}{\text{hits} + \text{misses}}$$

This ratio does not provide specific information about the performance of individual forecasts, but rather is a measure of over- or under-forecasting of the event. See how to use this statistic in METplus!

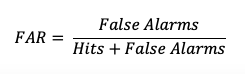

False Alarm Ratio (FAR)

The False Alarm Ratio (FAR) provides information about both the reliability and resolution attributes of forecasts. It computes the ratio of “yes” forecasts that did not occur to the total number of times a “yes” forecast was made (i.e., the proportion of “yes” forecasts that were incorrect):

$$\mathrm{FAR} = \frac{\text{false alarms}}{\text{hits} + \text{false alarms}}$$

FAR also is the first statistic covered in this session that has a negative orientation: a FAR of 0 is desirable, while a FAR of 1 shows the worst possible ratio of “yes” forecasts that were not observed relative to total “yes” forecasts. See how to use this statistic in METplus!

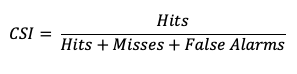

Critical Success Index (CSI)

The Critical Success Index (CSI), also commonly known as the Threat Score, is a second measure of the overall accuracy of forecasts (like the Accuracy measure mentioned earlier). Accuracy pertains to the agreement of individual forecast-observation pairs, and CSI can be calculated as

$$\mathrm{CSI} = \frac{\text{hits}}{\text{hits} + \text{misses} + \text{false alarms}}$$

Note that by definition CSI can be described as the ratio between the times the forecast correctly called for an event and the total times the forecast called for an event or the event was observed. Thus, CSI ignores correct negatives, which differentiates it from percent correct. A CSI of 1 indicates a highly accurate forecast, while a value of 0 indicates no accuracy. See how to use this statistic in METplus!
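As an illustration of how little manipulation these statistics require, the sketch below computes all six scalar statistics from the four contingency table counts. It is a plain Python helper written for this guide's definitions, not MET code; the function name, dictionary keys, and counts are hypothetical.

```python
def binary_scalar_stats(hits, false_alarms, misses, correct_rejections):
    """Return the scalar statistics of this section from 2x2 counts.

    No guard against zero denominators is included; a real workflow
    would need to handle empty rows or columns of the table.
    """
    n = hits + false_alarms + misses + correct_rejections
    return {
        "acc": (hits + correct_rejections) / n,
        "pod": hits / (hits + misses),
        "pofd": false_alarms / (false_alarms + correct_rejections),
        "bias": (hits + false_alarms) / (hits + misses),
        "far": false_alarms / (hits + false_alarms),
        "csi": hits / (hits + misses + false_alarms),
    }


# Hypothetical counts, invented for illustration only.
stats = binary_scalar_stats(hits=30, false_alarms=10, misses=20, correct_rejections=40)
for name, value in stats.items():
    print(f"{name}: {value:.3f}")
```

In this made-up sample the frequency bias of 0.8 indicates under-forecasting of the event, while the remaining values describe different aspects of the same 100 forecast-observation pairs.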

Binary Categorical Skill Scores


Skill scores can be a more meaningful way of describing a forecast’s quality. By definition, skill scores compare the performance of the forecasts to some standard or “reference forecast” (e.g., climatology, persistence, perfect forecasts, random forecasts). They often combine aspects of the previously-listed scalar statistics and can serve as a starting point for creating your own skill score that is better suited to your forecasts’ properties. Skill scores create a summary view of the contingency table, which is in contrast to the scalar statistics’ focus on one attribute at a time.

Three of the most popular skill statistics for categorical variables are the Heidke Skill Score (HSS), the Hanssen-Kuipers Discriminant (HK), and the Gilbert Skill Score (GSS). These measures are described here.

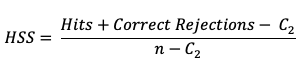

Heidke Skill Score (HSS)

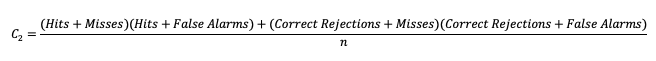

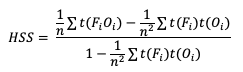

The HSS measures the proportion correct relative to the expected proportion correct that would be achieved by a “reference” forecast, denoted by C2 in the equation. In this instance, the reference forecast denotes a forecast that is completely independent of the observation dataset. In practice, the reference forecast often is based on a random, climatology, or persistence forecast. By combining the probability of a correct “yes” forecast (i.e., a hit) with the probability of a correct “no” forecast (i.e., a correct rejection) the resulting equation is

$$\mathrm{HSS} = \frac{\text{hits} + \text{correct rejections} - C_2}{n - C_2}$$

HSS can range from -1 to 1, with a perfect forecast receiving a score of 1. The equation presented above is a compact version which uses a sample climatology, C2, based on the counts in the contingency table. The C2 term expands to

$$C_2 = \frac{(\text{hits} + \text{false alarms})(\text{hits} + \text{misses}) + (\text{misses} + \text{correct rejections})(\text{false alarms} + \text{correct rejections})}{n}$$

This is a basic “traditional” version of HSS. METplus also calculates a modified HSS that allows users to control how the C2 term is defined. This additional control allows users to apply an alternative standard of comparison, such as another forecast or a basic standard such as a persistence forecast or climatology. See how to use these skill scores in METplus!

Hanssen-Kuipers Discriminant (HK)

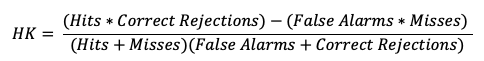

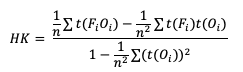

HK is known by several names, including the Peirce Skill Score and the True Skill Statistic. This score is similar to HSS (ranges from -1 to 1, perfect forecast is 1, etc.). HK is formulated relative to a random forecast that is constrained to be unbiased. In general, the focus of the HK is on how well the forecast discriminates between observed “yes” events and observed “no” events. The equation for HK is

$$\mathrm{HK} = \frac{(\text{hits} \times \text{correct rejections}) - (\text{false alarms} \times \text{misses})}{(\text{hits} + \text{misses})(\text{false alarms} + \text{correct rejections})}$$

which is equivalent to “POD minus POFD”. Because of its dependence on POD, HK can be similarly affected by infrequent events and is suggested as a more useful skill score for frequent events. See how to use this skill score in METplus!

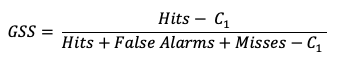

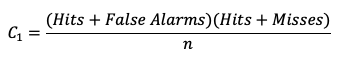

Gilbert Skill Score (GSS)

Finally, GSS measures the correspondence between forecasted and observed “yes” events. Sometimes called the Equitable Threat Score (ETS), GSS is a good option for those forecasted events where the observed “yes” event is rare. In particular, the number of correct negatives (which for a rare event would be large) does not appear directly in the GSS equation; it enters only through n in the C1 term. The GSS is given as

$$\mathrm{GSS} = \frac{\text{hits} - C_1}{\text{hits} + \text{misses} + \text{false alarms} - C_1}$$

GSS ranges from -1/3 to 1, with a perfect forecast receiving a score of 1 and a no-skill forecast receiving a score of 0. Similar to HSS, a compact version of GSS is presented using the C1 term. This term expands to

$$C_1 = \frac{(\text{hits} + \text{false alarms})(\text{hits} + \text{misses})}{n}$$
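The three skill scores can be sketched in Python directly from the four contingency table counts. This is an illustrative helper rather than MET's implementation, and the function name is hypothetical. The counts used are the Finley tornado counts as commonly reproduced in verification textbooks (28 hits, 72 false alarms, 23 misses, 2680 correct negatives); they are an assumption here because this guide does not list them.

```python
def binary_skill_scores(hits, false_alarms, misses, correct_rejections):
    """Return HSS, HK, and GSS computed from 2x2 contingency counts."""
    n = hits + false_alarms + misses + correct_rejections
    fcst_yes = hits + false_alarms
    fcst_no = misses + correct_rejections
    obs_yes = hits + misses
    obs_no = false_alarms + correct_rejections

    # Expected number of correct forecasts (C2) and expected number of
    # hits (C1) for a forecast that is independent of the observations.
    c2 = (fcst_yes * obs_yes + fcst_no * obs_no) / n
    c1 = fcst_yes * obs_yes / n

    hss = (hits + correct_rejections - c2) / (n - c2)
    hk = hits / obs_yes - false_alarms / obs_no  # POD minus POFD
    gss = (hits - c1) / (hits + misses + false_alarms - c1)
    return {"hss": hss, "hk": hk, "gss": gss}


# Finley counts as commonly reproduced in textbooks (assumed).
scores = binary_skill_scores(28, 72, 23, 2680)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

Despite the 96.6% Accuracy discussed earlier, all three scores sit well below 1 for these counts, which illustrates why skill scores give a more meaningful picture for rare events.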

METplus solutions for Binary Categorical Forecast Verification


Now that you know a bit more about dichotomous, deterministic forecasts and how to extract information on the scalar attributes through statistics, it’s time to show how you can access those same statistics in METplus!

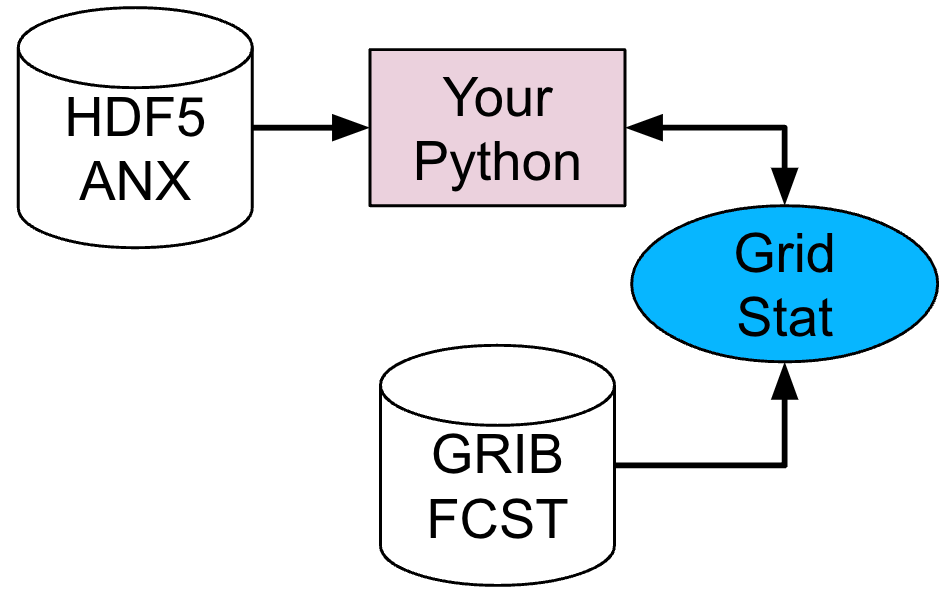

In order to better understand the delineation between METplus, MET, and METplus wrappers which are used frequently throughout this tutorial but are NOT interchangeable, the following definitions are provided for clarity:

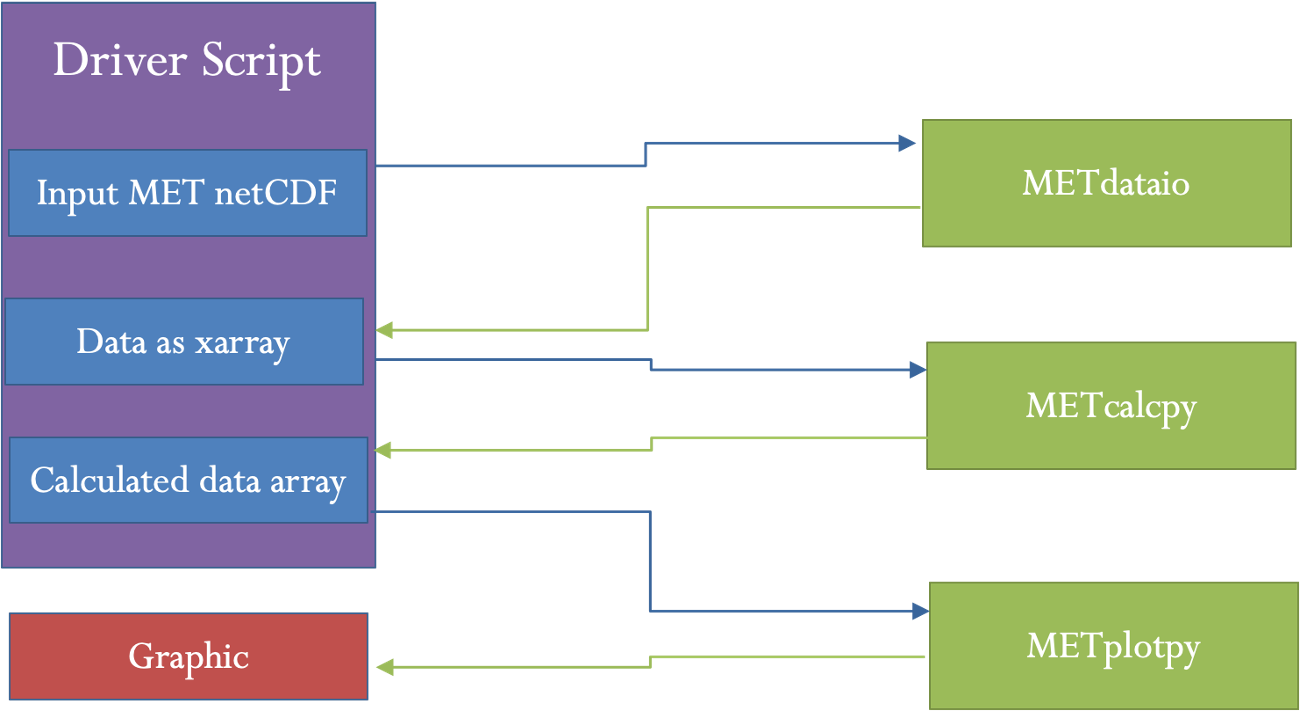

- METplus is best visualized as an overarching framework with individual components. It encapsulates all of the repositories: MET, METplus wrappers, METdataio, METcalcpy, and METplotpy.

- MET serves as the core statistical component that ingests the provided fields and commands to compute user-requested statistics and diagnostics.

- METplus wrappers is a suite of Python wrappers that provide low-level automation of MET tools and plotting capability. While there are examples of calling METplus wrappers without any underlying MET usage, these are the exception rather than the rule.

MET solutions

The MET User’s Guide provides an Appendix that dives into all of the statistical measures that MET calculates. METplus groups statistics together by application and type and makes them available to METplus users via several line types. For example, many of the statistics that were discussed above can be found in the Contingency Table Statistics (CTS) line type, which logically groups together statistics based directly on contingency table counts. In fact, MET allows users to directly access the contingency table counts through the aptly named Contingency Table Counts (CTC) line type.

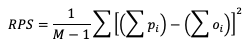

The line types that are output by MET depend on your selection of the appropriate line type using the output_flag dictionary. Note that certain line types may or may not be available in every tool: for example, both Point-Stat and Grid-Stat produce CTS line types, which allow users to access the various contingency table statistics for both point-based observations and gridded observations. In contrast, Ensemble-Stat is the only tool that can generate a Ranked Probability Score (RPS) line type, which provides statistics relevant to the analysis of ensemble forecasts. If you don’t see your desired statistic in the line type or tool you’d expect it to be in, be sure to check the Appendix to see if the statistic is available in MET and which line type it’s currently grouped with.

As for the categorical statistics that were just discussed, the MET User’s Guide Appendix contains an entry that discusses their use in MET.

Remember that for categorical statistics, including those that are associated with probabilistic datasets, you will need to provide an appropriate threshold that divides the observations and forecasts into two mutually exclusive categories. For more information on the available thresholding options, please review this section of the MET User’s Guide.

METplus Wrapper Solutions

The same statistics that are available in MET are also available with the METplus wrappers. To better understand how MET configuration options for the selection of statistics translate to METplus wrapper configuration options, you can utilize the Statistics and Diagnostics Section of the METplus wrappers User’s Guide, which lists all of the available statistics through the wrappers, including which tools can output particular statistics. To access the line types through the tool, select your desired tool and use this page to view a list of all available commands for that tool. Once you do, you’ll see that the tool will include several options that contain _OUTPUT_FLAG_. These options will exhibit the same behavior and accept the same settings as the line types in MET’s output_flag dictionary, so be sure to review the available settings to get the line type output you want.

METplus Examples of Binary Categorical Forecast Verification

The following two examples show a generalized method for calculating binary categorical statistics: one for a MET-only usage, and the same example but utilizing METplus wrappers. These examples are not meant to be completely reproducible by a user: no input data is provided, commands to run the various tools are not given, etc. Instead, they serve as a general guide of one possible setup among many that produce binary categorical statistics.

If you are interested in reproducible, step-by-step examples of running the various tools of METplus, you are strongly encouraged to review the METplus online tutorial that follows this statistical tutorial, where data is made available to reproduce the guided examples.


MET Example of Binary Categorical Forecast Verification

This example demonstrates categorical forecast verification in MET.

For this example, let’s examine Grid-Stat. Assume we wanted to verify a binary temperature forecast of greater than 86 degrees Fahrenheit. Starting with the general Grid-Stat configuration file, the following would resemble the minimum necessary settings/changes for the fcst and obs dictionaries:

    fcst = {
       field = [
          {
            name       = "TMP";
            level      = [ "Z0" ];
            cat_thresh = [ >86.0 ];
          }
       ];
    }
    obs = fcst;

We can see that the forecast field name in the forecast input file is named TMP, and is set accordingly in the fcst dictionary. Similarly, the Z0 level is used to grab the lowest (0th) vertical level the TMP variable appears on. Finally, cat_thresh, which controls the categorical threshold that the contingency table will be created with, is set to greater than 86.0. This assumes that the temperature units in the file are in Fahrenheit. The obs dictionary is simply copying the settings from the fcst dictionary, which is a method that can be used if both the forecast and observation input files share the same variable structure (e.g. both inputs use the TMP variable name, in Fahrenheit, with the lowest vertical level being the desired verification level).

Now all that’s necessary would be to adjust the output_flag dictionary settings to have Grid-Stat print out the desired line types:

    output_flag = {
       fho  = NONE;
       ctc  = STAT;
       cts  = STAT;
       mctc = NONE;
       mcts = NONE;
       cnt  = NONE;
       …
    }

In this example, we have told MET to output the CTC and CTS line types, which will contain all of the scalar statistics that were discussed in this section. Running this setup would produce one .stat file with the two line types that were selected, CTC and CTS. The CTC line would look something like:

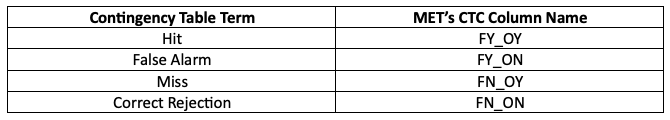

While the stat file full header column contents are discussed in the User’s Guide, the CTC line type values occupy the final 6 columns of the line, beginning after the “CTC” column. The first value is MET’s TOTAL column, which is the “total number of matched pairs”. You might better recognize this value as n, the summation of every cell in the contingency table. In fact, the following four columns of the CTC line type are synonymous with the contingency table terms, which have their corresponding MET terms provided in this table for your convenience:

| Contingency table term | MET CTC column |
| --- | --- |
| Hits | FY_OY |
| False alarms | FY_ON |
| Misses | FN_OY |
| Correct rejections | FN_ON |

Further descriptions of each of the CTC columns can be found in the MET User’s Guide. Note that the final column of the CTC line type, EC_VALUE, is only relevant to users verifying probabilistic data with the HSS_EC skill score.
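If you want to post-process CTC output yourself, a short Python sketch such as the following could pull the six CTC values out of a whitespace-delimited .stat line. It relies only on the layout described above (six values following the “CTC” column); the function name is hypothetical, and the sample line is a made-up placeholder with the header columns omitted, not real MET output.

```python
CTC_COLUMNS = ["TOTAL", "FY_OY", "FY_ON", "FN_OY", "FN_ON", "EC_VALUE"]


def parse_ctc_line(line):
    """Return the CTC values of one .stat line as a dictionary."""
    tokens = line.split()
    start = tokens.index("CTC") + 1  # values begin after the line type column
    values = tokens[start:start + len(CTC_COLUMNS)]
    if len(values) != len(CTC_COLUMNS):
        raise ValueError("expected 6 values after the CTC column")
    record = {name: int(value) for name, value in zip(CTC_COLUMNS[:5], values[:5])}
    record["EC_VALUE"] = float(values[5])
    return record


# Made-up placeholder line: the real header columns are omitted and the
# counts are invented for illustration only.
sample_line = "HEADER_COLUMNS_OMITTED CTC 100 30 10 20 40 0.5"
ctc = parse_ctc_line(sample_line)

hits, false_alarms = ctc["FY_OY"], ctc["FY_ON"]
misses, correct_rejections = ctc["FN_OY"], ctc["FN_ON"]
print(ctc)
```

A useful sanity check on any parsed line is that the four cell counts sum to TOTAL.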

The CTS line type is also present in the .stat file and is the second row. It has many more columns than the CTC line, where all of the scalar statistics and skill scores discussed previously are located. Focusing on the first few columns of the example output, you would find:

These columns can be understood by reviewing the MET User’s Guide guidance for CTS line type. After the familiar TOTAL or n column, we find statistics such as Base Rate, forecast mean, Accuracy, plus many more, all with their appropriate lower and upper confidence intervals and the bootstrap confidence intervals. Note that because the bootstrap library’s n_rep variable was kept at its default value of 0, bootstrap methods were not used and appear as NA in the stat file. While all of these statistics could be obtained from the CTC line type values with additional post-processing, the simplicity of having all of them already calculated and ready for additional group statistics or to advise forecast adjustments is one of the many advantages of using the METplus system.

METplus Wrapper Example of Binary Categorical Forecast Verification

To achieve the same outcome as the previous example but utilizing METplus wrappers instead of MET, very few changes would need to be made. Starting with the standard GridStat configuration file, we would need to set the _VAR1 settings appropriately:

    BOTH_VAR1_NAME = TMP
    BOTH_VAR1_LEVELS = Z0
    BOTH_VAR1_THRESH = gt86.0

Note how the BOTH option is utilized here (as opposed to individual FCST_ and OBS_ settings) since the forecast and observation datasets utilize the same name and level information. Because the loop/timing information is controlled inside the configuration file for METplus wrappers (as opposed to MET’s non-looping option), that information must also be set accordingly:

    LOOP_BY = INIT
    INIT_TIME_FMT = %Y%m%d%H
    INIT_BEG = 2023080700
    INIT_END = 2023080700
    INIT_INCREMENT = 12H
    LEAD_SEQ = 12

Finally, the desired line types need to be selected for output. In the wrappers, that looks like this:

    GRID_STAT_OUTPUT_FLAG_CTC = STAT
    GRID_STAT_OUTPUT_FLAG_CTS = STAT

After a successful run of METplus, the same .stat output file that was created in the MET example would be produced here, complete with CTC and CTS line type rows.

Multicategorical Forecasts


In practical applications of forecast verification, it’s often of interest to look at more than two categories, because the question cannot be reduced to a binary “did the event happen or not, and was the event forecasted or not”. Restricting forecast and observation datasets to binary options leads to the loss of important information about how the forecast performed (e.g., when multiple categories are combined into just two categories). For example, if the forecast called for rain but snow was observed instead, it can be useful to analyze how “good” the forecast was at delineating between rain, snow, and any other precipitation type. Luckily, the transformation of statistics from supporting binary categorical forecasts to the second group of forecasts, multi-category, is fairly straightforward.

Verification Statistics for Multicategorical Forecasts


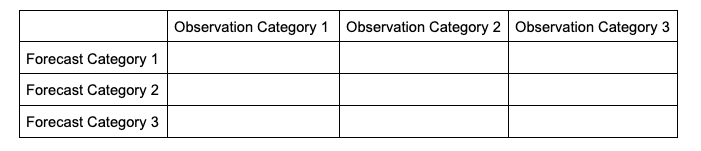

In order to make sense of how the statistics are modified when evaluating multiple categories, it will help to look at how the contingency table changes. The generalized contingency table for a three category table will look like the following:

A similar table could be constructed for comparisons of four, five, six, and so on, categories. Some statistical calculations are more easily computed when forecasts and observations are restricted to two options (as was the case in binary categorical forecasts), so one less common approach for a multi-category contingency table is to process the full table into multiple instances of 2x2 grids, focusing on one forecast category at a time. This way, each forecasted event or category can have scalar attributes calculated, as well as skill scores that reflect the entire forecast’s quality across the multiple categories. This is not a valid approach for calculating, among others, Heidke Skill Score and Gilbert Skill Score, but is considered here for a well-rounded approach to multicategorical verification.

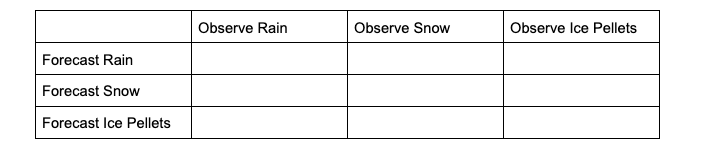

To demonstrate how scalar attributes are calculated for a multicategory forecast, imagine a scenario where the forecast can call for three separate precipitation types: rain, snow, and ice pellets. For this scenario, forecasts and observations of no precipitation are ignored (i.e., this evaluation is conditioned on some type of precipitation both occurring and being forecasted).

The contingency table would look like the following:

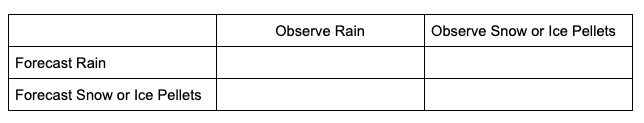

To extract the scalar attributes for rain, the simplified, 2x2 contingency table would look like this:

The same simplification can be done for snow and ice pellets. As this new 2x2 contingency table demonstrates, by reducing the multicategorical options to the binary choices of each forecasted event (e.g., did the forecast predict rain rather than snow or ice pellets, did the forecast predict snow rather than rain or ice pellets, did the forecast predict ice pellets rather than rain or snow) and evaluating the corresponding observations, scalar statistics such as POD, Bias, etc. can be calculated in the same method as the binary categorical forecasts.

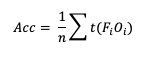

One of the unique scalar statistics that does not need a contingency table simplification is Accuracy (Acc). This is due to its definition, which, when presented in its general format, becomes:

Note that t(F_i O_i) is the number of forecasts in category i of the multicategory contingency table that had an observation of O_i, and n is the total number of occurrences and non-occurrences.

With this general format of Accuracy, essentially we seek to answer the question “how capable was the forecast at selecting the exact category?” To demonstrate how this would look in a 3x3 contingency table, let’s return to the previous scenario of a three precipitation type forecast. A perfect Accuracy forecast would have nonzero values only in the “Forecast Rain, Observation Rain”, “Forecast Snow, Observation Snow”, and “Forecast Ice Pellets, Observation Ice Pellets” cells, with the remaining cells being zero. The same top-left corner to lower-right corner of nonzero cells pattern would exist in the simplified 2x2 contingency table. Because no information about the forecast’s Accuracy is lost for the full count of forecast categories, Accuracy is the only scalar statistic that METplus will calculate from the full multicategory contingency table. See how to use this statistic in METplus!
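A short sketch of multicategory Accuracy follows, assuming the general form is the sum of the diagonal counts t(F_i O_i) divided by n, as the definitions above describe. The counts and the function name are hypothetical, chosen only for illustration.

```python
def multicategory_accuracy(table):
    """Fraction of forecasts that fall on the diagonal (exact category match)."""
    n = sum(sum(row) for row in table)
    correct = sum(table[i][i] for i in range(len(table)))
    return correct / n

# Hypothetical counts: rows = forecast (rain, snow, ice pellets), columns = observed.
example = [
    [50, 5, 3],
    [4, 30, 6],
    [2, 3, 10],
]
perfect = [
    [58, 0, 0],
    [0, 40, 0],
    [0, 0, 15],
]
print(round(multicategory_accuracy(example), 3))
print(multicategory_accuracy(perfect))
```

A table with nonzero counts only on the diagonal returns 1.0, matching the "perfect Accuracy" pattern described above.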

Multicategorical Skill Scores

While some statistics for multicategorical forecasts require simplifying the contingency table to two-categories, and therefore only show the forecast’s quality in respect to the individual category, certain skill scores are designed to evaluate forecasts with multiple categories, allowing the skill score to reflect the quality of the entire forecast spectrum.

Heidke Skill Score (HSS)

HSS has a general form to accommodate multicategory forecasts. While more computationally intense than the two-category equation provided, the multicategory formulation is likewise based on comparing the proportion correct in the forecast with the proportion correct that would be achieved by a “random” forecast. In practice, the reference is often something other than a “random” forecast, such as an older version of a model or climatology. This general form is

Note that t(F_i O_i) is the number of forecasts in category i of the multicategory contingency table that had an observation of O_i, t(F_i) is the total number of forecasts in category i, and n is the total number of occurrences and non-occurrences.

Hanssen-Kuipers Discriminant (HK)

Similarly, HK is generalized to
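The general forms of HSS and HK can be sketched numerically as below. This assumes the standard textbook formulation (proportion correct minus the chance agreement of the marginal frequencies, scaled by one minus the chance agreement for HSS and by one minus the sum of squared observed frequencies for HK), which may differ in notation from the tutorial's equations. The function names and the 2x2 counts are hypothetical.

```python
def marginals(table):
    """Return forecast frequencies, observed frequencies, and proportion correct."""
    k = len(table)
    n = sum(sum(row) for row in table)
    fcst = [sum(row) / n for row in table]                        # t(F_i) / n
    obs = [sum(row[j] for row in table) / n for j in range(k)]    # t(O_i) / n
    correct = sum(table[i][i] for i in range(k)) / n              # sum of t(F_i O_i) / n
    return fcst, obs, correct

def heidke(table):
    fcst, obs, correct = marginals(table)
    chance = sum(f * o for f, o in zip(fcst, obs))
    return (correct - chance) / (1.0 - chance)

def hanssen_kuipers(table):
    fcst, obs, correct = marginals(table)
    chance = sum(f * o for f, o in zip(fcst, obs))
    return (correct - chance) / (1.0 - sum(o * o for o in obs))

# Hypothetical 2x2 case, where the general forms should reduce to the binary scores.
binary = [[30, 10], [5, 55]]
print(round(heidke(binary), 4), round(hanssen_kuipers(binary), 4))
```

For a two-category table these functions give the same values as the binary HSS and HK (POD minus POFD), which is a convenient sanity check before applying them to larger tables.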

Gerrity Skill Score

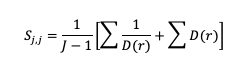

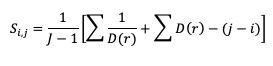

The Gerrity Skill Score is designed specifically for multicategory forecasts. While it is not as easily calculated as HSS and HK, it is useful for demonstrating the ability of the forecasts to delineate the correct event category when compared to random chance. The Gerrity Skill Score properly penalizes a forecast that has more than two options for an event, something not captured in the generalized forms of HSS or HK. This is achieved through the use of weights: s_{j,j}, which correspond to correct forecasts, and s_{j,i}, which correspond to incorrect forecasts. These weights are given as

and

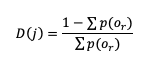

where D(r) is the likelihood ratio using dummy summation index r, calculated using

where p(o_r) is the sample climatological probability of observed category r.

Finally, the Gerrity Skill Score is computed through summing the product of the scoring weights and their corresponding joint probability distribution. That distribution is found by taking each count of the contingency table cell and dividing it by the total number of occurrences and non-occurrences across all cells, n. See how to use these statistics in METplus!
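The calculation described above can be sketched in Python as follows. The weight formulas used here are the commonly published Gerrity weights (with D(r) as the ratio of one minus the cumulative observed probability to the cumulative observed probability); they are assumed to match the tutorial's equations, which are not reproduced in the text. The function name is hypothetical, and the sketch assumes every category is observed at least once, since otherwise D(r) is undefined.

```python
def gerrity_skill_score(table):
    """Gerrity Skill Score for a KxK table (rows = forecast, columns = observed)."""
    k = len(table)
    n = sum(sum(row) for row in table)
    obs_freq = [sum(row[j] for row in table) / n for j in range(k)]  # p(o_r)

    # D(r) for r = 1..K-1, stored at index r-1.
    d = []
    cumulative = 0.0
    for r in range(k - 1):
        cumulative += obs_freq[r]
        d.append((1.0 - cumulative) / cumulative)

    def weight(i, j):
        """Scoring weight for cell (i, j), 0-based and symmetric in i and j."""
        i, j = min(i, j), max(i, j)
        below = sum(1.0 / d[r] for r in range(i))      # r = 1..i-1 in 1-based terms
        above = sum(d[r] for r in range(j, k - 1))     # r = j..K-1 in 1-based terms
        return (below - (j - i) + above) / (k - 1)

    # Sum of weight times joint probability over every cell.
    return sum(table[i][j] / n * weight(i, j) for i in range(k) for j in range(k))

perfect = [[5, 0, 0], [0, 3, 0], [0, 0, 2]]   # hypothetical counts
binary = [[30, 10], [5, 55]]                  # hypothetical counts
print(round(gerrity_skill_score(perfect), 6))
print(round(gerrity_skill_score(binary), 6))
```

Two checks are useful with this sketch: a perfect forecast scores 1, and for a two-category table the result equals HK.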

METplus Solutions for Multicategorical Forecast Verification

One important note concerns how multicategorical thresholds are defined within the MET and METplus wrapper configuration files. When multiple thresholds are listed using the cat_thresh variable to calculate any multicategorical line types, METplus requires that the thresholds be monotonically increasing and use the same inequality type. This ensures that the thresholds create unique and discrete bins of values, rather than overlapping bins that do not provide any sound statistical value. In practice, this means that the following two examples would result in a METplus error:

Example 1.

Example 2.

In example 1, the thresholds decrease with each entry which violates the requirement of monotonically increasing. With a simple reordering of the thresholds, example 1 can use the same thresholds and run with success:

In example 2, the final threshold uses a different inequality than the other two thresholds which violates the requirement that all multicategorical thresholds use the same inequality type. This rewrite of example 2 will provide the same information desired from the original thresholds, but will now successfully run in METplus:

This time, the rewrite changed the final inequality to match the first two while also keeping the final bin of values that METplus will calculate, >=15.5, consistent with the desired information. More information on how MET creates the value bins from multicategory thresholds is provided in the MET example of Multicategorical Forecast Verification.
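The two requirements can be expressed as a small pre-flight check. This is only a sketch of the rule as stated above, not how MET itself validates thresholds: the function name is hypothetical, only symbolic operators such as `>=` are parsed, equal neighboring values are treated as a violation (an assumption), and the threshold lists are made up for illustration.

```python
import re

def check_cat_thresh(thresholds):
    """Return a list of problems; an empty list means both rules are satisfied."""
    problems = []
    parsed = []
    for text in thresholds:
        match = re.fullmatch(r"(>=|<=|==|!=|>|<)\s*(-?\d+(?:\.\d+)?)", text.strip())
        if match is None:
            problems.append(f"cannot parse {text!r}")
            continue
        parsed.append((match.group(1), float(match.group(2))))
    operators = {op for op, _ in parsed}
    if len(operators) > 1:
        problems.append("thresholds mix inequality types: " + ", ".join(sorted(operators)))
    values = [value for _, value in parsed]
    if any(later <= earlier for earlier, later in zip(values, values[1:])):
        problems.append("threshold values are not monotonically increasing")
    return problems

print(check_cat_thresh([">=5.0", ">=10.0", ">=15.5"]))   # satisfies both rules
print(check_cat_thresh([">=15.5", ">=10.0", ">=5.0"]))   # decreasing values
print(check_cat_thresh([">=5.0", ">=10.0", ">15.5"]))    # mixed inequality types
```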

Now that you know a bit more about verification measures for multicategorical, deterministic forecasts, it’s time to show how you can access those same statistics in METplus!

In order to better understand the delineation between METplus, MET, and METplus wrappers which are used frequently throughout this tutorial but are NOT interchangeable, the following definitions are provided for clarity:

- METplus is best visualized as an overarching framework with individual components. It encapsulates all of the repositories: MET, METplus wrappers, METdataio, METcalcpy, and METplotpy.

- MET serves as the core statistical component that ingests the provided fields and commands to compute user-requested statistics and diagnostics.

- METplus wrappers is a suite of Python wrappers that provide low-level automation of MET tools and plotting capability. While there are examples of calling METplus wrappers without any underlying MET usage, these are the exception rather than the rule.

MET Solutions

The MET User’s Guide provides an Appendix that dives into statistical measures that it calculates, as well as the line type it is a part of. Statistics are grouped together by application and type and are available to METplus users in line types. To delineate between the calculation method for binary categorical and multicategorical forecast skill scores, METplus has two pairs of separate, but similar line types. As discussed in detail in the Binary Categorical forecasts section, the Contingency Table Statistics (CTS) line type and Contingency Table Counts (CTC) line type are for users who want single category forecast statistics. It’s important to note that the CTS line type must also be utilized by users who want scalar statistics from multicategorical forecasts, except for Accuracy. To accomplish this, simply follow the guidance listed in the Verification Statistics section for Multicategorical Forecasts. The complements to CTS and CTC in the multicategory group are the aptly named Multicategory Contingency Table Statistics (MCTS) line type and Multicategory Contingency Table Counts (MCTC) line type. Similar to the CTC, MCTC allows direct access to each of the counts from the contingency table of multicategorical forecasts. MCTS contains all of the skill scores that were discussed in the Multicategorical Verification statistics section, as well as the scalar statistic Accuracy, which are linked to their appendix description here for your convenience (except for Gerrity, which does not appear in the appendix):

METplus Wrapper Solutions

The same statistics that are available in MET are also available with the METplus wrappers. To better understand how MET configuration options for statistics translate to METplus wrapper configuration options, you can utilize the Statistics and Diagnostics Section of the METplus wrappers User’s Guide, which lists all of the statistics available through the wrappers, including which tools can output which statistics. To request a line type from a tool, find your desired tool and review the list of configuration options available for it. You’ll see that the tool has several options containing _OUTPUT_FLAG_, which exhibit the same behavior and accept the same settings as the line types in MET’s output_flag dictionary, so be sure to review the available settings to get the line type output you want.

METplus Examples of Multicategorical Forecast Verification

The following two examples show a generalized method for calculating multicategorical statistics: one for a MET-only usage, and the same example but utilizing METplus wrappers. These examples are not meant to be completely reproducible by a user: no input data is provided, commands to run the various tools are not given, etc. Instead, they serve as a general guide of one possible setup among many that produce multicategorical statistics.

If you are interested in reproducible, step-by-step examples of running the various tools of METplus, you are strongly encouraged to review the METplus online tutorial that follows this statistical tutorial, where data is made available to reproduce the guided examples.

MET Example of Multicategorical Forecast Verification

Here is an example that demonstrates multicategorical forecast verification in MET.

For this example, let’s use Point-Stat. Assume we wanted to verify a multicategory forecast of wind speeds over the ocean. Specifically of interest are speed thresholds of near gale force (13.9 m/s), gale force (17.2 m/s), tropical storm (24.5 m/s), and hurricane (32.7 m/s). Starting with the general Point-Stat configuration file, the following would resemble minimum necessary settings/changes for the fcst and obs dictionaries:

    fcst = {
       field = [
          {
            name       = "WIND";
            level      = [ "Z10" ];
            cat_thresh = [ >=13.9, >=17.2, >=24.5, >=32.7 ];
          }
       ];
    }
    obs = fcst;

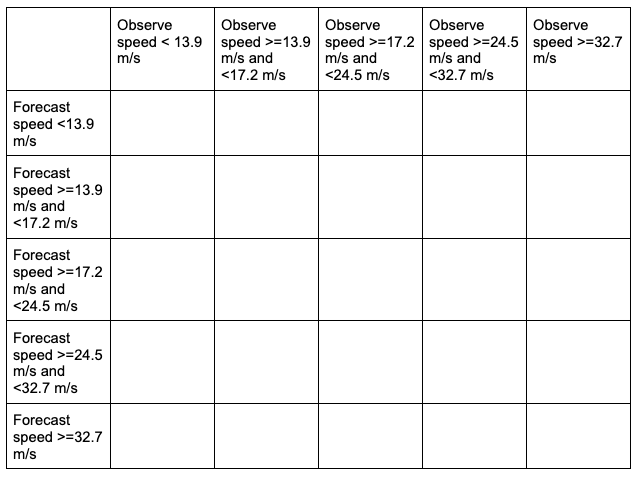

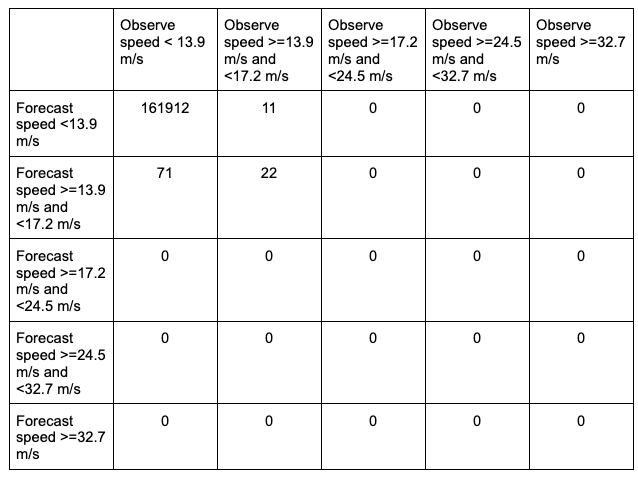

In this example, the forecast field in the forecast input file is named WIND, and name is set accordingly in the fcst dictionary. Wind speed is one of the unique variables in METplus that can be calculated from the u and v components of a grib1 or grib2 file if wind speed is not present in the file. Assuming the input file is in grib1 or grib2 format, MET will first check whether a WIND field is present; if it is, MET will use the values in that field for analysis. If not, MET will check for the u-component (UGRD) and v-component (VGRD) fields and, if found, compute the wind speed field for analysis. A level of Z10 selects the vertical level of 10 that WIND appears on, which for this input file corresponds to the 10 meter level. Finally, cat_thresh, which controls the categorical thresholds used to create the multicategory contingency table, is set to four separate thresholds, with each value corresponding to one of the wind speed thresholds of interest. The example’s chosen thresholds assume that the wind speed units in the file are meters per second. All of the additional fcst field entries from the general Point-Stat configuration file were removed. Note how MET uses four thresholds to create five unique, discrete bins of wind speeds with a contingency table that would look like the following:

The table includes a “hidden” bin containing wind speeds less than 13.9 m/s that is not explicitly listed by a threshold in the MET settings, but rather implied: the bins are mutually exclusive and together they cover the complete real number line. This is why it is important to remember the “monotonically increasing and same inequality type” requirement when setting multicategorical forecast thresholds in METplus. For more discussion on this, review the METplus Solutions for Multicategorical Forecast Verification section.
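To make the binning concrete, the sketch below assigns wind speeds to the five bins implied by the four `>=` thresholds and tallies forecast-observation pairs into a 5x5 table. It illustrates the idea only and is not MET's implementation; the function names and the matched pairs are hypothetical.

```python
from bisect import bisect_right

THRESHOLDS = [13.9, 17.2, 24.5, 32.7]  # m/s, each used with >= in cat_thresh

def bin_index(speed):
    """Bin 0 is the implied '<13.9' bin; bin 4 is the '>=32.7' bin."""
    return bisect_right(THRESHOLDS, speed)

def build_table(pairs):
    """Tally (forecast, observation) pairs; rows = forecast bin, columns = observed bin."""
    size = len(THRESHOLDS) + 1
    table = [[0] * size for _ in range(size)]
    for fcst, obs in pairs:
        table[bin_index(fcst)][bin_index(obs)] += 1
    return table

# Hypothetical matched pairs of (forecast, observed) wind speed in m/s.
pairs = [(12.0, 14.5), (18.0, 17.2), (33.1, 30.0), (25.0, 24.5)]
for row in build_table(pairs):
    print(row)
```

Because every value lands in exactly one bin, the cell counts always sum to the number of matched pairs.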

The obs dictionary is simply copying the settings from the fcst dictionary, which is a method that can be used if both the forecast and observation input files share the same variable structure and file type (e.g. both inputs use the WIND variable name, in m/s, with the Z10 level corresponding to the 10 meter level).

Now all that’s necessary is to adjust the output_flag dictionary settings to have Point-Stat print out the desired line types:

    fho  = NONE;
    ctc  = NONE;
    cts  = NONE;
    mctc = STAT;
    mcts = STAT;
    cnt  = NONE;
    …

In this example, we have told MET to output the MCTC and MCTS line types, which will produce one .stat file with the two line types that were selected. The MCTC line would look something like:

While the full header column contents of the .stat file are discussed in the User’s Guide, the columns specific to the MCTC line type are the final columns of the line, beginning after the “MCTC” column. The first value is MET’s TOTAL column, which is the “total number of matched pairs”. You might better recognize this value as n, the summation of every cell in the contingency table. The following value is the number of dimensions or bins of the contingency table. As discussed above, providing four categorical thresholds creates a 5x5 contingency table. That means that we expect, and receive, 25 cells of data that make up the contingency table. They are listed starting with the lowest forecast and observation bin pair, with the observation bin incrementing first. For the contingency table provided in this example, it would look like the following:
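The cell ordering described above can be turned back into a table with a few lines of Python. This sketch assumes the counts have already been extracted from the line as integers and that they follow the order F1_O1, F1_O2, and so on (observation incrementing first); the values shown are hypothetical and use three bins for brevity rather than the five in this example.

```python
def reshape_mctc(n_cat, counts):
    """Rebuild the table from flat counts; rows = forecast bin, columns = observed bin."""
    if len(counts) != n_cat * n_cat:
        raise ValueError("expected n_cat * n_cat counts")
    return [counts[i * n_cat:(i + 1) * n_cat] for i in range(n_cat)]

# Hypothetical values standing in for the TOTAL, N_CAT, and count columns.
total = 20
n_cat = 3
counts = [6, 1, 0, 2, 5, 1, 0, 1, 4]   # F1_O1, F1_O2, F1_O3, F2_O1, ...

table = reshape_mctc(n_cat, counts)
for row in table:
    print(row)
print(sum(counts) == total)   # the cells should add up to TOTAL
```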

Note that the final column of the MCTC line type, EC_VALUE, is only relevant to users verifying probabilistic data with the HSS_EC skill score.

The MCTS line type is also present in the .stat file as the second row. In this example, the contents would be:

Compared to the statistics available in the CTS line type for dichotomous categorical forecasts, fewer verification statistics can be applied to a multicategorical contingency table, since most of the contingency table verification statistics require a simplified 2x2 contingency table. The columns that are available in the MCTS line type are listed in the MET User’s Guide guidance for the MCTS line type. After the declaration of the line type (MCTS), the familiar TOTAL or n column, and the number of bins created from the thresholds provided, we find Accuracy, HK, HSS, the Gerrity Skill Score, and HSS_EC, each with its lower and upper bootstrap confidence limits. Accuracy has an additional two columns that give the normal confidence limits in addition to the bootstrap confidence limits. Note that because the bootstrap library’s n_rep variable was kept at its default value of 0, bootstrap methods were not used and the bootstrap limits appear as NA in the stat file. While all of these statistics could be obtained from the MCTC line type values with additional post-processing, the simplicity of having all of them already calculated and ready for additional group statistics or to advise forecast adjustments is one of the many advantages of using the METplus system.

METplus Wrapper Example of Multicategorical Forecast Verification

To achieve the same success as the previous example but utilizing METplus wrappers instead of MET, very few adjustments would need to be made. Starting with the standard PointStat configuration file, we would need to set the _VAR1 settings appropriately:

    BOTH_VAR1_NAME = WIND
    BOTH_VAR1_LEVELS = Z10
    BOTH_VAR1_THRESH = ge13.9, ge17.2, ge24.5, ge32.7

Note how the BOTH option is utilized here (as opposed to individual FCST_ and OBS_ settings) since the forecast and observation datasets utilize the same name and level information. Because the loop/timing information is controlled inside the configuration file for METplus wrappers (as opposed to MET’s non-looping option), that information must also be set accordingly:

    INIT_TIME_FMT = %Y%m%d%H
    INIT_BEG = 2023080700
    INIT_END = 2023080700
    INIT_INCREMENT = 12H
    LEAD_SEQ = 12

Finally, the desired line types need to be selected for output. In the wrappers, that looks like this:

    POINT_STAT_OUTPUT_FLAG_MCTC = STAT
    POINT_STAT_OUTPUT_FLAG_MCTS = STAT

With a proper setting of the input and output directories, file templates, and a successful run of METplus, the same .stat output file that was created in the MET example would be produced here, complete with MCTC and MCTS line type rows.

Continuous Forecasts

When considering a forecast, one of the essential aspects is the actual value predicted by the forecast. For example, a forecast for 2 meter maximum air temperature of 75 degrees Fahrenheit is explicitly calling for one value to occur as the highest recorded temperature value for the entire day for that single point in space. If the maximum observed 2 meter air temperature was 77 degrees Fahrenheit for that point instead, then the forecast was incorrect: some might consider this the end of the story, another “blown” forecast. However, if the entire spectrum of 2 meter air temperature values that could have been forecast is considered, we can ask the question “how good or bad was that forecast, really?” After all, wasn’t the forecast only 2 degrees Fahrenheit off from what was observed? Continuous forecast verification is a category of verification that considers the entire real value spectrum that a forecast variable can take on, rather than individual discrete points or categories as is the case with dichotomous and multicategorical verification.

Verification Statistics for Continuous Forecasts

The nature of continuous forecast verification is a direct comparison of the forecast and observed values and measurement of the relationship of those two values over the real number range. We can easily infer that the scalar statistics and skill scores used for these kinds of forecasts will be different from those used for binary and multicategorical forecasts. In fact, many of the statistics that are used as “introductory statistics” are derived for continuous verification along the real number range, as continuous verification is a natural way to look at forecasts (i.e., how close or far the forecast and observed values are from each other).

Mean Errors

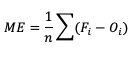

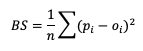

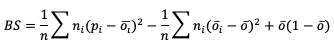

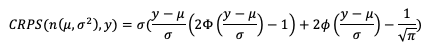

The simplest continuous measure is the mean error (ME), which is the average difference between two groups of data. So when you see “error” it is simply a measure of a difference. ME is calculated as

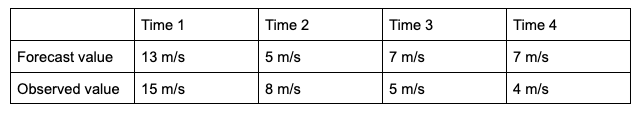

ME can range over the real numbers and has the obvious advantage of providing immediate insight into how far away, on average, the forecast values are from their corresponding observations. It is extremely easy to calculate, with a perfect forecast (i.e., a forecast that exactly corresponds to the observations) characterized by an ME of 0. ME serves as an important measurement of arithmetic bias. Because positive and negative differences offset one another in the average, ME does not reflect the magnitude of the individual forecast-observation differences, and a forecast that has compensating errors could receive a mean error of 0. Essentially, ME indicates whether a forecast has errors that, on average, are centered on zero, or whether it is overforecasting or underforecasting on average. Consider the following scenario for wind speed forecasts at the same location for four different times:

You’ll find the ME computed with these data equals 0, even though the difference between forecast and observation values for all of the times are non-zero. That’s because those errors that are positive (the forecast was greater than the observed value) are exactly negated by those errors that are negative (the forecast was less than the observed value). As noted earlier, ME is a good measure of bias; however, it is not a measure of accuracy.
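The cancellation is easy to reproduce. The values below are hypothetical wind speeds chosen so that the errors offset exactly; they are not the values from the tutorial's table.

```python
# Hypothetical wind speeds (m/s) at four times; not the tutorial's table values.
forecasts = [5.0, 8.0, 6.0, 9.0]
observations = [7.0, 6.0, 8.0, 7.0]

errors = [f - o for f, o in zip(forecasts, observations)]
mean_error = sum(errors) / len(errors)

print(errors)       # every individual error is non-zero
print(mean_error)   # yet the errors cancel in the average
```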

This ability to effectively “hide” errors calls for a more robust error calculation to understand the forecasts’ accuracy. To that end, a few additional choices for measuring error are available.

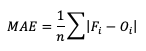

Mean absolute error (MAE), while similar to ME, examines the absolute values of the errors, which are summed and averaged, providing a measure of accuracy as opposed to bias. Examining the absolute values of the errors restricts MAE’s range from zero to positive infinity, with a perfect score of zero:

MAE also differs from ME in that it measures forecast accuracy, while ME measures bias. MAE provides a summary of the average magnitudes of the errors, but does not provide information about the direction (positive or negative) of the error magnitude.

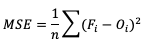

Mean Squared Error (MSE) is another statistic focused on the characteristics of the forecast errors that measures forecast accuracy. In particular, MSE summarizes the squared differences between the forecast and observed values:

This measure has the same limits and perfect score as MAE, but squaring the differences does more than remove the sign of the differences: by squaring the error values, differences greater than one are penalized more severely than those that are less than one, with the penalty increasing at a squared rate. Using MSE, a forecast that over-forecasts temperature by two degrees from the observed value consistently over six time steps will have a smaller MSE than a forecast that over-forecasts temperature from the observed value by three degrees for three time steps and matched the observed value for the remaining three time steps.
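The comparison in the paragraph above can be checked directly. The sketch below encodes the two described forecasts as lists of errors (forecast minus observation) and computes MAE and MSE for each; the helper names are illustrative.

```python
# Errors (forecast minus observation) for the two forecasts described above.
steady_errors = [2.0] * 6               # two degrees too warm at all six time steps
mixed_errors = [3.0] * 3 + [0.0] * 3    # three degrees too warm three times, exact otherwise

def mae(errors):
    return sum(abs(e) for e in errors) / len(errors)

def mse(errors):
    return sum(e * e for e in errors) / len(errors)

print(mae(steady_errors), mse(steady_errors))
print(mae(mixed_errors), mse(mixed_errors))
```

The second forecast has the smaller MAE but the larger MSE, which shows how squaring shifts the penalty toward the larger individual errors.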

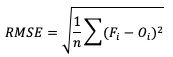

The final error statistic to discuss is Root Mean Squared Error (RMSE). One of the most familiar statistical measures, RMSE is simply the square root of MSE, and has the same units as the forecasts and observations, providing an average error with a square-weight:

Similar to MSE, RMSE penalizes larger error magnitudes more heavily than smaller ones, and like MSE, RMSE provides no information on the sign (positive or negative) of the errors. It has the same range of values as MSE and a perfect forecast score would be an RMSE of zero. See how to use these statistics in METplus!

Standard Deviations

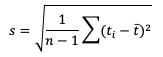

Unlike the family of mean error statistics, standard deviation focuses on the individual sources of values; namely, the forecasts and the observations. Standard deviation measures how much the values in a dataset vary around the average of their respective group. Keep in mind that the general equation provided below can be applied to both observations and forecasts (and in some cases it may be meaningful to compare the standard deviation of the forecasts to the standard deviation of the observations):

See how to use this statistic in METplus!

Multiplicative Bias

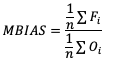

Multiplicative bias (MBIAS) is the ratio of the average forecast to the average observation. It is calculated using the following formula and can take any real value:

MBIAS has some of the same drawbacks as ME. In particular, MBIAS does not indicate the magnitudes of the forecast errors, which allows a perfect score of 1 to be achieved if the forecast errors compensate for each other (see the example for ME above for more information). It is recommended that any variable field evaluated with MBIAS contain values of a single sign (all positive or all negative), as mixing signs (as can happen with, for example, temperatures) would result in strange or unusable MBIAS results that include even more value compensation. See how to use this statistic in METplus!

Correlation Coefficients (Pearson, Spearman Rank, and Kendall’s Tau)

Because continuous forecasts and observations exist on the same real value spectrum, measurements of the linear association of the forecast-observation pairs create a vital foundation for verification statistics. Three measures of correlation (Pearson, Spearman Rank, and Kendall's Tau) measure this relationship in different ways.

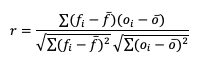

Beginning with the Pearson correlation coefficient, PR_CORR, the linear association of the forecast-observation pairs is measured by taking the sample covariance (i.e., a quantity built from the summed products of the two groups’ paired anomalies) and dividing by the product of the same two groups’ standard deviations. In forecast verification, these two groups are the forecast and observation datasets and appear as

with r as the typical notation for PR_CORR outside of METplus. From a visual perspective, PR_CORR measures how closely the pairs of points (i.e., the forecast and corresponding observation values) fall along a diagonal line. More generally these scatter plots can suggest the apparent relationship of any two variable fields, but for the purpose of this tutorial we will stay focused on the forecast-observation comparison. PR_CORR can range from negative one to positive one, with the extremes of the range representing a perfect negative or positive linear association between the forecasts and observations. PR_CORR remains sensitive to outliers and can produce a good correlation value for a poor forecast if that forecast has compensating errors.

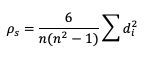

Another way to estimate the association between forecasts and their corresponding observations involves ranking the forecast values within the forecast group, doing the same for the observation group, and then computing the correlation of the ranks. The Spearman Rank correlation coefficient (SP_CORR) takes this approach while utilizing the same equation as PR_CORR. Because the rank values of both the forecast and observation datasets used to calculate SP_CORR are restricted to the integer values of 1 to n (the total number of matched forecast-observation pairs), the equation of SP_CORR can be simplified to

where d_i denotes the difference in ranking between the pair of datafields.

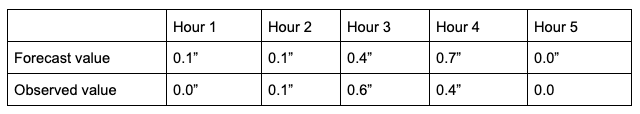

What does ranking of matched pairs look like with actual data? Let’s use the following scenario of rainfall forecasts and the observed rainfall over a five hour period for a set location:

Using a ranking system for the datasets where 0.0” is assigned 1 and everything greater than that value is assigned a value that is integer-based, monotonically increasing by 1, the data table above would be transformed into the following ranks:

It is important that the forecast-observation pairs stay paired together, even when transformed into their rank values. Note that when there is a tie in the ranks (i.e., a value appears more than once), each of the tied values receives the average of the ranks they would otherwise occupy, which keeps the sum of the ranks for the number of values (in this scenario, five) consistent with the assumption behind the SP_CORR equation. The next step is calculating the differences between the forecast ranks and the observed ranks, squaring the differences and summing them. After that, apply the rest of the equation and you should find ρ_s = 0.825, a strong correlation value.
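The steps just described can be followed in a few lines of Python. The rank values below are inferred from the tie descriptions given for this same rainfall example in the Kendall's Tau discussion and should be checked against the tutorial's rank table; the simplified equation is assumed to be the standard form, one minus six times the sum of squared rank differences divided by n(n² - 1).

```python
# Ranks by hour (Hour 1 to Hour 5); tied values share the average rank.
# These ranks are inferred from the text, not copied from the tutorial's table.
forecast_ranks = [2.5, 2.5, 4.0, 5.0, 1.0]
observed_ranks = [1.5, 3.0, 5.0, 4.0, 1.5]

def spearman_from_ranks(f_ranks, o_ranks):
    """Simplified Spearman formula applied to already-ranked, paired data."""
    n = len(f_ranks)
    sum_d_squared = sum((f - o) ** 2 for f, o in zip(f_ranks, o_ranks))
    return 1.0 - 6.0 * sum_d_squared / (n * (n ** 2 - 1))

rho_s = spearman_from_ranks(forecast_ranks, observed_ranks)
print(round(rho_s, 3))  # 0.825
```

The squared rank differences sum to 3.5, so the result is 1 - 21/120 = 0.825, in agreement with the value quoted above.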

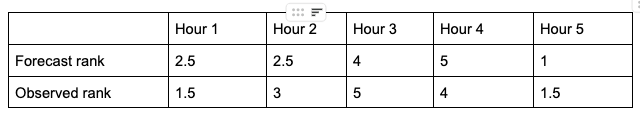

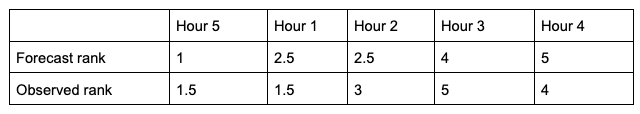

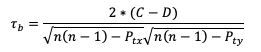

The Kendall’s Tau statistic (𝝉) is a variation on the SP_CORR equation ranking method. While all of the variable field values are ranked as was the case with SP_CORR, it’s the relationship between a pair of ranks and the pairs of ranks that follow, rather than the ranks within a given pair, that constitute 𝝉. To better understand, let’s first look at the equation for 𝝉:

In the equation, N_C is a count of the concordant pairs, and N_D is a count of the discordant pairs. Simply put, a concordant pair is when a reference pair has rank values that are both larger or both smaller than a comparison pair. For example, consider the two pairs (3, 5) and (6, 8). Regardless of which pair is treated as the reference pair, the comparison pair will have both of the larger rank values (the case where (3, 5) is the reference pair) or both of the smaller rank values (the case where (6, 8) is the reference pair). A discordant pair is the complement to this: each pair in the comparison has one value that is bigger and one that is smaller. For example, the pairs (3, 5) and (6, 2) are considered discordant. In the case where the compared pairs are identical, there are multiple methods to choose from: N_C and N_D can be incremented by ½ each, the tie can be ignored, or the tie can be penalized. For simplicity in counting, it is recommended to arrange one of the datasets in ascending or descending order (being careful to keep the matched pairs together) so that fewer comparisons need to be made.

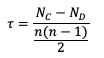

To show how 𝝉 is calculated, let’s return to the previous example used to calculate SP_CORR. Because we’re using the same rainfall values, the rankings of those values will also be the same. For the simplicity of counting concordant and discordant pairs, the forecast ranks have been arranged in increasing order (which explains why Hour 5 is now preceding Hour 1):

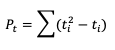

From this small change in the ordering, we see that two of the observed ranks do not increase (going from left to right): between Hour 5 and Hour 1 (they match at 1.5), and between Hour 3 and Hour 4 (5 compared to 4). Additionally, the forecast ranks between Hour 1 and Hour 2 do not increase (they match). In order to account for these ties, a “penalty” will be calculated using the following equation:

where t_i is the number of ranks that are involved in a given tie. Because both the forecast and observed ranks have a tie of two ranks, both will receive a P_t penalty of 2. As a result of this new handling of ties, an alternate form of 𝝉, sometimes referred to as 𝝉_b, must be used:

Using this slightly modified equation, we find a 𝝉_b value of 0.56. As 𝝉 has the same range as SP_CORR (-1 to 1), a value of 0.56 shows some positive correlation between the two datasets. See how to use these statistics in METplus!

Anomaly Correlation

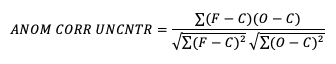

While similar to the traditional correlation coefficient, anomaly correlation is a somewhat different formulation: rather than directly comparing the pairs of forecast and observation values relative to the respective group averages, as is the case in PR_CORR, anomaly correlation is a measure of the deviations of forecast and observation values from climatological averages. Utilizing this third independent dataset for comparisons highlights any pattern of departures from the climatology, a correspondence of the forecast anomalies to the observed anomalies.

There are two widely used versions of anomaly correlation. The first is the centered anomaly correlation, which includes the mean error relative to the climatology. This version is calculated using

If it is not desirable to include the errors, the uncentered anomaly correlation is the better choice:

While added effort is required to find a matching climatology reference dataset for this statistic, it remains a highly useful statistic, especially for spatial verification, and is commonly used in operational forecasting centers. Anomaly correlation has a range from -1 to 1. See how to use this statistic in METplus!
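To make the difference between the two versions concrete, here is a minimal sketch. It assumes the common definitions in which the centered form subtracts the mean forecast anomaly and mean observed anomaly before correlating, while the uncentered form correlates the anomalies as they are. The function name and all data values are hypothetical and are not taken from MET.

```python
from math import sqrt


def anomaly_correlation(fcst, obs, climo, centered=True):
    """Correlate forecast and observed anomalies about a climatology."""
    f_anom = [f - c for f, c in zip(fcst, climo)]
    o_anom = [o - c for o, c in zip(obs, climo)]
    if centered:
        # Remove the mean anomaly from each dataset before correlating
        f_mean = sum(f_anom) / len(f_anom)
        o_mean = sum(o_anom) / len(o_anom)
        f_anom = [f - f_mean for f in f_anom]
        o_anom = [o - o_mean for o in o_anom]
    num = sum(f * o for f, o in zip(f_anom, o_anom))
    den = sqrt(sum(f * f for f in f_anom) * sum(o * o for o in o_anom))
    return num / den


climo = [10.0, 10.0, 10.0]  # hypothetical climatological means
fcst = [11.0, 12.0, 13.0]   # forecast anomalies of +1, +2, +3
obs = [13.0, 12.0, 11.0]    # observed anomalies of +3, +2, +1

centered = anomaly_correlation(fcst, obs, climo, centered=True)
uncentered = anomaly_correlation(fcst, obs, climo, centered=False)
print(round(centered, 3), round(uncentered, 3))
```

In this made-up case both datasets are warmer than climatology everywhere, so the uncentered value is positive, while the centered value is -1 because the patterns about their own means are exactly opposite.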

METplus Solutions for Continuous Forecast Verification

Now that you know a bit more about continuous, deterministic forecasts and the related statistics, it’s time to show how you can access those same statistics in METplus!

Unsurprisingly, METplus requires a slightly different approach to continuous variables and the thresholds that are used on these datasets. Rather than the cat_thresh arrays that are used for categorical variable fields, METplus uses cnt_thresh for filtering data prior to computing continuous statistics. Keep that in mind if you end up with a blank continuous line type file output; you may not have applied the correct thresholds!
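The effect of filtering before computing continuous statistics can be sketched as follows. This is only an illustration of the idea: the `keep` predicate is a hypothetical stand-in for whatever cnt_thresh selects, the data values are made up, and the way MET combines forecast and observation thresholds is not modeled here.

```python
from math import sqrt


def continuous_stats(pairs, keep):
    """Return (TOTAL, ME, MAE, RMSE) for the pairs that pass `keep`."""
    errors = [f - o for f, o in pairs if keep(f, o)]
    total = len(errors)
    if total == 0:
        return 0, None, None, None  # nothing survived the filter
    me = sum(errors) / total
    mae = sum(abs(e) for e in errors) / total
    rmse = sqrt(sum(e * e for e in errors) / total)
    return total, me, mae, rmse


# Hypothetical (forecast, observation) rainfall pairs in mm
pairs = [(0.0, 0.0), (2.0, 1.0), (5.0, 7.0), (0.0, 3.0)]

all_pairs = continuous_stats(pairs, lambda f, o: True)
wet_pairs = continuous_stats(pairs, lambda f, o: f > 0.0 and o > 0.0)
none_left = continuous_stats(pairs, lambda f, o: f > 100.0 and o > 100.0)
print(all_pairs, wet_pairs, none_left)
```

The last call shows the situation described above: a threshold that no pair satisfies leaves zero matched pairs, so there is nothing to compute statistics from.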

In order to better understand the delineation between METplus, MET, and METplus wrappers, which are used frequently throughout this tutorial but are NOT interchangeable, the following definitions are provided for clarity:

- METplus is best visualized as an overarching framework with individual components. It encapsulates all of the repositories: MET, METplus wrappers, METdataio, METcalcpy, and METplotpy.

- MET serves as the core statistical component that ingests the provided fields and commands to compute user-requested statistics and diagnostics.

- METplus wrappers is a suite of Python wrappers that provide low-level automation of MET tools and plotting capability. While there are examples of calling METplus wrappers without any underlying MET usage, these are the exception rather than the rule.

MET Solutions

The MET User’s Guide provides an Appendix that dives into each and every statistical measure that it calculates, as well as the line type it is a part of. Statistics are grouped together by application and type and are available to METplus users in line types. For example, many of the statistics that were discussed above can be found in the Continuous Statistics (CNT) line type, which logically groups together statistics based on continuous variable fields.

In MET, the line types that are written out depend on which ones you enable in the output_flag dictionary. Note that certain line types may or may not be available in every tool: for example, both Point-Stat and Grid-Stat produce CNT line types, which gives users access to the various continuous statistics for both point-based observations and gridded observations. But Ensemble-Stat is the only tool that can generate a Ranked Probability Score (RPS) line type, which contains statistics relevant to the analysis of ensemble forecasts. If you don’t see your desired statistic in the line type or tool you’d expect it to be in, be sure to check the Appendix to see if the statistic is available in MET and which line type it’s currently grouped with.

As for the statistics discussed previously, here are links to the User’s Guide Appendix entries that discuss their use in MET:

- ME

- MAE

- MSE

- RMSE

- S (note that the linked s is for forecast; the observation s2 is just below it)

- MBIAS

- PR_CORR

- SP_CORR

- 𝝉 or KT_CORR

- ANOM_CORR

METplus Wrapper Solutions

The same statistics that are available in MET are also available with the METplus wrappers. To better understand how MET configuration options for statistics translate to METplus wrapper configuration options, you can utilize the Statistics and Diagnostics Section of the METplus wrappers User’s Guide, which lists all of the statistics available through the wrappers, including which tools can output which statistics. To enable a line type through a wrapper, find your desired tool in the User’s Guide and review its list of available configuration options. You’ll see that the tool has several options that contain _OUTPUT_FLAG_. These exhibit the same behavior and accept the same settings as the line types in MET’s output_flag dictionary, so be sure to review the available settings to get the line type output you want.
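The naming pattern described above can be sketched as a small helper. The function itself is hypothetical and is not part of METplus; it simply joins a tool name, _OUTPUT_FLAG_, and a line type, which is the pattern seen in settings such as GRID_STAT_OUTPUT_FLAG_CNT. Always confirm the exact option names against the wrappers User’s Guide for your tool.

```python
def wrapper_output_flags(tool, output_flag):
    """Translate a MET-style output_flag mapping into wrapper setting lines."""
    prefix = tool.upper()
    return [
        f"{prefix}_OUTPUT_FLAG_{line_type.upper()} = {value}"
        for line_type, value in output_flag.items()
    ]


# Request only the CNT line type from Grid-Stat
settings = wrapper_output_flags("grid_stat", {"cnt": "STAT"})
for line in settings:
    print(line)
```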

METplus Examples of Continuous Forecast Verification

The following two examples show a generalized method for calculating continuous statistics: one using MET only, and the same example using METplus wrappers. These examples are not meant to be completely reproducible by a user: no input data is provided, commands to run the various tools are not given, etc. Instead, they serve as a general guide to one possible setup among many that produce continuous statistics.

If you are interested in reproducible, step-by-step examples of running the various tools of METplus, you are strongly encouraged to review the METplus online tutorial that follows this statistical tutorial, where data is made available to reproduce the guided examples.

MET Example of Continuous Forecast Verification

Here is an example that demonstrates deterministic forecast verification in MET.

For this example, let’s examine two tools, PCP-Combine and Grid-Stat. Assume we want to verify a 6 hour period of precipitation forecasts over the continental United States. Using these tools, we will first combine the hourly forecast files into a 6 hour summation file with PCP-Combine. Then we will use Grid-Stat to place both datasets on the same verification grid and let MET calculate the continuous statistics available in the CNT line type.

Starting with PCP-Combine, we first need to understand what the desired output is in order to know how to properly run the tool from the command line, as PCP-Combine does not use a configuration file. As stated previously, this scenario assumes the precipitation forecasts are hourly files and need to match the 6 hour accumulation of the observation file. Of the four commands available in PCP-Combine, two seem to provide potential paths forward: sum and add.

While multiple methods using these two commands may successfully sum the forecast files, let’s assume that our forecast data files contain a time reference variable that is not CF-compliant (see the CF-compliant time table in the MET User’s Guide). As such, MET will be unable to determine the initialization and valid time of the files (unless they are explicitly set in the field array). Because the “add” command relies only on a list of files passed by the user to determine what is being summed, that is the command we will use.

With the information above, we construct the PCP-Combine command as:

pcp_combine -add \

hrefmean_2017050912f018.nc \

hrefmean_2017050912f017.nc \

hrefmean_2017050912f016.nc \

hrefmean_2017050912f015.nc \

hrefmean_2017050912f014.nc \

hrefmean_2017050912f013.nc \

-field 'name="P01M_NONE"; level="(0,*,*)";' -name "APCP_06" \

hrefmean_2017051006_A06.nc

The command above displays the required arguments for PCP-Combine’s “add” command as well as an optional argument. The “add” command requires a list of the input files to be processed. In this instance we listed the files directly, but we could instead have passed an ASCII file that lists all of the input files. Because each of the input forecast files contains the exact same variable fields, we use the “-field” argument to name exactly one variable field to be processed in all files. Alternatively, we could have listed the same field information (i.e. 'name="P01M_NONE"; level="(0,*,*)";') after each input file. Finally, the output file name is set to “hrefmean_2017051006_A06.nc”, which references the valid time of the file contents as well as how many hours the precipitation is accumulated over. The optional argument “-name” sets the output variable field name to “APCP_06”, following GRIB standard practices.
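When the list of input files follows a regular naming pattern, it can be generated rather than typed by hand. The sketch below assumes the file naming used in this example (initialization time, then a three-digit lead hour) and only prints the resulting command; it does not run PCP-Combine. The variable names are illustrative.

```python
import shlex
from datetime import datetime, timedelta

init = datetime(2017, 5, 9, 12)  # initialization time of the forecast
last_lead, accum_hours = 18, 6   # final lead hour and accumulation length

# Hourly files covering the accumulation window, latest lead first
inputs = [
    f"hrefmean_{init:%Y%m%d%H}f{lead:03d}.nc"
    for lead in range(last_lead, last_lead - accum_hours, -1)
]

# The output name carries the valid time and the accumulation length
valid = init + timedelta(hours=last_lead)
output = f"hrefmean_{valid:%Y%m%d%H}_A{accum_hours:02d}.nc"

command = [
    "pcp_combine", "-add", *inputs,
    "-field", 'name="P01M_NONE"; level="(0,*,*)";',
    "-name", f"APCP_{accum_hours:02d}",
    output,
]
print(shlex.join(command))
```

Building the command as a list and quoting it with shlex.join keeps the embedded quotes in the -field argument intact when the command is pasted into a shell.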

After a successful run of PCP-Combine, we now have a six hour accumulated precipitation forecast field and are ready to set up our Grid-Stat configuration file. Starting with the general Grid-Stat configuration file, the following would resemble the minimum necessary settings/changes for the fcst and obs dictionaries:

fcst = {

field = [

{

name = "APCP_06";

level = [ "(*,*)" ];

}

];

}

obs = {

field = [

{

name = "P06M_NONE";

level = [ "(@20170510_060000,*,*)" ];

}

];

}

Both input files are in netCDF format and so require the asterisk and parentheses method of level access. The observation input file level requires a third dimension because more than one observation time is available. We used the “@” symbol to take advantage of MET’s ability to select from a time dimension by the user-desired time, which in this case was the verification time. Note how the variable field name that was set in PCP-Combine’s output file is used in the forecast field information. Because the two variable fields are not on the same grid, an independent grid is chosen as the verification grid. This is set using the in-tool method for regridding:

regrid = {

to_grid = "CONUS_HRRRTLE.nc";

…

Now that the verification is being performed on our desired grid and the variable fields are set to be read in, all that’s left in the configuration file is to set the output. This time, we’re interested in the CNT line type and set the output_flag dictionary accordingly:

output_flag = {

fho = NONE;

ctc = NONE;

cts = NONE;

mctc = NONE;

mcts = NONE;

cnt = STAT;

sl1l2 = NONE;

…

And as a sanity check, we can see the input fields the way MET is ingesting them by selecting the desired nc_pairs_flag dictionary settings:

nc_pairs_flag = {

latlon = TRUE;

raw = TRUE;

diff = TRUE;

climo = FALSE;

climo_cdp = FALSE;

seeps = FALSE;

weight = FALSE;

nbrhd = FALSE;

fourier = FALSE;

gradient = FALSE;

distance_map = FALSE;

apply_mask = FALSE;

}

All that’s left is to run MET with a command line prompt:

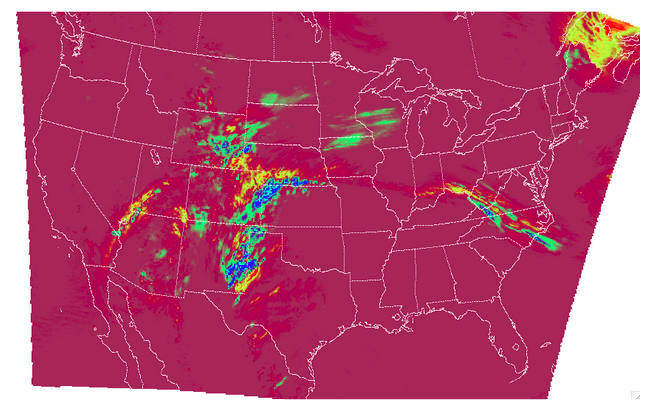

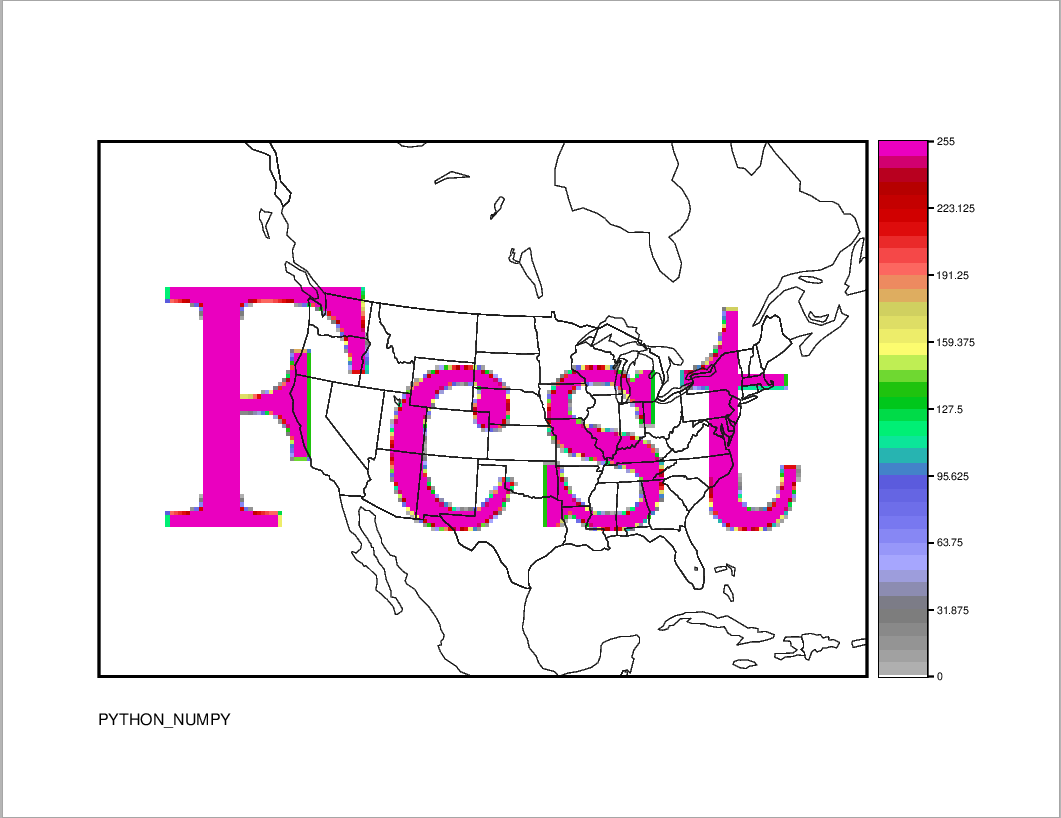

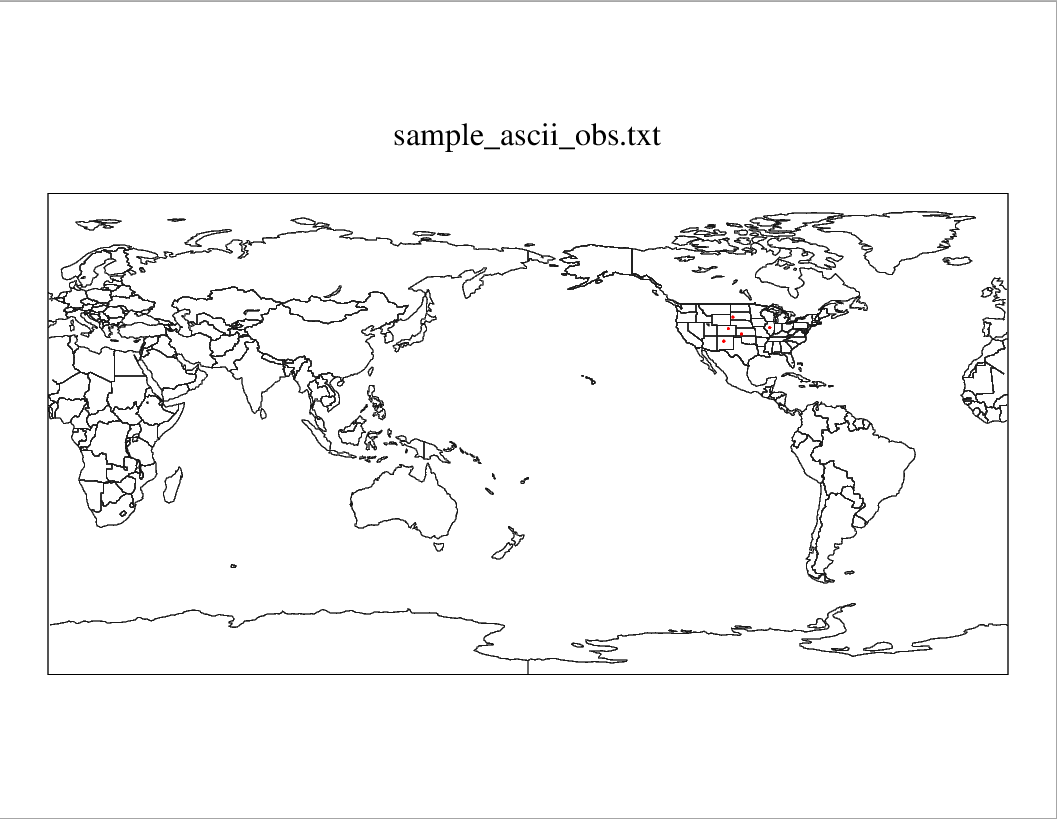

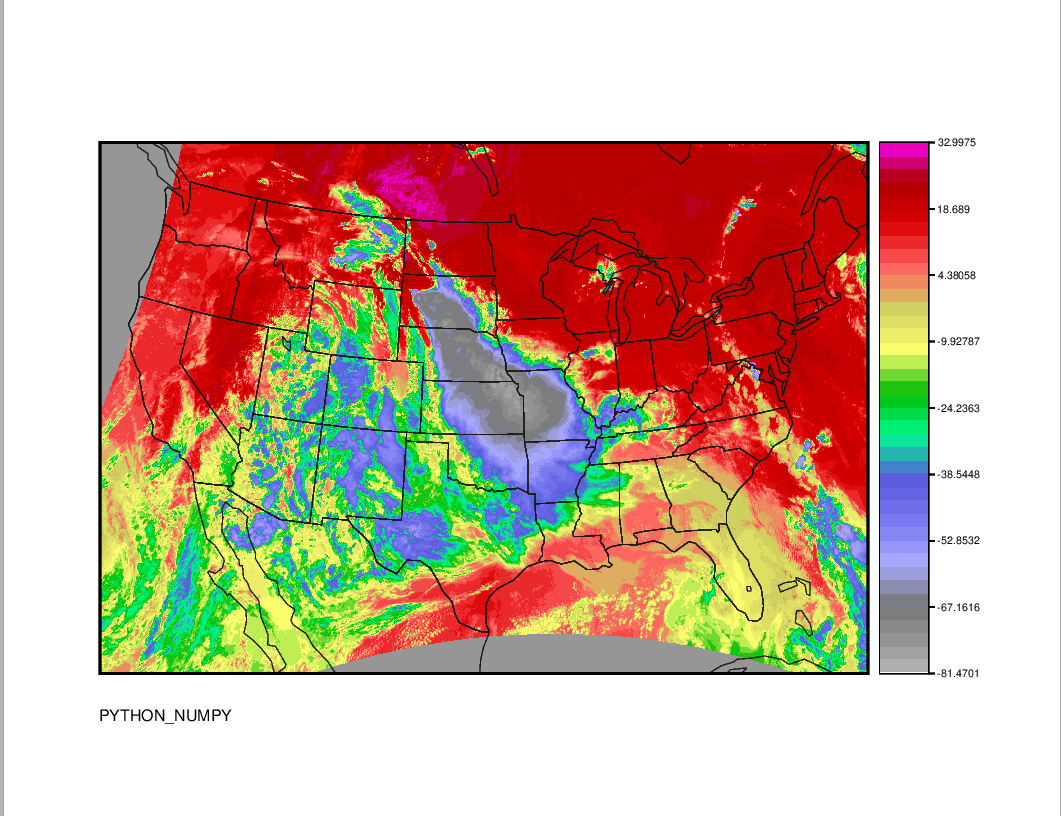

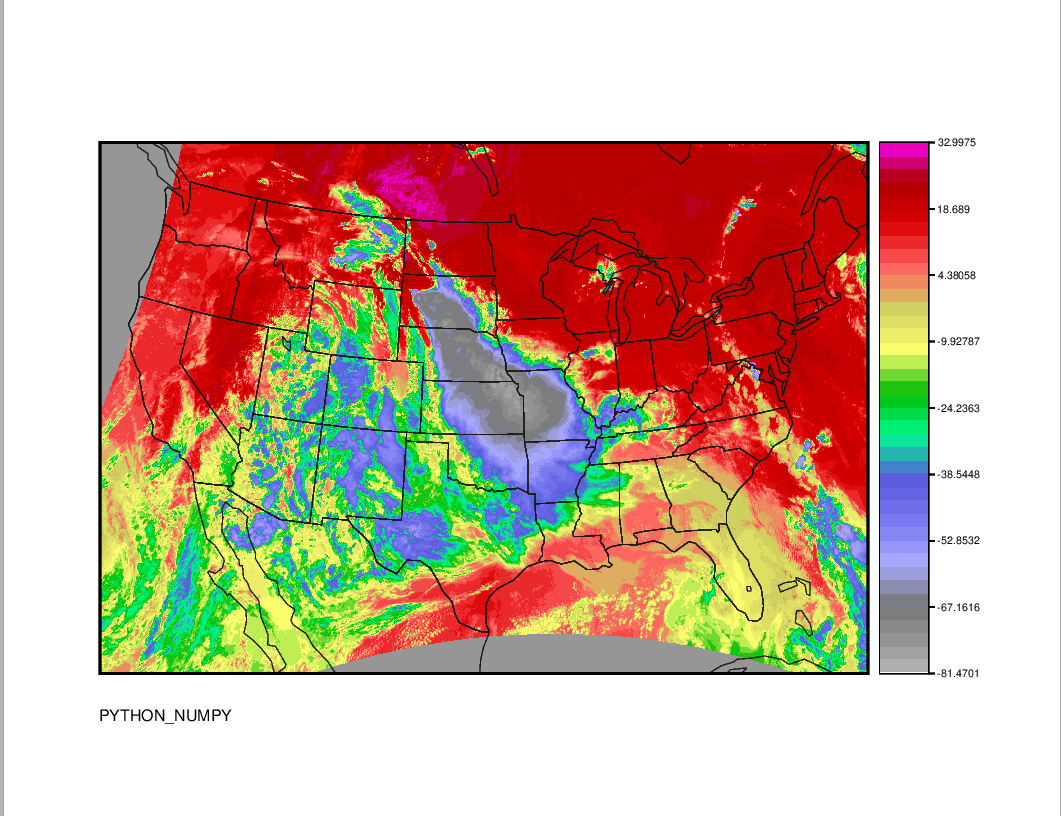

The resulting two files have a wealth of information and statistics. The netCDF output contains our visual confirmation that the forecast and observation input fields were interpreted correctly by MET, as well as the difference between the two fields which is provided below:

For the CNT line type output we review the .stat file and find something similar to the following:

The columns that are available in the CNT line type are listed in the MET User’s Guide guidance for the CNT line type. After the declaration of the line type (CNT) and the familiar TOTAL, or matched pairs, column, we find a wealth of statistics including the forecast and observation means, the forecast and observation standard deviations, ME, and MSE, along with all of the other statistics discussed in this section, each with its appropriate lower and upper confidence intervals and bootstrap confidence intervals. Note that because the bootstrap dictionary’s n_rep variable was kept at its default value of 0, bootstrap methods were not used and the bootstrap confidence intervals appear as NA in the stat file.

You’ll also note that the ranking statistics (SP_CORR and KT_CORR) are listed as NA because we did not set rank_corr_flag to TRUE in the Grid-Stat configuration file. This was done intentionally: in order to calculate ranking statistics, MET needs to assign each and every matched pair a rank and then perform the calculations. For a large dataset with numerous matched pairs, this is computationally intensive and can significantly increase runtime. Given that our example had over 1.5 million matched pairs, these statistics are best left to a smaller domain. Let’s create that smaller domain in a METplus wrappers example!

METplus Wrapper Example of Continuous Forecast Verification

To achieve the same result as the previous example using METplus wrappers instead of MET, very few adjustments need to be made. Because METplus wrappers have the helpful feature of chaining multiple tools together, we’ll start off by listing all of the tools we want to use. Recall that for this example, we want to include a smaller verification area to enable the rank correlation statistics as well. This results in the following process list:

PROCESS_LIST = PCPCombine, GridStat, GridStat(rank)

The second listing of GridStat uses the instance feature to allow a second run of Grid-Stat with different settings. Now we need to set the _VAR1 settings appropriately:

FCST_VAR1_NAME = {FCST_PCP_COMBINE_OUTPUT_NAME}

FCST_VAR1_LEVELS = "(*,*)"

OBS_VAR1_NAME = P06M_NONE

OBS_VAR1_LEVELS = "({valid?fmt=%Y%m%d_%H%M%S},*,*)"

We’ve utilized a different setting, FCST_PCP_COMBINE_OUTPUT_NAME, to control what forecast variable name is verified. This way if a different forecast variable were to be used in a later run (assuming it had the same level dimensions as the precipitation), the forecast variable name would only need to be changed in one place instead of multiple. We also utilize METplus wrappers' ability to set an index based on a time, behaving similarly to the “@” usage in the MET example. Now we need to create all of the settings for PCPCombine:
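To see what the time-based level resolves to, the substitution can be imitated with Python’s strftime. This is only a stand-in for the wrappers’ own template handling, not a call into METplus, and it assumes the 06 UTC 10 May 2017 valid time used throughout this example.

```python
from datetime import datetime

# Valid time of this example: 2017050912 initialization plus an 18 hour lead
valid = datetime(2017, 5, 10, 6)

# Stand-in for the {valid?fmt=%Y%m%d_%H%M%S} template substitution
obs_level = f"({valid.strftime('%Y%m%d_%H%M%S')},*,*)"
print(obs_level)
```

The resulting time string matches the one written out by hand in the observation level of the MET example.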

FCST_PCP_COMBINE_METHOD = ADD

FCST_PCP_COMBINE_INPUT_DATATYPE = NETCDF

FCST_PCP_COMBINE_CONSTANT_INIT = true

FCST_PCP_COMBINE_INPUT_ACCUMS = 1

FCST_PCP_COMBINE_INPUT_NAMES = P01M_NONE

FCST_PCP_COMBINE_INPUT_LEVELS = "(0,*,*)"

FCST_PCP_COMBINE_OUTPUT_ACCUM = 6

FCST_PCP_COMBINE_OUTPUT_NAME = APCP_06

From these settings, we see that all of the arguments from the command line for PCP-Combine are present: we will use the “add” method to loop over six netCDF files, each containing a variable field name of P01M_NONE and accumulation times of one hour. The output variable should be named APCP_06 and will be stored in the location as directed by FCST_PCP_COMBINE_OUTPUT_DIR and FCST_PCP_COMBINE_OUTPUT_TEMPLATE (not shown above).

Because the loop/timing information is controlled inside the configuration file for METplus wrappers (as opposed to MET’s non-looping option), that information must also be set accordingly, paying close attention that both instances of GridStat and PCPCombine will behave as expected:

INIT_TIME_FMT = %Y%m%d%H

INIT_BEG=2017050912

INIT_END=2017050912

INIT_INCREMENT=12H

LEAD_SEQ = 18
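As a rough picture of how these timing settings translate into run times, the sketch below steps from the first initialization time to the last by the increment and pairs each with every lead. It is an illustration of the looping idea only, assuming the values shown above, and is not how the wrappers are implemented internally.

```python
from datetime import datetime, timedelta

fmt = "%Y%m%d%H"                 # INIT_TIME_FMT
init_beg = datetime.strptime("2017050912", fmt)
init_end = datetime.strptime("2017050912", fmt)
increment = timedelta(hours=12)  # INIT_INCREMENT = 12H
lead_seq = [18]                  # LEAD_SEQ, in hours

run_times = []
init = init_beg
while init <= init_end:
    for lead in lead_seq:
        run_times.append((init, lead, init + timedelta(hours=lead)))
    init += increment

for init, lead, valid in run_times:
    print(f"init={init:{fmt}} lead={lead:03d} valid={valid:{fmt}}")
```

Because the begin and end times are identical, there is exactly one run time, so each tool in the process list runs once for the 18 hour lead.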

Recall that we need to perform the verification on an alternate grid for one of the instances, and a small subset of the larger area in the other. So in the first instance configuration file area (anywhere outside of the [rank] instance configuration file area) we add

GRID_STAT_REGRID_METHOD = NEAREST