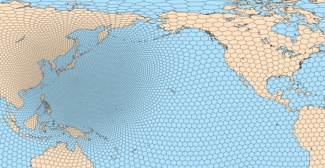

The Rapid Refresh Forecast System (RRFS) is a critical component of NOAA’s Unified Forecast System (UFS) initiative. The RRFS has been in development for several years and is planned for operational implementation in late 2024. It will provide the NWS with an hourly updating, high-resolution ensemble capability built on a state-of-the-art, convective-scale ensemble data assimilation and forecasting system that uses the Finite-Volume Cubed-Sphere (FV3) dynamical core. Further, the RRFS will greatly simplify NCEP’s model production suite by subsuming several regional modeling systems, such as the North American Mesoscale model (NAM), the Rapid Refresh (RAP), and the High-Resolution Rapid Refresh (HRRR). This is a significant step toward the UFS vision of unifying development efforts around a simplified and streamlined system.

Since 2018, Spring Forecasting Experiments (SFEs) in NOAA’s Hazardous Weather Testbed have played an important role in evaluating convective-scale, FV3-based model configurations for severe weather forecasting applications. Each year, more model configurations using the FV3 dynamical core have been evaluated during the SFE, and SFE 2022 was no exception: multiple agencies contributed 59 FV3-based model configurations, up from 24 in 2021 and 10 in 2020. This increase is due in part to groups such as the University of Oklahoma’s Multi-scale data Assimilation and Predictability (MAP) group running ensembles to determine how best to configure a future RRFS.

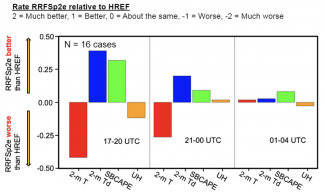

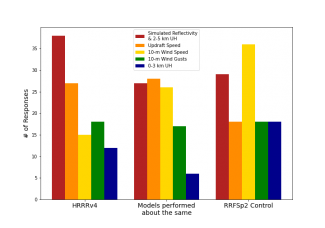

Developers received feedback through multiple methods during the SFEs. In formal evaluations, participants subjectively rated how well convection-allowing models and ensembles forecast severe convective weather such as tornadoes, hail, and wind. Feedback was also collected on specific aspects of model performance that interested the developers, such as how well models using different data assimilation schemes depicted ongoing storms an hour into the forecast. In 2022, for the first time in the SFE, evaluations were blinded so that participants did not know which model configuration they were evaluating. Blinding the evaluations and displaying the configurations in random order removed any bias participants had for or against certain configurations based on name alone.
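
The blinding and random ordering described above can be illustrated with a minimal Python sketch. This is not the tooling used in the SFE. The function name `blind_configurations`, the anonymous label format, and the configuration names in the usage note are all hypothetical and chosen only for illustration.

```python
import random


def blind_configurations(config_names, seed=None):
    """Shuffle configurations and hide their names behind anonymous labels.

    Returns (labels, key): `labels` is the list of anonymous labels in
    display order, and `key` maps each label back to the real name so
    that results can be unblinded after the evaluation.
    """
    rng = random.Random(seed)       # seeded generator for a reproducible order
    shuffled = list(config_names)   # copy so the caller's list is not modified
    rng.shuffle(shuffled)           # random display order
    # Hypothetical label format: "Configuration 1", "Configuration 2", ...
    key = {f"Configuration {i}": name for i, name in enumerate(shuffled, start=1)}
    return list(key), key
```

As a usage example, calling `blind_configurations(["config_a", "config_b", "config_c"])` with these made-up names returns the labels shown to participants plus a key that the organizers keep private until the evaluations are complete.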