As operational centers move inexorably toward ensemble-based probabilistic forecasting, the role of the DTC as a bridge between research and operations has expanded to include testing and evaluation of ensemble forecasting systems.

In 2010, the DTC ensemble task area was designed with the ultimate goal of providing an environment in which ensemble-related techniques developed by the NWP community could be extensively tested and evaluated. Because these results should be immediately relevant to the operational centers (e.g., NCEP/EMC and AFWA), the planning and execution of these DTC evaluation activities have been closely coordinated with those centers. All of the specific components of an ensemble system have been subject to evaluation, including ensemble design, post-processing, products, and verification. More information about the organization and goals of the DTC Ensemble Task can be found at: http://journals.ametsoc.org/doi/abs/10.1175/BAMS-D-11-00209.1

Recently, the DTC Ensemble team has evaluated how changes in the configuration of the National Centers for Environmental Prediction/Environmental Modeling Center (NCEP/EMC) Short-Range Ensemble Forecast (SREF) affect its performance. The focus has been on two areas: the impact of increased horizontal resolution and the impact of changes in the model microphysics schemes. In the first experiment, the performance of SREF with 16 km horizontal grid spacing (the current operational setting) was compared with that of SREF at a potential future grid spacing of 9 km. The second experiment focused on changes in the microphysical parameterizations.

The current operational version of SREF uses only one microphysics scheme (Ferrier). That version has now been compared with an experimental ensemble configuration that also includes two other microphysics options, WSM6 and Thompson. Although these preliminary tests used only the SREF members from one WRF core (WRF-ARW), NMMB members will be added to the analysis in future tests. Each of the compared ensemble systems consisted of seven members: a control and two sets of three members with varying initial perturbations. This preliminary study covered the transition month of May 2013 over the continental US domain. By good fortune, the period captured one of the most active severe weather months in recent history, promising an interesting dataset for future in-depth studies.

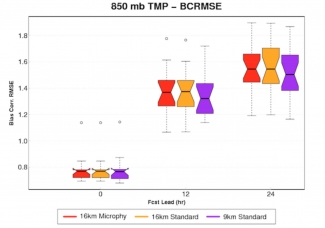

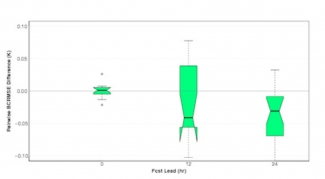

Verification for this set of runs was performed with the DTC’s Model Evaluation Tools (MET), using both single-value and probabilistic measures aggregated over the entire month of study. Some of the relevant results are illustrated in the accompanying figures, each of which shows results for the arithmetic mean of the corresponding ensemble system. The first figure shows box plots of bias-corrected root mean square error (BCRMSE) for 850 mb temperature at the analysis time and two lead times for the operational 16 km SREF (yellow), a parallel configuration with a different combination of microphysics (red), and the experimental 9 km configuration (purple). In this preliminary run, finer resolution appears to improve SREF forecast performance more than changes in microphysics do. The second figure supports this: it shows pairwise differences between the 16 km and 9 km SREF forecasts at the 24 hr lead time, and that difference is statistically significant, albeit for a limited data sample. Additional detailed analyses of an expanded set of these data are under way.
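The verification steps described above can be illustrated with a minimal sketch. It assumes the usual definition of bias-corrected RMSE, BCRMSE = sqrt(MSE − ME²), i.e. the RMSE with the mean error (bias) removed, computed for the ensemble mean. For comparing two configurations it uses a paired percentile bootstrap on per-case score differences. The source does not say which significance test was used, so the bootstrap is an assumed stand-in, and none of this is MET's own code. The function names `ensemble_mean`, `bcrmse`, and `paired_bootstrap_ci` are hypothetical.

```python
import math
import random
from statistics import fmean


def ensemble_mean(members):
    """Arithmetic mean across ensemble members at each point.

    `members` is a list of equal-length lists (one list per member).
    Hypothetical helper, not part of MET.
    """
    n = len(members)
    return [sum(values) / n for values in zip(*members)]


def bcrmse(forecast, observed):
    """Bias-corrected RMSE, assuming BCRMSE = sqrt(MSE - ME**2).

    Removing ME**2 strips out the systematic (mean) error, so a forecast
    with only a constant bias scores zero.
    """
    errors = [f - o for f, o in zip(forecast, observed)]
    me = fmean(errors)                    # mean error (bias)
    mse = fmean(e * e for e in errors)    # mean squared error
    # Guard against tiny negative values caused by floating-point rounding.
    return math.sqrt(max(mse - me * me, 0.0))


def paired_bootstrap_ci(scores_a, scores_b, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the mean of paired differences a - b.

    Cases are resampled with replacement as pairs, so day-to-day weather
    variability shared by both configurations cancels in the difference.
    Returns (mean difference, lower bound, upper bound).
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    rng = random.Random(seed)             # fixed seed for reproducibility
    n = len(diffs)
    boot_means = sorted(fmean(rng.choices(diffs, k=n)) for _ in range(n_boot))
    lo_idx = int(alpha / 2 * n_boot)
    hi_idx = min(n_boot - 1, int((1 - alpha / 2) * n_boot))
    return fmean(diffs), boot_means[lo_idx], boot_means[hi_idx]
```

For example, given per-case BCRMSE values for the 16 km and 9 km runs at one lead time, a confidence interval from `paired_bootstrap_ci` that excludes zero would indicate a difference that is significant at the chosen `alpha`. Pairing the cases by valid time, rather than comparing two independent samples, is what makes a modest difference detectable with only one month of data.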