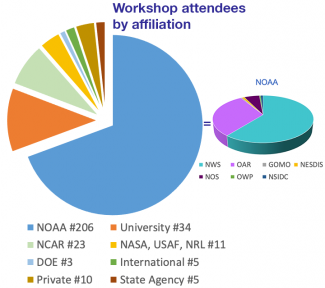

The Developmental Testbed Center (DTC), in collaboration with the National Oceanic and Atmospheric Administration (NOAA) and the Unified Forecast System's Verification and Validation Cross-Cutting Team (UFS-V&V), hosted a three-day workshop to identify key verification and validation metrics for UFS applications. The workshop was held remotely 22-24 February 2021. Registration for the event totaled 315 participants from across the research and operational community.

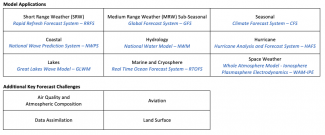

The goal of this workshop was to identify and prioritize key metrics to use during the evaluation of UFS research products, guiding their transition from research to operations (R2O). Because all UFS evaluation decisions affect a diverse set of users, workshop organizers invited members of the government, academic, and private sectors to participate. This outreach resulted in the participation of scientists not only from NOAA, but also from the National Center for Atmospheric Research (NCAR), National Aeronautics and Space Administration (NASA), US National Ice Center (USNIC), seventeen universities, seven commercial entities, and seven international forecast offices and universities. Ten NOAA research labs were represented, as well as all of the National Weather Service’s National Centers for Environmental Prediction (NCEP), five Regional Headquarters, and ten Weather Forecast Offices (WFOs). Rounding out the government organizations were Department of Defense (DOD) and Department of Energy (DOE) entities, along with several state government Departments of Environmental Protection.

In preparation for the workshop, a series of three pre-workshop surveys was distributed to interested parties between October 2020 and February 2021. The surveys included questions about fields and levels, temporal and spatial metadata, sources of truth (i.e., observations, analyses, reference models, climatologies), and preferred statistics. The results were then used to prepare the list of candidate metrics, including their metadata, for curation by the workshop breakout groups.

Keynote speakers, including Drs. Ricky Rood, Dorothy Koch, and Hendrik Tolman, along with the Workshop Co-Chairs, kicked off the workshop. The R2O process, in which metrics are used to advance innovations toward progressively higher readiness levels, proceeds through stages and gates, described here, as the tools are assessed and vetted for operations. Presentations included 1) a discussion of R2O stages and gates; 2) the results of the pre-workshop surveys; and 3) how the workshop would proceed. Instant online surveys were used throughout the workshop to gather quantitative input from the participants. During the first two days, the breakout groups refined the results of the pre-workshop surveys. At the end of the second day, the participants were invited to fill out 13 online surveys (listed below) to prioritize the metrics for the full set of R2O stages and gates. On the last day, breakout groups discussed numerous ways to assign metrics to the R2O gates.

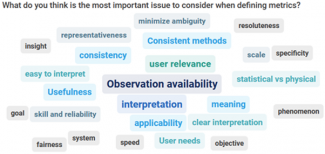

Three prominent themes emerged from the workshop. The first was that metrics used in the near term need to be tied to observation availability and should evolve as new observations become available. The second was that metrics should be relevant to the user and easy to interpret. The third was that the ranking polls tended to place the sensible weather and upper-air fields as top priorities, leaving fields from the components of a fully coupled system (i.e., marine, cryosphere, land) nearer the bottom. Given this outcome, a tiger team of experts will be convened to help the UFS-V&V team complete the consolidation and synthesis of the results to ensure component fields are also included across all gates.

These summary activities are wrapping up. The UFS-V&V group is working with the chairs of other UFS working groups and application teams to finalize the metrics. The organizers intend to schedule a wrap-up webinar in mid-June to update the community on the final metrics. For more information and updates on the synthesis work, please visit the DTC UFS Evaluation Metrics Workshop website. Additionally, the Verification, Post-Processing and Product Generation Branch at EMC has begun developing evaluation plans for the final R2O gate, the transition to operations. Look for updates at the EMC Users Verification website.

The Workshop Organizing Committee included Tara Jensen (NCAR and DTC), Jason Levit (NOAA/EMC), Geoff Manikin (NOAA/EMC), Jason Otkin (UWisc CIMSS), Mike Baldwin (Purdue University), Dave Turner (NOAA/GSL), Deepthi Achuthavarier (NOAA/OSTI), Jack Settelmaier (NOAA/SRHQ), Burkely Gallo (NOAA/SPC), Linden Wolf (NOAA/OSTI), Sarah Lu (SUNY-Albany), Cristiana Stan (GMU), Yan Xue (NOAA/OSTI), and Matt Janiga (NRL).