Robust testing and evaluation of research innovations is a critical component of the Research-to-Operations (R2O) process and is performed for NCEP by the Developmental Testbed Center (DTC).

At the foundation of the DTC testing and evaluation (T&E) system is the Model Evaluation Tools (MET), which the DTC also supports for the community. The DTC verification team has been working closely with other DTC teams, as well as with the research and operational communities (e.g., the NOAA HIWPP program and NCEP/EMC, respectively), to enhance MET so that it better supports both internal T&E activities and testing performed at NOAA Centers and Testbeds.

METv5.1 was released to the community in October 2015. It adds many enhancements to MET's already extensive capabilities. The additions fall into three groups: new tools, finer control over pre-existing capabilities, and new statistics. It may be fair to say there is something new for everyone.

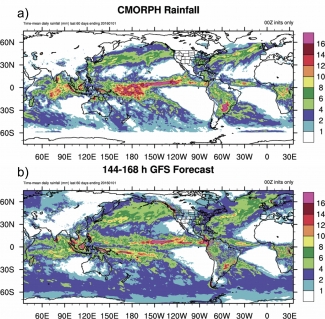

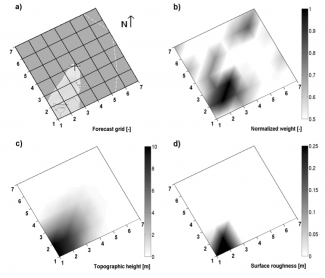

New tools: Sometimes user needs that surface during development drive the addition of new tools. This was the case for the METv5.1 release. The idea of automated regridding within the tools first came up during a discussion with the Science Advisory Board. It was embraced, and implemented, as a way to make the Mesoscale Model Evaluation Testbed (MMET) more accessible to researchers. The MET team took it one step further: it not only added the capability to all MET tools that ingest gridded data but also developed a stand-alone tool (regrid_data_plane) to facilitate regridding, especially of NetCDF files.

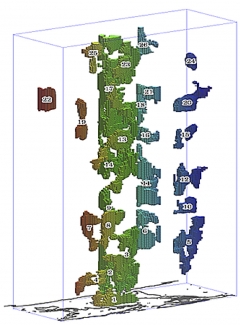

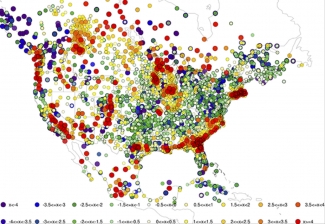

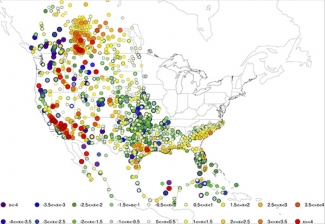

For those who use, or would like to use, the Method for Object-based Diagnostic Evaluation (MODE) tool in MET, a new tool that tracks objects through time has been developed: MODE-Time Domain (MTD). In the past, many MET users have performed separate MODE runs at a series of forecast valid times and analyzed the resulting object attributes, matches, and merges as functions of time in an effort to incorporate temporal information into assessments of forecast quality. MTD was developed to address this need more systematically. Most of the information obtained from such multiple coordinated MODE runs can be obtained more simply from MTD. As in MODE, MTD convolves (smooths) the field and then applies a threshold to define objects, which in MTD extend through both space and time. It also computes single 3D object attributes (e.g., centroid, volume, and velocity) and paired 3D object attributes (e.g., centroid distance, volume ratio, and speed difference).
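To make the convolve-then-threshold idea concrete, here is a minimal Python sketch of how space-time objects and a few single-object attributes might be derived from a gridded field indexed as `field[t][y][x]`. It is an illustration only, not MET's implementation: the box-average filter, the 6-connected flood fill, the definition of velocity as the displacement of the per-time-slice centroid, and the names `smooth`, `find_objects`, and `attributes` are all assumptions made for this example.

```python
from collections import deque
from itertools import product


def smooth(field, radius=1):
    """Box-average each cell over its space-time neighborhood (assumed filter shape).

    The neighborhood is clipped at the grid edges; radius=0 leaves the field unchanged.
    """
    nt, ny, nx = len(field), len(field[0]), len(field[0][0])
    out = [[[0.0] * nx for _ in range(ny)] for _ in range(nt)]
    for t, y, x in product(range(nt), range(ny), range(nx)):
        vals = [
            field[tt][yy][xx]
            for tt in range(max(0, t - radius), min(nt, t + radius + 1))
            for yy in range(max(0, y - radius), min(ny, y + radius + 1))
            for xx in range(max(0, x - radius), min(nx, x + radius + 1))
        ]
        out[t][y][x] = sum(vals) / len(vals)
    return out


def find_objects(field, thresh, radius=1):
    """Smooth, threshold, and group connected cells into space-time objects.

    Returns a list of objects; each object is a list of (t, y, x) cells.
    Cells are connected if they share a face in t, y, or x (6-connectivity).
    """
    sm = smooth(field, radius)
    nt, ny, nx = len(sm), len(sm[0]), len(sm[0][0])
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    seen, objects = set(), []
    for start in product(range(nt), range(ny), range(nx)):
        if start in seen or sm[start[0]][start[1]][start[2]] < thresh:
            continue
        seen.add(start)
        queue, cells = deque([start]), []
        while queue:  # breadth-first flood fill from the seed cell
            t, y, x = queue.popleft()
            cells.append((t, y, x))
            for dt, dy, dx in steps:
                n = (t + dt, y + dy, x + dx)
                inside = 0 <= n[0] < nt and 0 <= n[1] < ny and 0 <= n[2] < nx
                if inside and n not in seen and sm[n[0]][n[1]][n[2]] >= thresh:
                    seen.add(n)
                    queue.append(n)
        objects.append(cells)
    return objects


def attributes(cells):
    """Compute volume, 3D centroid (t, y, x), and mean velocity (dy, dx) per time step."""
    volume = len(cells)
    centroid = tuple(sum(c[i] for c in cells) / volume for i in range(3))

    def slice_centroid(t):
        pts = [(y, x) for tt, y, x in cells if tt == t]
        return sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts)

    times = sorted({c[0] for c in cells})
    velocity = (0.0, 0.0)
    if len(times) > 1:
        (y0, x0), (y1, x1) = slice_centroid(times[0]), slice_centroid(times[-1])
        span = times[-1] - times[0]
        velocity = ((y1 - y0) / span, (x1 - x0) / span)
    return {"volume": volume, "centroid": centroid, "velocity": velocity}


if __name__ == "__main__":
    # Synthetic field: a 2x2 blob that moves one grid cell in x per time step.
    demo = [[[0.0] * 8 for _ in range(5)] for _ in range(3)]
    for t in range(3):
        for y in (1, 2):
            for x in (1 + t, 2 + t):
                demo[t][y][x] = 10.0
    for obj in find_objects(demo, thresh=5.0, radius=0):
        print(attributes(obj))
```

Because each object spans time as well as space, a single pass yields quantities such as velocity that would otherwise have to be pieced together from separate per-time runs. Paired attributes (e.g., centroid distance or volume ratio) could be computed in the same spirit by comparing the attribute dictionaries of a forecast object and an observed object.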