This is a hard question to answer, largely because “WRF” means something different to each user, who runs a specific model configuration for a particular application. With the numerous options available in WRF, it is difficult to test all possible combinations, and the resulting improvements and/or degradations of the system may differ for each configuration. Prior to a release, the WRF code is run through a large number of regression tests to ensure it runs successfully with a wide variety of options; however, the skill of the resulting forecasts is not extensively tested. In addition, code enhancements or additions that are meant to improve one aspect of the forecast may have an inadvertent negative impact on another.

In an effort to provide unbiased information regarding the progression of WRF code through time, the DTC has tested one particular configuration of the Advanced Research WRF (ARW) dynamic core for several releases of WRF (versions 3.4, 3.4.1, 3.5, 3.5.1, and 3.6). For each test, the end-to-end modeling system components were the same: WPS, WRF, the Unified Post Processor (UPP), and the Model Evaluation Tools (MET). Testing was conducted over two three-month periods (a warm season during July-September 2011 and a cool season during January-March 2012), capturing model performance over a variety of weather regimes. To isolate the impacts of the WRF model code itself, 48-h cold-start forecasts were initialized every 36 h over a 15-km North American domain.
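The initialization schedule described above can be sketched in a few lines of Python. This is only an illustration: the exact first and last initialization dates, and the choice of 00 UTC for the first cycle, are assumptions rather than details taken from the DTC test plan, and the function name `init_times` is hypothetical.

```python
from datetime import datetime, timedelta

FORECAST_LENGTH_H = 48   # forecast length stated in the text
INIT_INTERVAL_H = 36     # spacing between cold-start initializations


def init_times(start, end, step_hours=INIT_INTERVAL_H):
    """Return initialization times from start up to, but not including, end."""
    times = []
    current = start
    while current < end:
        times.append(current)
        current += timedelta(hours=step_hours)
    return times


# Assumed season boundaries: first cycle at 00 UTC on the first day of each period.
warm_inits = init_times(datetime(2011, 7, 1, 0), datetime(2011, 10, 1, 0))
cool_inits = init_times(datetime(2012, 1, 1, 0), datetime(2012, 4, 1, 0))

for init in warm_inits[:4]:
    last_valid = init + timedelta(hours=FORECAST_LENGTH_H)
    print(f"init {init:%Y-%m-%d %H} UTC -> last valid time {last_valid:%Y-%m-%d %H} UTC")

# Distinct initialization hours that occur under these assumptions.
print(sorted({t.hour for t in warm_inits}))
```

Under these assumptions, a 36-h spacing alternates between 00 UTC and 12 UTC cycles, so both initialization hours are sampled within each season.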

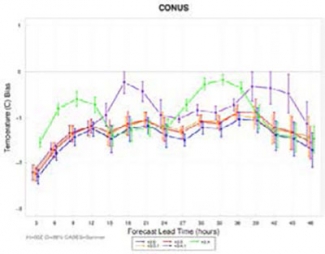

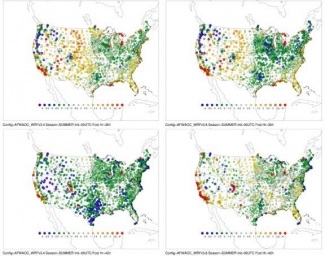

The particular physics suite used in these tests is the Air Force Weather Agency (AFWA) operational configuration, which includes WSM5 (microphysics), Dudhia/RRTM (shortwave/longwave radiation), M-O (surface layer), Noah (land surface model), YSU (planetary boundary layer), and KF (cumulus). To highlight the differences in forecast performance with model progression, objective model verification statistics are produced for surface and upper-air temperature, dew point temperature, and wind speed for the full CONUS domain and 14 sub-regions across the U.S.

Examples of the results (in this case, 2 m temperature bias) are shown in the figures. A consistent cold bias is seen for most lead times during the warm season for all versions (figure on page 1). While there was a significant degradation in performance during the overnight hours with versions 3.4.1 and newer, a significant improvement is noted for the most recent version (v3.6). Examining the distribution of 2 m temperature bias spatially by observation site (figure below), it is clear that for the 30-hour forecast lead time (valid at 06 UTC), v3.6 is noticeably colder over the eastern CONUS. However, for the 42-hour forecast lead time (valid at 18 UTC), v3.4 is significantly colder across much of the CONUS. For the full suite of verification results, please visit the WRF Version Testing website at www.dtcenter.org/eval/meso_mod/version_tne
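For readers less familiar with the bias statistic discussed above, the following minimal sketch shows how a mean bias can be computed from matched forecast-observation pairs. It is not the MET implementation; the function name `mean_bias` and the sample temperatures are hypothetical and chosen only to illustrate the sign convention (forecast minus observation, so a negative value indicates a cold bias).

```python
def mean_bias(forecasts, observations):
    """Mean of (forecast - observation) over pairs where both values are present."""
    pairs = [
        (f, o)
        for f, o in zip(forecasts, observations)
        if f is not None and o is not None
    ]
    if not pairs:
        raise ValueError("no valid forecast-observation pairs")
    return sum(f - o for f, o in pairs) / len(pairs)


# Hypothetical 2 m temperatures (K) at four stations for one lead time.
# The last station has a missing observation and is skipped.
fcst = [290.0, 291.5, 289.0, 288.0]
obs = [291.0, 292.0, 290.5, None]

bias = mean_bias(fcst, obs)
print(f"2 m temperature bias: {bias:+.2f} K")
```

Computing the same statistic separately for each model version, lead time, and region would give the kind of comparison shown in the figures.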