Given its mission to facilitate the research-to-operations transition in numerical weather prediction (NWP), the DTC has a mandate to stay connected with both the research and operational NWP communities.

As a means to that end, the DTC Science Advisory Board (SAB) was established to provide (i) advice on strategic directions, (ii) recommendations for new code or new NWP innovations for testing and evaluation, and (iii) reviews of DTC Visitor Program proposals and recommendations for selection.

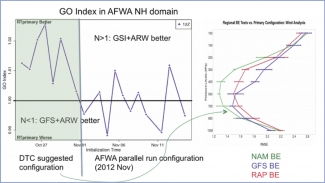

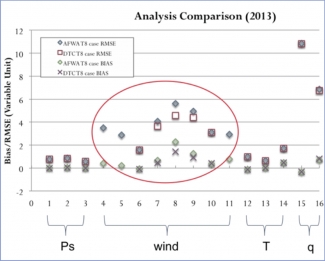

The third meeting of the SAB (the first with the new members announced in an earlier DTC Newsletter) was held 25-27 September 2013 in Boulder. To stimulate communication between the research and operational NWP communities, the DTC invited Geoff DiMego and Vijay Tallapragada to present future plans for mesoscale and hurricane modeling at the National Centers for Environmental Prediction’s (NCEP) Environmental Modeling Center (EMC), and Mike Horner to give an Air Force Weather Agency (AFWA) modeling update. Both presentations were informative and stimulated considerable discussion.

John Murphy, Chair of the DTC Executive Committee (EC), presented the National Weather Service’s (NWS) view on research to operations and the important role of the DTC. Col. John Egentowich, also a member of the DTC EC, spoke positively of the DTC’s contributions to Air Force weather prediction modeling. During the summary and discussion periods, SAB members provided many valuable recommendations for the DTC to consider in planning its new Annual Operating Plan (AOP) for 2014. These recommendations will be detailed in a DTC SAB Meeting Summary; several of particular interest are described here.

The SAB recommended that the DTC and EMC develop a community model-testing infrastructure at NCEP/EMC. The goal of such an infrastructure would be to give visiting scientists easy access to EMC operational models, enabling them to collaborate with EMC scientists on model experiments using alternative approaches. Such an infrastructure could help accelerate the research-to-operations (R2O) transition in NWP.

The SAB voiced its belief that operational centers will have significantly more computing resources in the near future, putting nationwide high-resolution mesoscale ensemble forecasting within reach. Given that possibility, the SAB recommended that the DTC help facilitate the transition to cloud-permitting-scale ensemble forecasting with multiple physics.

The current members of the DTC Science Advisory Board are listed at http://www.dtcenter.org under governance.