Introduction

IntroductionSimplifying end-to-end numerical modeling using software containers

Software systems require substantial set-up to get all the necessary code, including external libraries, compiled on a specific platform. Recently, the concept of containers has been gaining popularity because they allow for software systems to be bundled (including operating system, libraries, code, and executables) and provided directly to users, eliminating possible frustrations with up-front system setup.

Using containers allows for efficient, lightweight, secure, and self-contained systems. Everything required to make a piece of software run is packaged into isolated containers, ready for development, shipment, and deployment. Using containers guarantees that software will always run the same, regardless of where it is deployed.

Ultimately, containers substantially reduce the spin-up time of setting up and compiling software systems and promote greater efficiency in getting to the end goal of producing model output and statistical analyses.

Advantages to using containers for NWP

- Reduces spin-up time to build necessary code components

- Highly portable

- Use in cloud computing

- Easily sharable with other collaborators

- Easy to replicate procedures and results

Who can benefit from using NWP containers?

- Graduate and undergraduate students

- University faculty

- Researchers

- Tutorial participants

Tutorial format

Throughout this tutorial, code blocks in BOLD white text with a black background should be copied from your browser and pasted on the command line.

echo "Let's Get Started"

Platform Options

Platform OptionsContainer software

While many software containerization platforms exist, two are supported for running the end-to-end containerized NWP system: Docker or Singularity.

Docker |

Singularity |

|---|---|

|

Docker was the first container platform explored by this project and, thus, has been tested robustly across local machines and on the cloud (AWS). Using Docker, the end-to-end containerized NWP system described here is fully functional on a local machine or on AWS. A few disadvantages to the Docker software are that root permissions are required to install and update, and it requires additional tools to run across multiple nodes, which are not needed nor supported for this particular application.

|

Singularity is also an option for running all but the final component (METviewer) of the end-to-end containerized NWP system by converting Docker images on Dockerhub to Singularity image files. Similar to Docker, installing and updating Singularity software requires root permissions. A few advantages to Singularity are that it was designed for High Performance Computing (HPC) platforms and can more efficiently run on multiple nodes on certain platforms. The functionality to run METviewer using Singularity is still a work in progress, so if there is a desire to create verification plots from the MET output, it will be necessary to use Docker for this step at this time.

|

Compute platform

There are two recommended methods for running this tutorial; follow the instructions that you find most useful below.

Running on a local machine |

Running on a cloud computing platform |

|---|---|

|

If you have access to a Linux/Unix based machine–whether a laptop, desktop, or compute cluster–it is likely that you will be able to run this entire tutorial on that machine. Click here for instructions for "Running On A Local Machine" |

This tutorial can also be run on the Amazon Web Services (AWS) cloud compute platform. We provide a pre-built image which should have all the software necessary for running the tutorial. Cloud computing costs will be incurred. Click here for instructions for "Running in the Cloud" |

Running on a local machine

Running on a local machineRunning tutorial on a local machine

To run this tutorial on a local machine, you will need to have access to a number of different utilities/software packages:

- A terminal or terminal emulator for running standard Linux/Unix commands (ls, cd, mkdir, etc.)

- untar and gzip

- A text editor such as vim, emacs, or nano

- Docker (see below)

- git (optional; see below)

More detailed instructions for certain requirements can be found below:

Installing container software

In order to run the NWP containers described in this tutorial, a containerization software (Docker or Singularity) will need to be available on your machine. To download and install the desired version software compatible with your system, please visit

- Docker: https://www.docker.com/get-started

- Singularity: https://sylabs.io/guides/3.6/user-guide/quick_start.html

In order to install Docker or Singularity on your machine, you will be required to have root access privileges. Once the software has been installed, Singularity can be run without root privileges, with a few exceptions.

Docker and Singularity are updated on a regular basis; we recommended applying all available updates.

Git version control software

The scripts and Dockerfiles used during this tutorial live in a git repository. You can download a project .tar file from the Github website, or, if you wish, you can clone the repository using git software. Specific instructions for doing this will be found on subsequent pages.

If you would like to use git and it is not installed git on your machine, please visit https://git-scm.com to learn more. Prior to using git for the first time, you will need to configure your git environment. For example:

git config --global user.email user@email.com

Running on the cloud

Running on the cloudAmazon Web Services (AWS)

This tutorial has been designed for use on Amazon Web Services (AWS) cloud computing. Other cloud computing platforms may be used, but the instructions below will have to be modified accordingly.

In order to complete this tutorial on AWS, one must launch an EC2 instance that is configured with the proper environment, software, and library dependencies. In addition to a number of library dependencies, the primary software and utilities include:

- Docker (required)

- Singularity (required)

- wgrib2

- ncview

- ImageMagick

Building the proper AWS environment can be achieved in two ways:

| Launch an EC2 instance from the DTC maintained AMI | Build & configure the EC2 instance from scratch |

|---|---|

| An AMI (Amazon Machine Image) is simply a saved copy of the EC2 environment that can be used to launch a new EC2 Instance with all of these software and tools pre-installed, allowing the user to quickly launch the proper EC2 Instance and begin the tutorial. Read more about AMIs here. The DTC maintains and provides a public AMI called "dtc-utility-base-env_v4.1.0" that contains all of these required tools. | Steps are provided to install all of the required software and tools from scratch. The user may then create an AMI if they choose, and may additionally choose to include tutorial specific content (e.g. data, container images, scripts) in their AMI as well, depending on user needs. |

Follow the instructions below for the preferred choice of acquiring the AWS environment:

Below you will find steps to create an EC2 Instance from the DTC AMI called "dtc-utility-base-env_v4.1.0", and login to your new instance from a terminal window.

See AWS documentation for more information

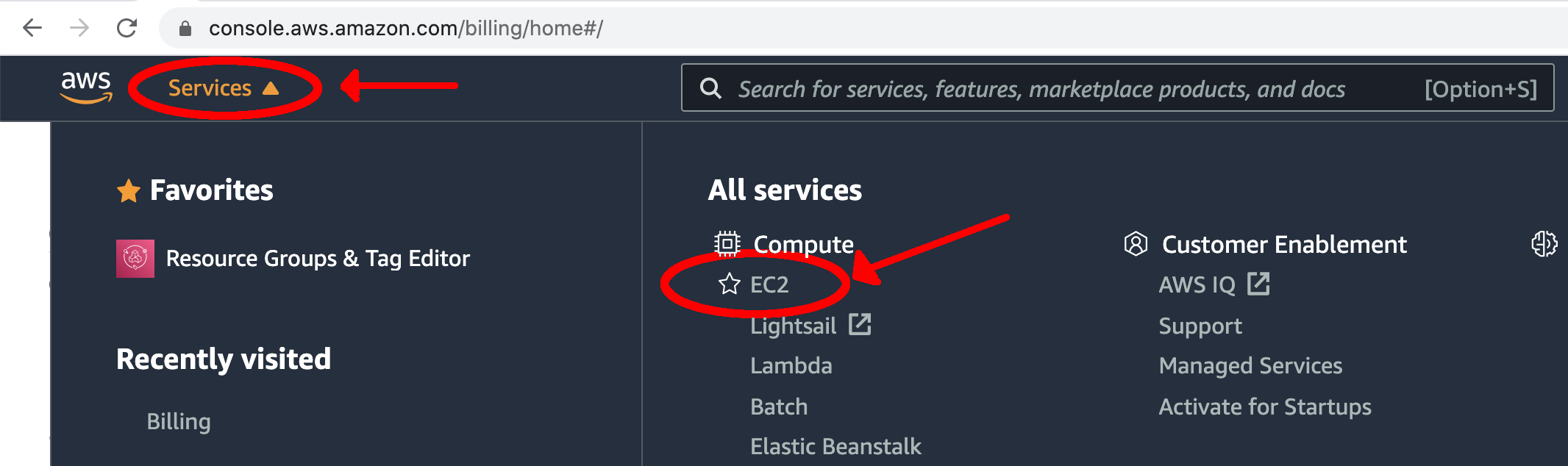

- Navigate to Amazon’s Elastic Compute Cloud (EC2) Management Console by clicking on the "Services" tab at the top. Under the "Compute" section, select "EC2".

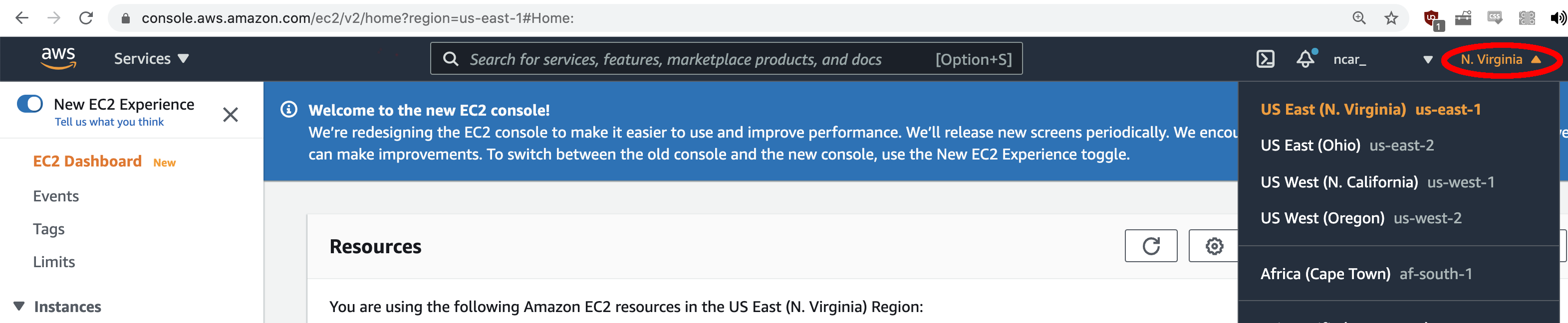

- Determine what region you are running under by looking in the top right corner of the screen. To use the DTC AMI, you need to use the "N. Virginia" region. If this is not what you see, use the drop-down arrow and select the "US East (N. Virginia)" region.

For this tutorial, you can use an environment that has already been established for you: an Amazon Machine Image (AMI).

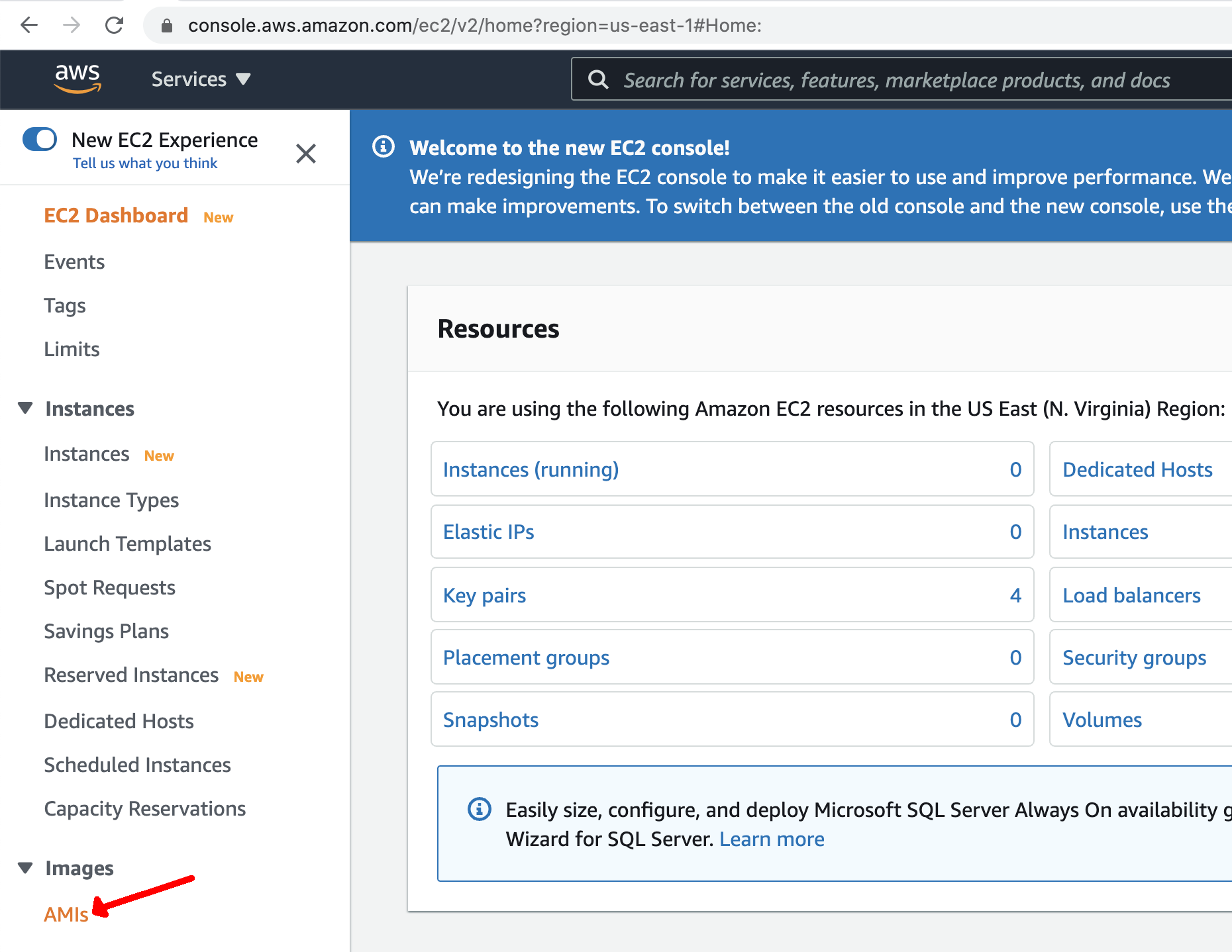

- Click the "AMIs" link on the left side "EC2 Dashboard"

You will initially not see any AMIs listed, since you do not own any images.

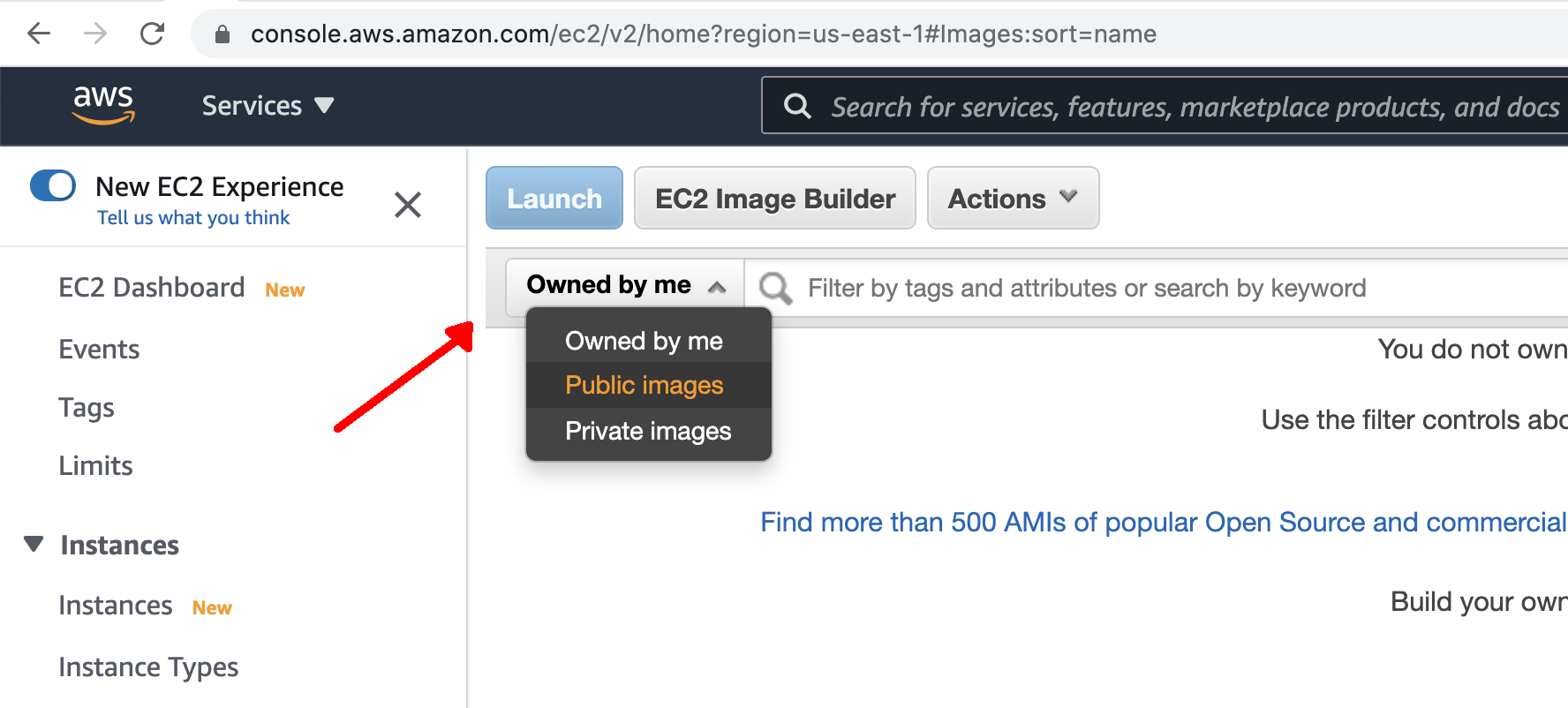

- Select "Public Images" from the dropdown as shown.

- In the search bar, enter in the name of the DTC AMI: "dtc-utility-base-env_v4.1.0" and press enter.

- Select the resulting AMI from the list window.

- Once selected, click the blue "Launch" button at the top of the page

After launching the AMI, the next step is to configure the instance prior to using it.

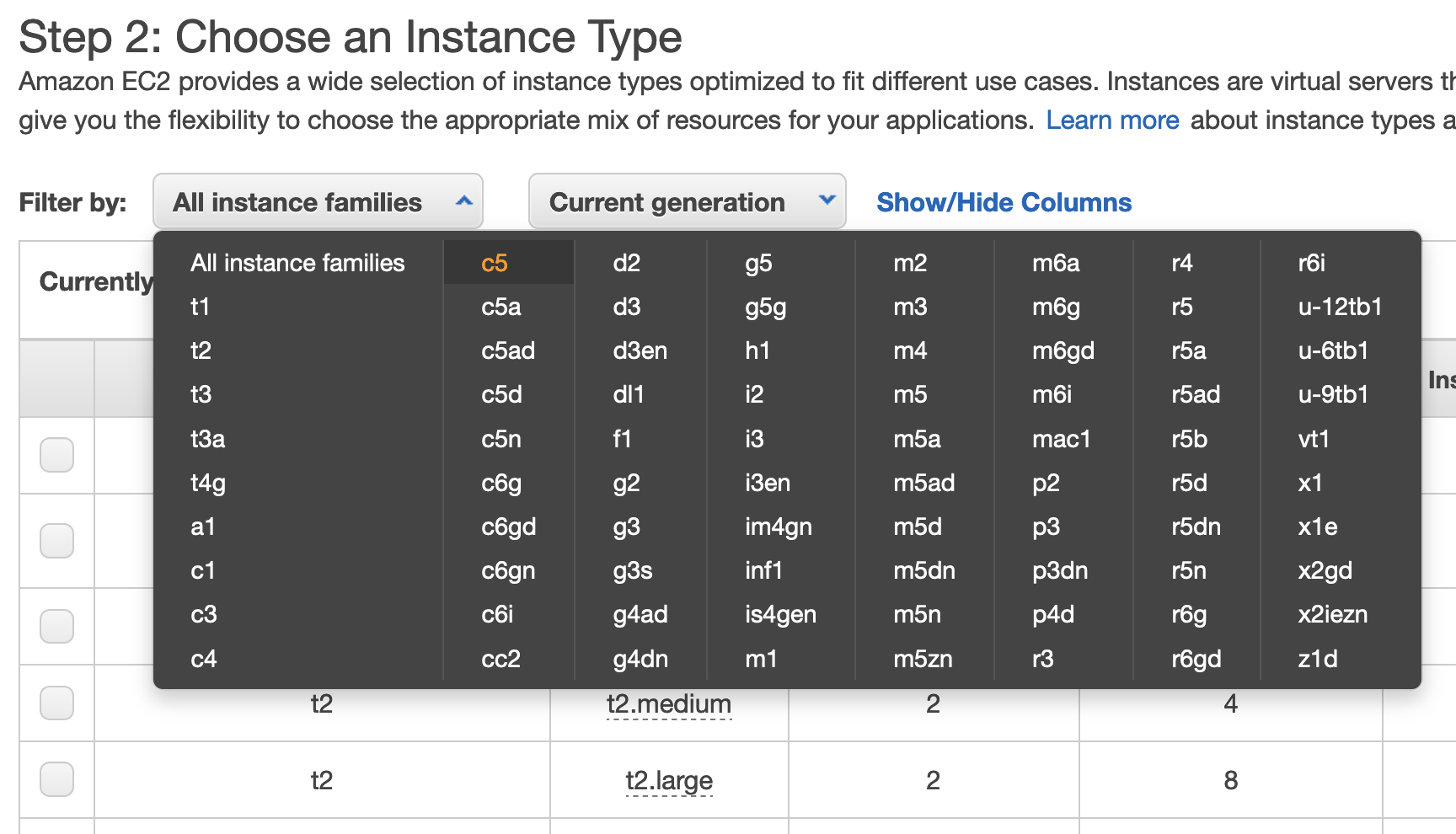

- From the "Filter by" dropdown list, select the "c5" option (a "compute-optimized" instance; see this page for more info on AWS instance types) to see a smaller list of possible selections.

- Scroll down and click the box next to the "c5.4xlarge" instance type and click the "Next: Configure Instance Details" button on the bottom right of the page.

- There is no need to further configure the instance details on the next page so simply click the button the bottom right of the page for "Next: Add Storage"

- If the storage size has not already been increased, change the storage from the default of 8 to 60 Gb and click the "Next: Add Tags" button on the bottom right of the page.

- Add a personal tag to identify what instance is yours.

- Select the blue link in the middle of the page that says "click to add a Name tag". This is a key-value pair where key=Name and value=your_personal_nametag

- On the next page the key will automatically be set to "Name" (leave this as is) and you simply assign a name of your choice to the Value in the second box. The value can be set to anything you will recognize and is unlikely to be used by anyone else. Note, it is not recommended to use any spaces in your name.

- NOTE: The key should literally be called "Name"; do not change the text in the left box

- Click the "Next: Configure Security Group" button on the bottom right of the page.

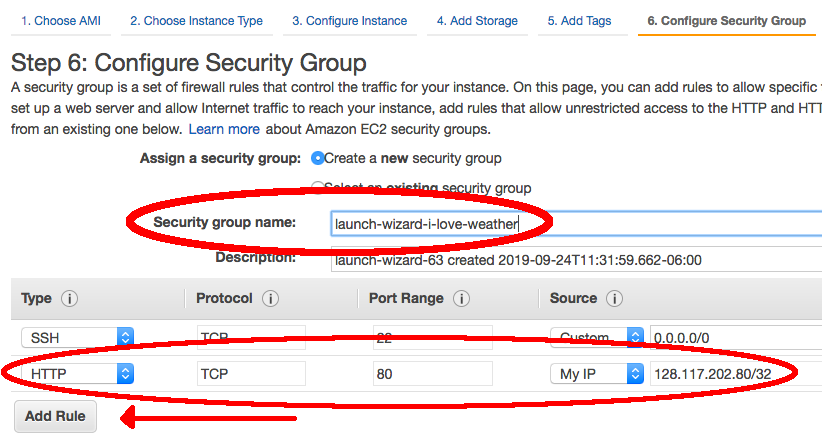

- Amazon requires us to set a unique security group name for each AWS instance. We will also need to set some rules in order to properly set up access to visualize verification output with the METviewer GUI. These steps will both be done on the "Configure Security Group" page.

- The field "Security group name" will be filled in with an automated value. If other tutorial users are attempting to launch an instance with this same automated value, it will fail, so you should replace the given value with any unique group name you desire.

- Select the button on the left hand side that says "Add Rule". Under the drop down menu, select "HTTP". The port range for HTTP is 80.

- Note: Rules with source of 0.0.0.0/0 allow all IP addresses to access your instance. You can modify setting security group rules to allow access from known IP addresses only by clicking the "Custom" drop down menu and selecting "My IP".

- Click the blue "Review and Launch" button at the bottom of the page to continue.

- You can review the selected configuration settings and select the blue "Launch" button in the bottom right corner.

- It will be necessary to create an ssh key pair to allow for SSH access into your instance.

- In the first drop down box, choose "create a new key pair".

- In the second box for the key pair name, choose any name you’d like but make sure it does not include any spaces. (e.g., your_name-aws-ssh)

- Click the "Download Key Pair" button and the key pair will be placed in your local Downloads directory, named: name_you_chose.pem (Note, Some systems will add .txt to the end of the *.pem file name so the file may actually be named name_you_chose.pem.txt)

Windows users: please review Windows information sheet

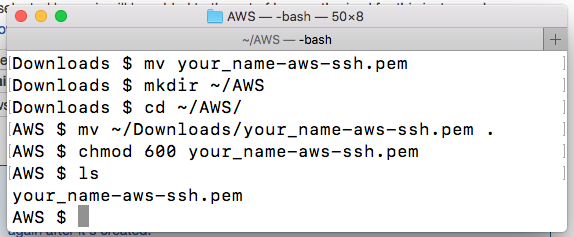

- To access your instance running in the cloud you first need to set up your ssh access from your remote system

- Open a terminal window and create a new directory to access your AWS cloud instance

mkdir ~/AWS - Go into the new directory and then move your *.pem file into it and change the permissions of the file

cd ~/AWS

mv ~/Downloads/name_you_chose.pem .

chmod 600 name_you_chose.pem

- Open a terminal window and create a new directory to access your AWS cloud instance

- Finally, back in your browser window:

- click "Launch Instances"

- click the blue "View Instances" button on the bottom right corner of the next page.

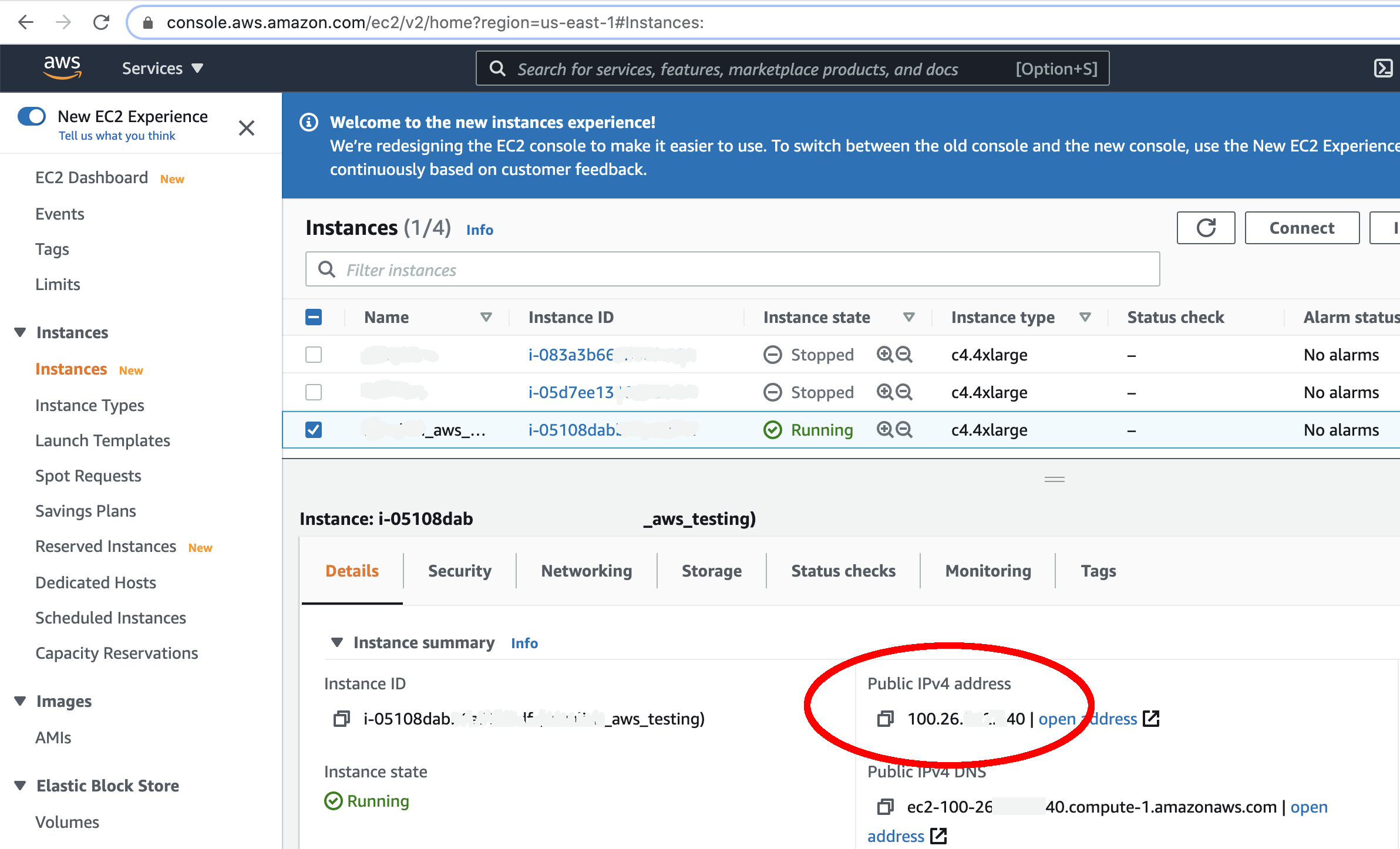

- Look for the instance with the name you gave it. As soon as you see a green dot and "Running" under the "Instance State" column, your instance is ready to use!

- You will need to obtain your IPV4 Public IP from the AWS "Active Instances" web page. This can be found from your AWS instances page as shown below:

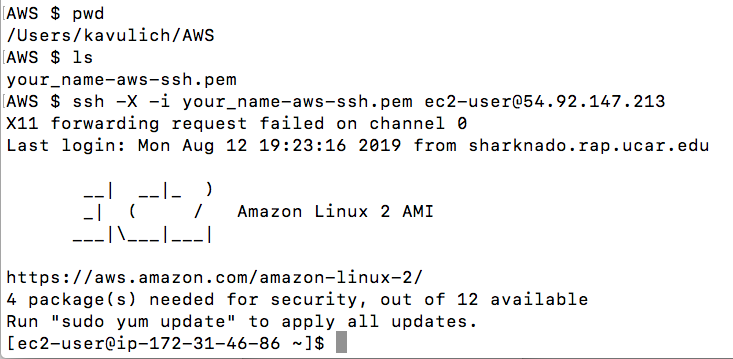

- From your newly created AWS directory, ssh into your instance. (Windows users: please review Windows information sheet)

ssh -X -i name_you_chose.pem ec2-user@IPV4_public_IPOR on a mac, change the “X” to a “Y”:

ssh -Y -i name_you_chose.pem ec2-user@IPV4_public_IP - The IPV4 public IP is something that is unique to your particular instance. If you stop/re-start your instance, this public IP will be different each time.

- Feel free to open multiple terminals and log into your instance (using the same credentials) several times if you so desire.

- From this point on, you will mostly be working in your terminal window; however, you may occasionally need to look back at the AWS web page, so be sure to keep the AWS console open in your browser.

- Once you are in your instance from the terminal window you should see the following set-up:

Below you will find the steps necessary to create an EC2 instance and install the libraries and software required to complete this tutorial, along with a number of helpful utilities to inspect output files created as part of this tutorial.

After completing these steps, you can create your own AMI for future use if you choose. You may also choose to complete the tutorial, or portions of the tutorial, and then create an AMI. For example, suppose you want to include the container images, tutorial repository contents, and input data in your AMI for future use. Simply complete the above steps to create the base environment and software, then complete the tutorial menu items: Repository, Downloading Input Data, and NWP Containers. Then create the AMI. Note that this is a completely customizable procedure and may benefit from some experience/knowledge with AWS AMIs and familiarity with this tutorial first.

Information on creating an AMI of your own can be found in the AWS documentation.

- Select: Amazon Linux 2 AMI (HVM), SSD Volume Type (64 bit/x86)

- Select: C5.4xlarge

- Select: Change storage to 60 GB

- Configure Security Group: Add rule for HTTP (Set Source to MyIP)

(use your own pem file and public IP address from the instance you launched in step 1):

mkdir /home/ec2-user/utilities

Install packages

sudo yum install -y libX11-devel.x86_64 Xaw3d-devel.x86_64 libpng-devel.x86_64 libcurl-devel.x86_64 expat-devel.x86_64 ksh.x86_64

Compile HDF5 from source

wget https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.10/hdf5-1.10.5/src/hdf5-1.10.5.tar.gz

tar -xvzf hdf5-1.10.5.tar.gz; rm hdf5-1.10.5.tar.gz

cd hdf5-1.10.5

./configure --with-zlib=/usr/lib64 --prefix=/home/ec2-user/utilities

make install

Compile netCDF from source

wget ftp://ftp.unidata.ucar.edu/pub/netcdf/netcdf-c-4.7.1.tar.gz

tar -xvzf netcdf-c-4.7.1.tar.gz; rm netcdf-c-4.7.1.tar.gz

cd netcdf-c-4.7.1

./configure --prefix=/home/ec2-user/utilities CPPFLAGS=-I/home/ec2-user/utilities/include LDFLAGS=-L/home/ec2-user/utilities/lib

make install

Compile UDUNITS from source

wget ftp://ftp.unidata.ucar.edu/pub/udunits/udunits-2.2.28.tar.gz

tar -xvzf udunits-2.2.28.tar.gz; rm udunits-2.2.28.tar.gz

cd udunits-2.2.28

./configure -prefix=/home/ec2-user/utilities

make install

Compile ncview from source

wget ftp://cirrus.ucsd.edu/pub/ncview/ncview-2.1.7.tar.gz

tar -xvzf ncview-2.1.7.tar.gz; rm ncview-2.1.7.tar.gz

cd ncview-2.1.7

sudo ln -sf /usr/include/X11/Xaw3d /usr/include/X11/Xaw

./configure --with-nc-config=/home/ec2-user/utilities/bin/nc-config --prefix=/home/ec2-user/utilities --with-udunits2_incdir=/home/ec2-user/utilities/include --with-udunits2_libdir=/home/ec2-user/utilities/lib

make install

Install ImageMagick and x11

sudo yum install -y ImageMagick ImageMagick-devel

Install docker

sudo service docker start

sudo usermod -a -G docker ec2-user

Start Docker automatically upon instance creation

Install docker-compose

sudo chmod +x /usr/local/bin/docker-compose

- To ensure that Docker is correctly initialized, one time only you must:

- exit

- Log back in with same IP address

- (DO NOT terminate the instance)

ssh -Y -i file.pem ec2-user@pubIPaddress

Install wgrib2

wget ftp://ftp.cpc.ncep.noaa.gov/wd51we/wgrib2/wgrib2.tgz

tar -xvzf wgrib2.tgz; rm wgrib2.tgz

cd grib2

export CC=`which gcc`

export FC=`which gfortran`

make

make lib

Install Singularity prerequisites

sudo yum update -y

sudo yum install -y openssl-devel libuuid-devel libseccomp-devel wget squashfs-tools

export GO_VERSION=1.13 OS=linux ARCH=amd64

Install Go

sudo tar -C /usr/local -xzvf go${GO_VERSION}.${OS}-${ARCH}.tar.gz; sudo rm go${GO_VERSION}.${OS}-${ARCH}.tar.gz

echo 'export PATH=/usr/local/go/bin:$PATH' >> ~/.bashrc

source ~/.bashrc

Install Singularity

git clone https://github.com/sylabs/singularity.git

cd singularity

git checkout tags/v${SINGULARITY_VERSION}

sudo ./mconfig

sudo make -C builddir

sudo make -C builddir install

sed -i 's/^hstgo=/hstgo=\/usr\/local\/go\/bin\/go/g' mconfig

sudo ./mconfig

sudo make -C builddir

sudo make -C builddir install

Finally, you should enter your home directory and modify the .bashrc and .bash_profile files to properly set the environment each time you log in.

Edits to .bashrc are shown in bold

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# Uncomment the following line if you don't like systemctl's auto-paging feature:

# export SYSTEMD_PAGER=

# User specific aliases and functions

export PATH=/usr/local/go/bin:$PATH

export PROJ_DIR=/home/ec2-user

alias wgrib2="/home/ec2-user/utilities/grib2/wgrib2/wgrib2"

alias ncview="/home/ec2-user/utilities/bin/ncview"

alias ncdump="/home/ec2-user/utilities/bin/ncdump"

Additionally, you should add the following line to ~/.bash_profile:

Then, source the .bash_profile file to enable these new changes to the environment

Access AWS EC2 on Windows

Access AWS EC2 on WindowsAccessing an EC2 Instance on your Windows Machine

There are a few different ways to access a terminal-type environment on a Windows machine. Below are a few of the most common methods. Users can first create their EC2 Instance as described in the online procedures, then use one of the options below to access their instance on their Windows machine.

After downloading and installing WSL, you can access and run a native Linux environment directly on your Windows machine. In the WSL terminal window you can then run all of the Linux/Mac tutorial procedures as-is. https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/WSL.html

Using PuTTY requires a user to convert their .pem key file to the PuTTY format .ppk file using the PuTTYgen application. Then use the PuTTY GUI to connect to the instance with the newly created .ppk file. This will launch a terminal window connected to your EC2 instance. https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/putty.html

With MobaXTerm installed you can open a MobaXterm terminal window and connect directly using same provided instructions for Linux/Mac with your .pem file. You can also use the GUI to log into your instance as well. Examples can be found online demonstrating the GUI.

AWS Tips

AWS TipsBelow are several tips for running the NWP containers on AWS. This list will be updated as we uncover new tips and tricks!

Starting, stopping, and terminating images

When you are done working but would like to save where you left off (data output, script modifications, etc.), you should stop your instance. Stopped instances do not incur usage or data transfer fees; however, you will be charged for storage on any Amazon Elastic Block Storage (EBS) volumes. If the instance state remains active, you will incur the charges.

When you no longer need an instance, including all output, file modifications, etc., you can terminate an instance. Terminating an instance will stop you from incurring anymore charges. Keep in mind that once an instance has been terminated you can not reconnect to it or restart it.

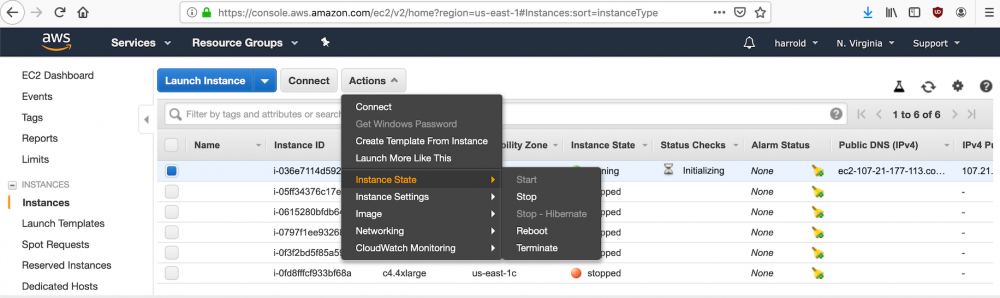

These actions can be carried out by first selecting "Instances" on the left hand side navigation bar. Next, select the instance you wish to either stop or terminate. Then, under the "Actions" button, select "Instance State" and choose your desired action.

Logging out and back into an instance

In the scenario where you need to log out or stop an instance, you can log back in and resume your work. However, you will need to reset the PROJ_DIR (Note: In the DTC private AMI, the PROJ_DIR is already set in the environment) and CASE_DIR environment variables to get your runs to work according to the instructions. Also, if you stop an instance and restart it, you will be assigned a new IPv4 Public IP that you will use when you ssh into your instance.

Transferring files to and from a local machine to an AWS instance

If you need to copy files between your local machine and AWS instance, follow these helpful examples.

Transfers to/from AWS is always initiated from your local machine/terminal window.

In the example below, we will copy namelist.wps from our local /home directory to ec2-user's /home directory. To copy files from your local machine to your instance:

In this example, we will copy wps_domain.png from ec2-user's /home directory to our local /home directory. To copy files from your instance to your local machine:

NOTE: Copying data out of an EC2 instance can incur high charges per GB, it is advised to only copy small files such as images and text files rather than large data files.

Container Software Commands and Tips

Container Software Commands and TipsThis page contains common commands and tips for Docker or Singularity (click link to jump to respective sections).

Docker

Common docker commands

Listed below are common Docker commands, some of which will be run during this tutorial. For more information on command-line interfaces and options, please visit: https://docs.docker.com/engine/reference/commandline/docker/

- Getting help:

- docker --help : lists all Docker commands

- docker run --help : lists all options for the run command

- Building or loading images:

- docker build -t my-name . : builds a Docker image from Dockerfile

- docker save my-name > my-name.tar : saves a Docker image to a tarfile

- docker load < my-name.tar.gz : loads a Docker image from a tarfile

- Listing images and containers:

- docker images : lists the images that currently exist, including the image names and ID's

- docker ps -a : lists the containers that currently exist, including the container names and ID's

- Deleting images and containers:

- docker rmi my-image-name: removes an image by name or ID

- docker rmi $(docker images -q) or docker rmi `docker images -q` : removes all existing images

- docker rm my-container-name : removes a container by name or ID

- docker rm $(docker ps -a -q) or docker rm `docker ps -aq` : removes all existing containers

- These commands can be forced with by adding -f

- Creating and running containers:

- docker run --rm -it \

--volumes-from container-name \

-v local-path:container-path \

--name my-container-name my-image-name : creates a container from an image, where:- --rm : automatically removes the container when it exits

- -it : creates an interactive shell in the container

- --volumes-from : mounts volumes from the specified container

- -v : defines a mapping between a local directory and a container directory

- --name : assigns a name to the container

- docker exec my-container-name : executes a command in a running container

- docker-compose up -d : defines and runs a multi-container Docker application

- docker run --rm -it \

Docker run commands for this tutorial

This tutorial makes use of docker run commands with several different arguments. Here is one example:

docker run --rm -it -e LOCAL_USER_ID=`id -u $USER` \

-v ${PROJ_DIR}/container-dtc-nwp/components/scripts/common:/home/scripts/common \

-v ${PROJ_DIR}/container-dtc-nwp/components/scripts/sandy_20121027:/home/scripts/case \

-v ${CASE_DIR}/wpsprd:/home/wpsprd -v ${CASE_DIR}/gsiprd:/home/gsiprd -v ${CASE_DIR}/wrfprd:/home/wrfprd \

--name run-sandy-wrf dtcenter/wps_wrf:${PROJ_VERSION} /home/scripts/common/run_wrf.ksh

The different parts of this command are detailed below:

-

docker run --rm -it

- As described above, this portion creates and runs a container from an image, creates an interactive shell, and automatically removes the container when finished

-

-e LOCAL_USER_ID=`id -u $USER`

- The `-e` flag sets an environment variable in the interactive shell. In this case, our containers have been built with a so-called "entrypoint" that automatically runs a script on execution that sets the UID within the container to the value of the LOCAL_USER_ID variable. In this case, we are using the command `id -u $USER` to output the UID of the user outside the container. This means that the UID outside the container will be the same as the UID inside the container, ensuring that any files created inside the container can be read outside the container, and vice versa

-

-v ${PROJ_DIR}/container-dtc-nwp/components/scripts/common:/home/scripts/common

- As described above, the `-v` flag mounts the directory ${PROJ_DIR}/container-dtc-nwp/components/scripts/common outside of the container to the location /home/scripts/common within the container. The other -v commands do the same

-

--name run-dtc-nwp-derecho

- Assigns the name "run-dtc-nwp-derecho" to the running container

-

dtcenter/wps_wrf:${PROJ_VERSION}

- The "${PROJ_VERSION}" tag of image "dtcenter/wps_wrf" will be used to create the container. This should be something like "4.0.0", and will have been set in the "Set Up Environment" step for each case

-

/home/scripts/common/run_wrf.ksh

- Finally, once the container is created (and the entrypoint script is run), the script "/home/scripts/common/run_wrf.ksh" (in the container's directory structure) will be run, which will set up the local environment and input files and run the WRF executable.

Common Docker problems

Docker is a complicated system, and will occasionally give you unintuitive or even confusing error messages. Here are a few that we have run into and the solutions that have worked for us:

-

Problem: When mounting a local directory, inside the container it is owned by root, and we can not read or write any files in that directory

-

Solution: Always specify the full path when mounting directories into your container.

-

When "bind mounting" a local directory into your container, you must specify a full path (e.g. /home/user/my_data_directory) rather than a relative path (e.g. my_data_directory). We are not exactly sure why this is the case, but we have reliably reproduced and solved this error many times in this way.

-

-

Problem: Log files for executables run with MPI feature large numbers of similar error messages:

-

Read -1, expected 682428, errno = 1

Read -1, expected 283272, errno = 1

Read -1, expected 20808, errno = 1

Read -1, expected 8160, errno = 1

Read -1, expected 390504, errno = 1

Read -1, expected 162096, errno = 1 -

etc. etc.

-

These errors are harmless, but their inclusion can mask the actual useful information in the log files, and in long WRF runs can cause the log files to swell to unmanageable sizes.

-

-

Solution: Add the flag "--cap-add=SYS_PTRACE" to your docker run command

-

For example:

docker run --cap-add=SYS_PTRACE --rm -it -e LOCAL_USER_ID=`id -u $USER` -v ${PROJ_DIR}/container-dtc-nwp/components/scripts/common:/home/scripts/common -v ${CASE_DIR}/wrfprd:/home/wrfprd -v ${CASE_DIR}/wpsprd:/home/wpsprd -v ${PROJ_DIR}/container-dtc-nwp/components/scripts/derecho_20120629:/home/scripts/case --name run-dtc-nwp-derecho dtcenter/wps_wrf:${PROJ_VERSION} /home/scripts/common/run_wrf.ksh -np 8

-

Singularity

Common Singularity commands

Listed below are common Singularity commands, some of which will be run during this tutorial. For more information on command-line interfaces and options, please visit: https://sylabs.io/guides/3.7/user-guide/quick_start.html#overview-of-the-singularity-interface

If a problem occurs during the building of a singularity image from dockerhub (for example, running out of disk space) it can result in a corrupted cache for that image causing it to not run properly, even if you re-pull the container. You can clear the cache using the command:

On the Cheyenne supercomputer, downloading and converting a singularity image file from Dockerhub may use too many resources and result in you being kicked off a login node. To avoid this, you can run an interactive job on the Casper machine using the `execdav` command (this example requests a 4-core job for 90 minutes)

Common Singularity problems

As with Docker, Singularity is a complicated system, and will occasionally give you unintuitive or even confusing error messages. Here are a few that we have run into and the solutions that have worked for us:

-

Problem: When running a singularity container, I get the following error at the end:

-

INFO: Cleaning up image...

ERROR: failed to delete container image tempDir /some_directory/tmp/rootfs-979715833: unlinkat /some_directory/tmp/rootfs-979715833/root/root/.tcshrc: permission denied

-

-

Solution: This problem is due to temporary files created on disk when running Singularity outside of "sandbox" mode. They can be safely ignored