METplus Practical Session Guide (Feb 2019)

Welcome to the METplus Practical Session Guide

February 4 - 6, 2019, at NCAR in Boulder, Colorado in conjunction with the 2019 Winter WRF/MPAS Tutorial

The METplus practical consists of five sessions. Each session contains instructions for running individual MET tools directly on the command line followed by instructions for running the same tools as part of a METplus use case.

Please follow the link to the appropriate session:

- Session 1, Monday Afternoon

METplus Setup/Grid-to-Grid - Session 2, Tuesday Morning

Grid-to-Obs - Session 3, Tuesday Afternoon

Ensemble and PQPF - Session 4, Wednesday Morning

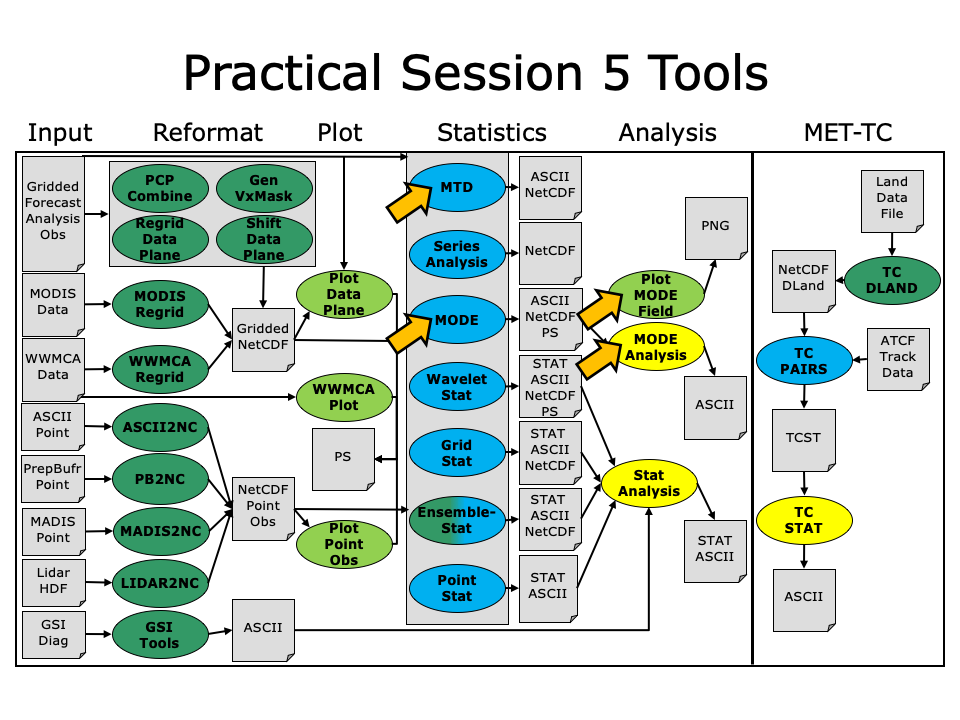

MODE and MTD - Session 5, Wednesday Afternoon

Trk&Int/Feature Relative

The Tutorial Schedule contains PDFs of the lecture slides; final versions will be posted here after the tutorial.

After completing these sessions, please fill out the METplus Tutorial Survey to receive your certificate of completion.

Session 1: METplus Setup/Grid-to-Grid

METplus Practical Session 1

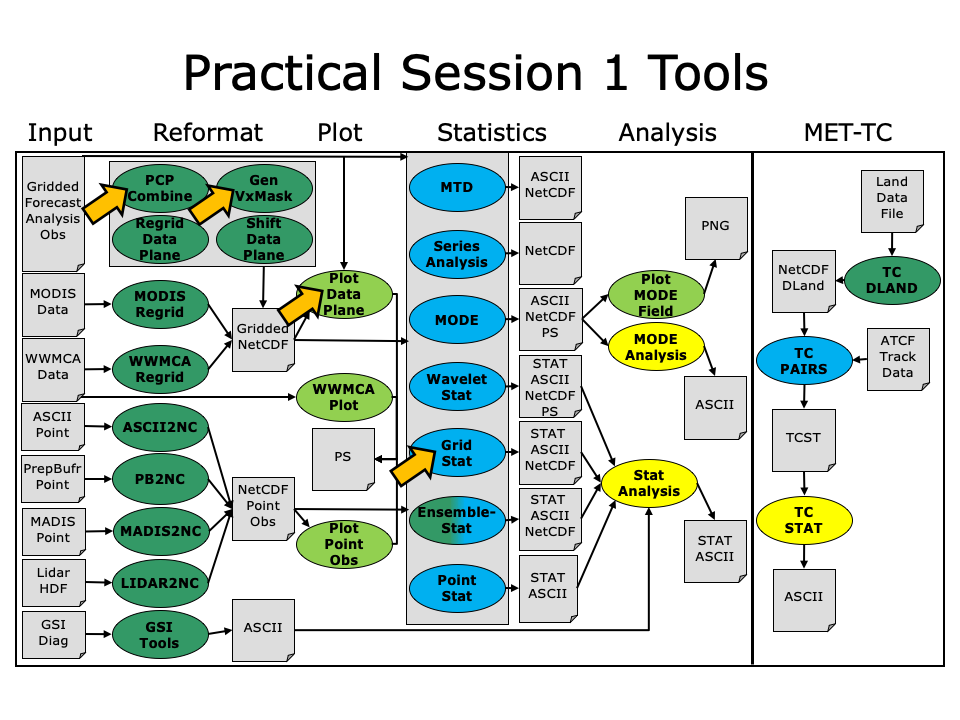

During the first METplus practical session, you will run the tools shown in the figure.

During this practical session, please work on the Session 1 exercises. You may navigate through this tutorial by following the arrows in the bottom-right or by using the left-hand menu navigation.

Each practical session builds on the output of previous practical sessions, so please work on them in the order listed.

Throughout this tutorial, text written in bold and italics should be copied from your browser and pasted on the command line. You can copy-and-paste the text using one of two methods:

- Use the mouse:

  - Hold down the left mouse button to select the text

  - Place the mouse in the desired location and click the middle mouse button once to paste the selected text

- Use the Copy and Paste options:

  - Hold down the left mouse button to select the text

  - Click the right mouse button and select the option for Copy

  - Place the mouse in the desired location, click the right mouse button, and select the option for Paste

You may find it useful to refer to the MET Users Guide during the tutorial exercises:

okular /classroom/wrfhelp/MET/8.0/met-8.0/doc/MET_Users_Guide.pdf &

Click the forward arrow on the right to get started!

METplus Setup

METplus Overview

METplus is a set of Python modules that have been developed with the flexibility to run MET for various use cases or scenarios. The goal is to simplify running MET for scientists. Currently, the primary means of achieving this is through METplus configuration files, a.k.a. "conf files". METplus is designed to provide a framework in which additional MET use cases can be added. The conf file implementation uses a Python package called produtil that was developed by NOAA/NCEP/EMC for the HWRF system.

The METplus Python application is designed to be run in three ways: as stand-alone module files, each calling one MET process; through a user-defined process list, as in this tutorial; or within a workflow management system on an HPC cluster, which is TBD.

Please be sure to follow the instructions in order.

METplus Useful Links

The following links are just for reference, and not required for this practical session. A METplus release is available on GitHub, and provides sample data and instructions.

GitHub METplus links

METplus: Initial setup

Prerequisites: Software

The following is a full list of software that is required to utilize all the functionality in METplus.

NOTE: We will not be running the cyclone plotter or grid plotting scripts in this tutorial, so the additional libraries mentioned below are not installed.

- Python 2.7.x

- Additional Python libraries, required by the cyclone plotter and grid plotting scripts:

- numpy

- matplotlib - not installed; it is not required for this tutorial.

- cartopy - not installed; it is not required for this tutorial.

- MET 8.1

- R - used by tcmpr_plotter_wrapper, which wraps the MET plot_tcmpr.R script

- ncap2 - NCO netCDF Operators

- wgrib2

- ncdump - NetCDF binaries

- convert - Utility from the ImageMagick software suite

Getting The Software

For this tutorial we'll use the METplus v2.2 release. We will configure METplus to run the met-8.1 software, which has already been installed in a common location and is available through a module file.

Please use the following instructions to set up METplus on your tutorial machine:

- Unpack the release tarball and create a METplus symbolic link (copy and paste the following commands):

mkdir -p metplus_tutorial/output

tar -xvzf /classroom/wrfhelp/METplus/tar_files/METplus_v2.2.tar.gz -C metplus_tutorial/

ln -s metplus_tutorial/METplus-2.2 METplus

Sample Data Input and Location of Output

The Sample Input Data for running this METplus tutorial is provided in a shared location on-disk and does not require copying to your home directory. How to set the path to the sample input data and location of the output when running METplus will be explained later in the tutorial, utilizing METplus configuration files.

Just note that:

When running METplus use cases, all output is placed in your ${HOME}/metplus_tutorial/output directory.

When running MET on the command line, all output will be written to ${HOME}/metplus_tutorial/output/met_output

Your Runtime Environment

For this tutorial, the MET software has been set up as a loadable module file. We need to add the following command to your environment so the MET software is available when you log in.

Edit the .usermodlogin file to configure your runtime environment to load the MET software:

Open the following file with the editor of your choice:

Add the following to the LAST LINE of the file:

Save and close that file, and then source it to apply the settings to the current shell. The next time you log in, you do not have to source this file again.

The system PATH environment variable is set to allow the METplus application to be run from any directory.

As a note, there is also an optional JLOGFILE environment variable (Job Log), which indicates where JLOGS will be saved. JLOGS provide an overview of the METplus Python modules being run and are intended for use when running METplus within a workflow management system on an HPC cluster. If this environment variable is unset, output is directed to stdout (your display). JLOGFILE will not be defined during this tutorial; if you are interested, more information regarding JLOGFILE can be found in the METplus Users Guide. You will now edit the system PATH.

Edit the .usermodrc file to configure your runtime environment for use with METplus:

Open the following file with the editor of your choice:

Add the following to update your system PATH setting:

NOTE: If the PATH variable already exists in your .usermodrc file, then make certain to ADD the line below after the existing setting.

csh:

bash:

Save and close that file, and then source it to apply the settings to the current shell. The next time you log in, you do not have to source this file again.

METplus: Directories and Configuration Files - Overview

METplus directory structure

Brief description and overview of the METplus/ directory structure.

- doc/ - Doxygen files for building the html API documentation.

- doc/METplus_Users_Guide - LyX files and the user guide PDF file.

- internal_tests/ - for engineering tests

- parm/ - where config files live

- README.md - general README

- sorc/ - executables and documentation build system

- ush/ - python scripts

METplus default configuration files

The METplus default configuration files metplus_system.conf, metplus_data.conf, metplus_runtime.conf, and metplus_logging.conf are always read, in the order shown. Any additional configuration files passed in on the command line are then processed in the order in which they are specified. Each successive conf file can therefore override variables defined in any previously processed conf file. This also allows conf files to be structured from general (settings used by all use cases, i.e. the MET install dir) to more specific (the plot type when running the track and intensity plotter). The idea is to create a hierarchy of conf files that is easier to maintain, read, and manage. Note that running METplus creates a single final configuration file, which can be viewed to understand the result of all the conf file processing.
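The "last value wins" behavior can be illustrated with Python's standard configparser module. This is a minimal Python 3 sketch, not how METplus itself reads conf files (it uses produtil); the conf contents below are hypothetical and heavily abbreviated, and /tmp/use_case_output is an invented value used only to show that order matters.

```python
import configparser


def merge_confs(conf_texts):
    """Read conf text in order; a later value for the same [section] KEY wins."""
    # interpolation=None keeps values such as {OUTPUT_BASE}/tmp verbatim
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # preserve upper-case variable names
    for text in conf_texts:
        parser.read_string(text)
    return parser


# Hypothetical, abbreviated conf contents (each needs a [section] header).
system_conf = "[dir]\nOUTPUT_BASE = /path/to\n"
use_case_conf = "[dir]\nOUTPUT_BASE = /tmp/use_case_output\n"
custom_conf = "[dir]\nOUTPUT_BASE = /home/user/metplus_tutorial/output\n"

# Custom conf last: its OUTPUT_BASE overrides both earlier settings.
final = merge_confs([system_conf, use_case_conf, custom_conf])
print(final["dir"]["OUTPUT_BASE"])  # /home/user/metplus_tutorial/output

# Swapping the last two files changes the result.
swapped = merge_confs([system_conf, custom_conf, use_case_conf])
print(swapped["dir"]["OUTPUT_BASE"])  # /tmp/use_case_output

# configparser also rejects text that has no [section] header.
try:
    merge_confs(["OUTPUT_BASE = /path/to\n"])
except configparser.MissingSectionHeaderError:
    print("conf text without a [section] header is rejected")
```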

TIP: The final metplus conf file is defined here:

metplus_runtime.conf:METPLUS_CONF={OUTPUT_BASE}/metplus_final.conf

Use this file to see the result of all the conf file processing; this can be very helpful when troubleshooting.

NOTE: The syntax for METplus configuration files MUST include a "[section]" header with the variable names and values on subsequent lines.

Unless otherwise indicated, all directories are relative to your ${HOME}/METplus directory.

The parm/metplus_config directory - there are four config files:

- metplus_system.conf

- contains "[dir]" and "[exe]" to set directory and executable paths

- any information specific to host machine

- metplus_data.conf

- Sample data or Model input location

- filename templates and regex for filenames

- metplus_runtime.conf

- contains "[config]" section

- var lists, stat lists, configurations, process list

- anything else needed at runtime

- metplus_logging.conf

- contains "[dir]" section for setting the location of log files.

- contains "[config]" section for setting various logging configuration options.

The parm/use_cases directory - The use cases:

This is where the use cases you will be running live. The use case file naming and directory structure follow a convention intended to simplify management of common configuration items for a specific use case. Under the use_cases directory is a subdirectory for each use case. Under that is an examples subdirectory and a more general configuration file containing information shared by the examples of that use case.

- For example, the track and intensity use case conf directory and file structure.

- track_and_intensity - directory

- track_and_intensity/track_and_intensity.conf - Any common conf file settings shared by track and intensity examples

- track_and_intensity/examples - directory to hold various track and intensity example conf files.

- track_and_intensity/examples/tcmpr_mean_median.conf - specific use case

Within each use case there is a met_config subdirectory that contains the MET-specific config files for that use case. Store all your MET config files in the parm/use_cases/<use case>/met_config directory, e.g.:

- GridStatConfig_anom (for MET grid_stat)

- TCPairsETCConfig (for MET tc_pairs)

- SeriesAnalysisConfig_by_init (for MET series_analysis)

- SeriesAnalysisConfig_by_lead (for MET series_analysis)

METplus: CUSTOM Configuration File Settings

Create a METplus custom conf file

In this section you will create a custom configuration file that you will use in each call to METplus.

The paths in this practical assume that you have installed METplus under your $HOME directory and that you are using the shared installation of MET. If not, then you need to adjust your paths accordingly.

Perform the following commands ...

Change to your ${HOME}/METplus/parm directory and copy your custom conf file.

cp /classroom/wrfhelp/METplus/mycustom.conf ${HOME}/METplus/parm/

NOTE: By placing your custom configuration file under the parm directory, you do not have to specify the path to mycustom.conf in the command when referring to it. The commands in this tutorial are set up that way, so make sure to create this file under the METplus/parm directory.

NOTE: A METplus conf file is not a shell script.

You CANNOT refer to environment variables as you would in a shell script, or as in the command above, i.e. ${HOME}.

Instead, in a conf file, you must refer to it as {ENV[HOME]}.
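To make the difference concrete, here is a hypothetical Python 3 helper, expand_refs, that resolves {ENV[NAME]} and {VAR} references in a flat dictionary of conf values. It is only a sketch of the idea, not produtil's actual implementation, and the /home/user value is invented. Note that, in this sketch, shell-style ${HOME} is left untouched because no conf variable named HOME exists.

```python
import os
import re

ENV_REF = re.compile(r"\{ENV\[(\w+)\]\}")  # e.g. {ENV[HOME]}
VAR_REF = re.compile(r"\{(\w+)\}")         # e.g. {OUTPUT_BASE}


def expand_refs(values, env=None, max_passes=10):
    """Hypothetical helper: resolve {ENV[NAME]} and {VAR} references."""
    env = os.environ if env is None else env
    resolved = dict(values)
    for _ in range(max_passes):
        changed = False
        for key, val in list(resolved.items()):
            new = ENV_REF.sub(lambda m: env[m.group(1)], val)
            # Unknown {NAME} references are left as they are.
            new = VAR_REF.sub(lambda m: resolved.get(m.group(1), m.group(0)), new)
            if new != val:
                resolved[key] = new
                changed = True
        if not changed:
            break
    return resolved


conf = {
    "OUTPUT_BASE": "{ENV[HOME]}/metplus_tutorial/output",
    "TMP_DIR": "{OUTPUT_BASE}/tmp",
    "METPLUS_CONF": "{OUTPUT_BASE}/metplus_final.conf",
    "SHELL_STYLE": "${HOME}/metplus_tutorial",  # shell syntax: not expanded
}
for key, val in expand_refs(conf, env={"HOME": "/home/user"}).items():
    print(key, "=", val)
```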

Reminder: Make certain to maintain the KEY = VALUE pairs under their respective current [sections] in the conf file.

Below is the exact content of your mycustom.conf file you just copied to your parm directory. It is instructive to REVIEW the settings to understand what changes were REQUIRED in order to run METplus with the default example use cases from a clean download of the software.

Also Note: [config] is not required if you aren't setting any values in this file for that section, but you can add it in case you want to add options in that section later - which you will be doing.

[dir]
# directory to write output files and logs
OUTPUT_BASE = {ENV[HOME]}/metplus_tutorial/output
# directory containing input data
INPUT_BASE = /classroom/wrfhelp/METplus_Data
# location of MET installation
MET_INSTALL_DIR = /classroom/wrfhelp/MET/8.0
# directory to write temp files
TMP_DIR = {OUTPUT_BASE}/tmp

[config]

[exe]
# Non-MET executables
WGRIB2 = /usr/local/wgrib2/bin/wgrib2
CUT_EXE = /usr/bin/cut
TR_EXE = /usr/bin/tr
RM_EXE = /usr/bin/rm
NCAP2_EXE = /usr/local/nco-4.6.6-gcc/bin/ncap2
CONVERT_EXE = /usr/bin/convert
NCDUMP_EXE = /classroom/wrfhelp/MET/8.0/external_libs/bin/ncdump
EGREP_EXE = /usr/bin/egrep

METplus: Overriding Configuration File Settings

Overriding conf files

This page is meant to be instructive and to reinforce the concepts of default configuration variables and overriding conf variables. For your first use case, this page will override the OUTPUT_BASE configuration variable you set previously.

The values in the default metplus_system.conf, metplus_data.conf, metplus_runtime.conf, and metplus_logging.conf configuration files can be overridden in the use case conf files and/or a user's custom configuration file. Recall the general use case layout:

- ${HOME}/METplus/parm/use_cases/<use_case>/<use_case>.conf

- ${HOME}/METplus/parm/use_cases/<use_case>/examples/<some_example>.conf

Some variables in the system conf are set to '/path/to' and must be overridden to run METplus, such as OUTPUT_BASE in metplus_system.conf.

Reminder: When adding variables to be overridden, make sure to place the variable under the appropriate section.

For example, [config], [dir], [exe]. If necessary, refer to the appropriate default conf files in parm/metplus_config to determine the [section] that corresponds to the variable you are overriding. The value used will be the last one set in the sequence of configuration files. See {OUTPUT_BASE}/metplus_final.conf to see which values were used for a given METplus run.

Example:

View the ${HOME}/METplus/parm/metplus_config/metplus_system.conf file and notice how OUTPUT_BASE = /path/to . This implies it is REQUIRED to be overridden to a valid path.

If you are NOT using a custom conf file, you will need to set the values in metplus_config. We recommend using a custom conf file to avoid conflicts if using a shared METplus installation. It is also beneficial when upgrading to a new version of METplus, as you will not have to scan the metplus_config directory to find all of the changes you have made.

METplus: How to Run

Running METplus

Running METplus involves invoking the Python script master_metplus.py from any directory, followed by a list of configuration files, using the -c option for each additional conf file (conf files are specified relative to the METplus/parm directory).

Reminder: The default set of conf files are always read in and processed in the following order:

metplus_system.conf, metplus_data.conf, metplus_runtime.conf, metplus_logging.conf.

Example: Run the default configuration

If you have configured METplus correctly and run master_metplus.py with only your custom conf file, it will generate a usage statement indicating that other config files are required to perform useful tasks. It will generate an error statement if something is amiss.

Run master_metplus.py on the command line:

master_metplus.py -c mycustom.conf

THE EXAMPLES BELOW SHOW THE SYNTAX FOR PASSING IN CONF FILES AND ARE NOT MEANT TO BE RUN.

However, feel free to run the command and become familiar with the errors that are generated when referring to a path and/or conf file that does not exist.

Remember: Additional conf files are processed after the metplus_config files in the order specified on the command line.

Order matters, since each successive conf file will override any variable defined in a previous conf file.

NOTE: The processing order allows for structuring your conf files from general (variables shared-by-all) to specific (variables shared-by-few).

Syntax: Running a use_case configuration

Syntax: Running a use_case/examples configuration

Syntax: Running a use_case/examples configuration with your CUSTOM conf.
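These syntax forms differ only in which conf files are passed, each preceded by its own -c flag and given relative to the METplus/parm directory. The hypothetical Python 3 helper below builds such an argument list in general-to-specific order. Two details are assumptions that follow the processing-order rules above rather than the original command listings: an examples run also passes its parent use case conf, and the custom conf goes last so that it overrides everything before it.

```python
from pathlib import PurePosixPath


def use_case_args(use_case, example=None, custom="mycustom.conf"):
    """Hypothetical helper: build master_metplus.py arguments.

    Paths are relative to METplus/parm and ordered general to specific,
    so a later conf file overrides an earlier one.
    """
    base = PurePosixPath("use_cases") / use_case
    confs = [base / (use_case + ".conf")]
    if example:
        confs.append(base / "examples" / (example + ".conf"))
    if custom:
        confs.append(PurePosixPath(custom))
    args = ["master_metplus.py"]
    for conf in confs:
        args += ["-c", str(conf)]  # one -c per conf file
    return args


print(" ".join(use_case_args("track_and_intensity", "tcmpr_mean_median")))
```

For the track and intensity example this prints a single command line with three -c options, from the shared track_and_intensity.conf through tcmpr_mean_median.conf to mycustom.conf.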

MET Tool: PCP-Combine

We now shift to a discussion of the MET PCP-Combine tool and will practice running it directly on the command line. Later in this session, we will run PCP-Combine as part of a METplus use case.

PCP-Combine Functionality

The PCP-Combine tool is used (if needed) to add, subtract, or sum accumulated precipitation from several gridded data files into a single NetCDF file containing the desired accumulation period. Its NetCDF output may be used as input to the MET statistics tools. PCP-Combine may be configured to combine any gridded data field you'd like. However, all gridded data files being combined must have already been placed on a common grid. The copygb utility is recommended for re-gridding GRIB files. In addition, the PCP-Combine tool will only sum model files with the same initialization time unless it is configured to ignore the initialization time.

PCP-Combine Usage

View the usage statement for PCP-Combine by simply typing the following:

pcp_combine

| Usage: pcp_combine | |
| --- | --- |
| [[-sum] sum_args] \| [-add add_args] \| [-subtract subtract_args] | Operation to be performed (Note: "\|" means "or") |
| [[-sum] sum_args] | Precipitation from multiple files containing the same accumulation interval should be summed up using the arguments provided. |
| [-add add_args] | Values from one or more files should be added together where the accumulation interval is specified separately for each input file. |
| [-subtract subtract_args] | Values from exactly two files should be subtracted where the accumulation interval is specified separately for each input file. |
| [-field string] | Overrides the default use of accumulated precipitation (optional). |
| [-name variable_name] | Overrides the default NetCDF variable name to be written (optional). |
| [-log file] | Outputs log messages to the specified file |
| [-v level] | Level of logging |
| [-compress level] | NetCDF file compression |

Use the -sum, -add, or -subtract command line option to indicate the operation to be performed. Each operation has its own set of required arguments.

PCP-Combine Tool: Run Sum Command

Since PCP-Combine performs a simple operation and reformatting step, no configuration file is needed.

Start by making an output directory for PCP-Combine and changing directories:

mkdir -p ${HOME}/metplus_tutorial/output/met_output/pcp_combine

cd ${HOME}/metplus_tutorial/output/met_output/pcp_combine

Now let's run PCP-Combine twice using some sample data that's included with the MET tarball:

pcp_combine \
  -sum 20050807_000000 3 20050807_120000 12 \
  sample_fcst_12L_2005080712V_12A.nc \
  -pcpdir /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2005080700

pcp_combine \
  -sum 00000000_000000 1 20050807_120000 12 \
  sample_obs_12L_2005080712V_12A.nc \
  -pcpdir /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_obs/ST2ml

The "\" symbols in the commands above are used for ease of reading. They are line continuation markers enabling us to spread a long command line across multiple lines. They should be followed immediately by "Enter". You may copy and paste the command line OR type in the entire line with or without the "\".

Both commands run the sum command, which searches the contents of the -pcpdir directory for the data required to create the requested accumulation interval.

In the first command, PCP-Combine summed four 3-hourly accumulation forecast files into a single 12-hour accumulation forecast. In the second command, PCP-Combine summed twelve 1-hourly accumulation observation files into a single 12-hour accumulation observation. PCP-Combine performs these tasks very quickly.

We'll use these PCP-Combine output files as input for Grid-Stat. So make sure that these commands have run successfully!

PCP-Combine Tool: Output

When PCP-Combine is finished, you may view the output NetCDF files it wrote using the ncdump and ncview utilities. Run the following commands to view the contents of the NetCDF files:

ncview sample_fcst_12L_2005080712V_12A.nc &

ncview sample_obs_12L_2005080712V_12A.nc &

ncdump -h sample_fcst_12L_2005080712V_12A.nc

ncdump -h sample_obs_12L_2005080712V_12A.nc

The ncview windows display plots of the precipitation data in these files. The output of ncdump indicates that the gridded fields are named APCP_12, the GRIB code abbreviation for accumulated precipitation. The accumulation interval is 12 hours for both the forecast (3-hourly * 4 files = 12 hours) and the observation (1-hourly * 12 files = 12 hours).

Plot-Data-Plane Tool

The Plot-Data-Plane tool can be run to visualize any gridded data that the MET tools can read. It is a very helpful utility for making sure that MET can read data from your file, orient it correctly, and plot it at the correct spot on the earth. When using new gridded data in MET, it's a great idea to run it through Plot-Data-Plane first:

plot_data_plane \
  sample_fcst_12L_2005080712V_12A.nc \
  sample_fcst_12L_2005080712V_12A.ps \
  'name="APCP_12"; level="(*,*)";'

okular sample_fcst_12L_2005080712V_12A.ps &

Next, try re-running the command listed above, but add the convert(x)=x/25.4; function to the config string (Hint: after the level setting but before the last closing tick) to change units from millimeters to inches. What happened to the values in the colorbar?

Now, try re-running again, but add the censor_thresh=lt1.0; censor_val=0.0; options to the config string to reset any data values less than 1.0 to a value of 0.0. How has your plot changed?

The convert(x) and censor_thresh/censor_val options can be used in config strings and MET config files to transform your data in simple ways.
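Both options apply a simple transform to every grid value. The Python 3 sketch below mimics them on a short list of hypothetical precipitation values in millimeters. The transform function and the ("lt", 1.0) tuple are illustrative stand-ins, not MET's config syntax, and the sketch assumes the conversion is applied before the censoring; check the MET User's Guide for the order MET itself uses.

```python
import operator

CENSOR_OPS = {"lt": operator.lt, "le": operator.le, "gt": operator.gt, "ge": operator.ge}


def transform(values, convert=None, censor_thresh=None, censor_val=None):
    """Sketch of convert(x) followed by censor_thresh/censor_val.

    censor_thresh is a hypothetical (op, threshold) pair such as ("lt", 1.0).
    Applying the conversion before censoring is an assumption.
    """
    out = [convert(x) if convert else x for x in values]
    if censor_thresh is not None:
        op_name, thresh = censor_thresh
        compare = CENSOR_OPS[op_name]
        out = [censor_val if compare(x, thresh) else x for x in out]
    return out


mm = [0.5, 12.7, 25.4, 50.8]                      # hypothetical values (mm)
inches = transform(mm, convert=lambda x: x / 25.4)
print(inches)
print(transform(mm, censor_thresh=("lt", 1.0), censor_val=0.0))  # [0.0, 12.7, 25.4, 50.8]
print(transform(mm, convert=lambda x: x / 25.4,
                censor_thresh=("lt", 1.0), censor_val=0.0))
```

With both options combined, this sketch compares the censor threshold against the converted values, so anything below 1 inch, rather than 1 mm, is set to 0.0.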

PCP-Combine Tool: Add and Subtract Commands

We have run examples of the PCP-Combine -sum command, but the tool also supports the -add and -subtract commands. While the -sum command defines a directory to be searched, for -add and -subtract we tell PCP-Combine exactly which files to read and what data to process. The following command adds together 3-hourly precipitation from 4 forecast files, just like we did in the previous step with the -sum command:

pcp_combine -add \
  /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2005080700/wrfprs_ruc13_03.tm00_G212 03 \
  /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2005080700/wrfprs_ruc13_06.tm00_G212 03 \
  /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2005080700/wrfprs_ruc13_09.tm00_G212 03 \
  /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2005080700/wrfprs_ruc13_12.tm00_G212 03 \
  add_APCP_12.nc

By default, PCP-Combine looks for accumulated precipitation, and the 03 tells it to look for 3-hourly accumulations. However, that 03 string can be replaced with a configuration string describing the data to be processed. The configuration string should be enclosed in single quotes. Below, we add together the U and V components of 10-meter wind from the same input file. You would not typically want to do this, but it demonstrates the functionality. We also use the -name command line option to define a descriptive output NetCDF variable name:

pcp_combine -add \
  /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2005080700/wrfprs_ruc13_03.tm00_G212 'name="UGRD"; level="Z10";' \
  /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2005080700/wrfprs_ruc13_03.tm00_G212 'name="VGRD"; level="Z10";' \
  add_WINDS.nc \
  -name UGRD_PLUS_VGRD

While the -add command can be run on one or more input files, the -subtract command requires exactly two. Let's rerun the wind example from above but do a subtraction instead:

pcp_combine -subtract \
  /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2005080700/wrfprs_ruc13_03.tm00_G212 'name="UGRD"; level="Z10";' \
  /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2005080700/wrfprs_ruc13_03.tm00_G212 'name="VGRD"; level="Z10";' \
  subtract_WINDS.nc \
  -name UGRD_MINUS_VGRD
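The rules for the two commands can be summarized in a short Python 3 sketch. The combine function is hypothetical and operates on tiny hand-made grids standing in for the 10-meter UGRD and VGRD fields. It enforces the rules described above: -add accepts one or more inputs, -subtract exactly two, and all inputs must already share a common grid.

```python
def combine(op, fields):
    """Hypothetical sketch of -add / -subtract on grids stored as row lists."""
    if op not in ("add", "subtract"):
        raise ValueError("unsupported operation: " + op)
    if op == "subtract" and len(fields) != 2:
        raise ValueError("-subtract requires exactly two inputs")
    if not fields:
        raise ValueError("at least one input is required")
    n_rows, n_cols = len(fields[0]), len(fields[0][0])
    for field in fields:
        # Every input must be on the same grid (same dimensions).
        if len(field) != n_rows or any(len(row) != n_cols for row in field):
            raise ValueError("inputs must be on a common grid")
    sign = 1 if op == "add" else -1
    result = [row[:] for row in fields[0]]  # copy the first field
    for field in fields[1:]:
        for i, row in enumerate(field):
            for j, value in enumerate(row):
                result[i][j] += sign * value
    return result


u = [[3.0, -2.0], [0.5, 4.0]]   # hypothetical 10-m UGRD values
v = [[1.0, 1.0], [-0.5, 2.0]]   # hypothetical 10-m VGRD values
print(combine("add", [u, v]))       # [[4.0, -1.0], [0.0, 6.0]]
print(combine("subtract", [u, v]))  # [[2.0, -3.0], [1.0, 2.0]]
```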

Now run Plot-Data-Plane to visualize this output. Use the -plot_range option to specify the desired plotting range, the -title option to add a title, and the -color_table option to switch from the default color table to one that's good for positive and negative values:

plot_data_plane \
  subtract_WINDS.nc \
  subtract_WINDS.ps \
  'name="UGRD_MINUS_VGRD"; level="(*,*)";' \
  -plot_range -15 15 \
  -title "10-meter UGRD minus VGRD" \
  -color_table MET_BASE/colortables/NCL_colortables/posneg_2.ctable

okular subtract_WINDS.ps &

MET Tool: Gen-Vx-Mask

Gen-Vx-Mask Functionality

The Gen-Vx-Mask tool may be run to speed up the execution time of the other MET tools. Gen-Vx-Mask defines a bitmap masking region for your domain. It takes as input a gridded data file defining your domain and a second argument to define the area of interest (varies by masking type). It writes out a NetCDF file containing a bitmap for that masking region. You can run Gen-Vx-Mask iteratively, passing its output back in as input, to define more complex masking regions.

You can then use the output of Gen-Vx-Mask to define masking regions in the MET statistics tools. While those tools can read ASCII lat/lon polyline files directly, they are able to process the output of Gen-Vx-Mask much more quickly than the original polyline. The idea is to define your masking region once for your domain with Gen-Vx-Mask and apply the output many times in the MET statistics tools.
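To illustrate what a polyline mask contains, the Python 3 sketch below builds a 1/0 bitmap with the standard ray-casting point-in-polygon test. This is a generic technique chosen for illustration, not necessarily how Gen-Vx-Mask is implemented. It treats latitude and longitude as planar coordinates, and the square polygon and tiny lat/lon grid are hypothetical (the real CONUS polyline has 244 points).

```python
def point_in_polygon(lat, lon, polygon):
    """Ray casting: count polygon edges crossed by a ray from the point
    toward increasing longitude; an odd count means the point is inside."""
    inside = False
    n = len(polygon)
    for i in range(n):
        lat1, lon1 = polygon[i]
        lat2, lon2 = polygon[(i + 1) % n]  # wrap around to close the polygon
        if (lat1 > lat) != (lat2 > lat):   # edge straddles this latitude
            cross_lon = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            if lon < cross_lon:            # crossing lies east of the point
                inside = not inside
    return inside


def polyline_mask(lats, lons, polygon, value=1):
    """Bitmap with `value` inside the polygon and 0 everywhere else."""
    return [[value if point_in_polygon(la, lo, polygon) else 0 for lo in lons]
            for la in lats]


box = [(30.0, -110.0), (30.0, -90.0), (45.0, -90.0), (45.0, -110.0)]
mask = polyline_mask([25.0, 35.0, 40.0, 50.0], [-120.0, -100.0, -95.0, -80.0], box)
for row in mask:
    print(row)
print("points in mask:", sum(map(sum, mask)))  # points in mask: 4
```

The optional value argument plays the role of the -value option listed in the usage table below; the test is evaluated once per grid point, which is why larger grids and longer polylines take longer to process.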

Gen-Vx-Mask Usage

View the usage statement for Gen-Vx-Mask by simply typing the following:

gen_vx_mask

| Usage: gen_vx_mask | |
| --- | --- |
| input_file | Gridded data file defining the domain |
| mask_file | Defines the masking region and varies by -type |
| out_file | Output NetCDF mask file to be written |
| [-type string] | Masking type: poly, box, circle, track, grid, data, solar_alt, solar_azi, lat, lon, shape |
| [-input_field string] | Define field from input_file for grid point initialization values, rather than 0. |
| [-mask_field string] | Define field from mask_file for data masking. |
| [-complement, -union, -intersection, -symdiff] | Set logic for combining input_field initialization values with the current mask values. |
| [-thresh string] | Define threshold for circle, track, data, solar_alt, solar_azi, lat, and lon masking types. |
| [-height n, -width n] | Define dimensions for box masking. |
| [-shapeno n] | Define the index of the shape for shapefile masking. |
| [-value n] | Output mask value to be written, rather than 1. |
| [-name str] | Specifies the name to be used for the mask. |
| [-log file] | Outputs log messages to the specified file |
| [-v level] | Level of logging |
| [-compress level] | NetCDF compression level |

At a minimum, the input_file, the mask_file (a polyline file in the example below), and the out_file must be passed on the command line.

Gen-Vx-Mask Tool: Run

Start by making an output directory for Gen-Vx-Mask and changing directories:

mkdir -p ${HOME}/metplus_tutorial/output/met_output/gen_vx_mask

cd ${HOME}/metplus_tutorial/output/met_output/gen_vx_mask

Since Gen-Vx-Mask performs a simple masking step, no configuration file is needed.

We'll run the Gen-Vx-Mask tool to apply a polyline for the CONUS (Contiguous United States) to our model domain. Run Gen-Vx-Mask on the command line using the following command:

gen_vx_mask \
  /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_obs/ST2ml/ST2ml2005080712.Grb_G212 \
  /classroom/wrfhelp/MET/8.0/met-8.0/data/poly/CONUS.poly \
  CONUS_mask.nc \
  -v 2

Re-run using verbosity level 3 and look closely at the log messages. How many grid points were included in this mask?

Gen-Vx-Mask should run very quickly since the grid is coarse (185x129 points) and there are 244 lat/lon points in the CONUS polyline. The more you increase the grid resolution and number of polyline points, the longer it will take to run. View the NetCDF bitmap file generated by executing the following command:

ncview CONUS_mask.nc &

Notice that the bitmap has a value of 1 inside the CONUS polyline and 0 everywhere else. We'll use the CONUS mask we just defined in the next step.

You could try running plot_data_plane to create a PostScript image of this masking region. Can you remember how?

Notice that there are several ways that gen_vx_mask can be run to define regions of interest, including the use of Shapefiles!

MET Tool: Grid-Stat

Grid-Stat Functionality

The Grid-Stat tool provides verification statistics for a matched forecast and observation grid. If the forecast and observation grids do not match, the regrid section of the configuration file controls how the data can be interpolated to a common grid. All of the forecast gridpoints in each spatial verification region of interest are matched to observation gridpoints. The matched gridpoints within each verification region are used to compute the verification statistics.

The output statistics generated by Grid-Stat include continuous partial sums and statistics, vector partial sums and statistics, categorical tables and statistics, probabilistic tables and statistics, neighborhood statistics, and gradient statistics. The computation and output of these various statistics types is controlled by the output_flag in the configuration file.
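As an illustration of the categorical statistics, the Python 3 sketch below builds a 2x2 contingency table for one threshold from matched forecast and observation values, optionally keeping only points where a 1/0 mask is 1, and then computes the frequency bias (FBIAS) and critical success index (CSI), two of the statistics MET reports in its CTS output. The function names, the simple threshold parser, and the six matched pairs are hypothetical; Grid-Stat itself does much more than this.

```python
import operator

# Two-character symbols are listed first so ">=" is not read as ">".
THRESH_OPS = [(">=", operator.ge), ("<=", operator.le), ("==", operator.eq),
              (">", operator.gt), ("<", operator.lt)]


def parse_thresh(text):
    """Turn a threshold such as '>=5.0' into (comparison, value)."""
    for symbol, op in THRESH_OPS:
        if text.startswith(symbol):
            return op, float(text[len(symbol):])
    raise ValueError("unsupported threshold: " + text)


def contingency(fcst, obs, thresh, mask=None):
    """Count hits, false alarms, misses, and correct negatives."""
    op, value = parse_thresh(thresh)
    mask = mask if mask is not None else [1] * len(fcst)
    hits = false_alarms = misses = correct_negs = 0
    for f, o, m in zip(fcst, obs, mask):
        if not m:
            continue  # point lies outside the verification region
        f_yes, o_yes = op(f, value), op(o, value)
        if f_yes and o_yes:
            hits += 1
        elif f_yes:
            false_alarms += 1
        elif o_yes:
            misses += 1
        else:
            correct_negs += 1
    return hits, false_alarms, misses, correct_negs


def fbias_csi(hits, false_alarms, misses):
    """Frequency bias and critical success index from table counts."""
    obs_yes = hits + misses
    fbias = (hits + false_alarms) / obs_yes if obs_yes else float("nan")
    denom = hits + false_alarms + misses
    csi = hits / denom if denom else float("nan")
    return fbias, csi


fcst = [0.0, 2.0, 6.0, 12.0, 0.0, 7.0]  # hypothetical 12-h APCP (mm)
obs = [0.0, 0.0, 5.0, 8.0, 3.0, 11.0]
for thresh in [">0.0", ">=5.0", ">=10.0"]:
    table = contingency(fcst, obs, thresh)
    print(thresh, table, fbias_csi(*table[:3]))
```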

Grid-Stat Usage

View the usage statement for Grid-Stat by simply typing the following:

grid_stat

| Usage: grid_stat | |
| --- | --- |
| fcst_file | Input gridded forecast file containing the field(s) to be verified. |
| obs_file | Input gridded observation file containing the verifying field(s). |
| config_file | GridStatConfig file containing the desired configuration settings. |
| [-outdir path] | Overrides the default output directory (optional). |
| [-log file] | Outputs log messages to the specified file |
| [-v level] | Level of logging (optional). |
| [-compress level] | NetCDF compression level (optional). |

The forecast and observation fields must be on the same grid. You can use copygb to regrid GRIB1 files, wgrib2 to regrid GRIB2 files, or the automated regridding within the regrid section of the MET config files.

At a minimum, the input gridded fcst_file, the input gridded obs_file, and the configuration config_file must be passed in on the command line.

Grid-Stat Tool: Configure

Start by making an output directory for Grid-Stat and changing directories:

mkdir -p ${HOME}/metplus_tutorial/output/met_output/grid_stat

cd ${HOME}/metplus_tutorial/output/met_output/grid_stat

The behavior of Grid-Stat is controlled by the contents of the configuration file passed to it on the command line. The default Grid-Stat configuration file may be found in the data/config/GridStatConfig_default file. Prior to modifying the configuration file, users are advised to make a copy of the default:

cp /classroom/wrfhelp/MET/8.0/met-8.0/data/config/GridStatConfig_default GridStatConfig_tutorial

Open up the GridStatConfig_tutorial file for editing with your preferred text editor.

The configurable items for Grid-Stat are used to specify how the verification is to be performed. The configurable items include specifications for the following:

- The forecast fields to be verified at the specified vertical level or accumulation interval

- The threshold values to be applied

- The areas over which to aggregate statistics - as predefined grids, configurable lat/lon polylines, or gridded data fields

- The confidence interval methods to be used

- The smoothing methods to be applied (as opposed to interpolation methods)

- The types of verification methods to be used

You may find a complete description of the configurable items in section 8.3.2 of the MET User's Guide. Please take some time to review them.

For this tutorial, we'll configure Grid-Stat to verify the 12-hour accumulated precipitation output of PCP-Combine. We'll be using Grid-Stat to verify a single field using NetCDF input for both the forecast and observation files. However, Grid-Stat may in general be used to verify an arbitrary number of fields. Edit the GridStatConfig_tutorial file as follows:

- Set:

fcst = { wind_thresh = [ NA ]; field = [ { name = "APCP_12"; level = [ "(*,*)" ]; cat_thresh = [ >0.0, >=5.0, >=10.0 ]; } ]; } obs = fcst;

To verify the field of precipitation accumulated over 12 hours using the 3 thresholds specified.

- Set:

mask = { grid = []; poly = [ "../gen_vx_mask/CONUS_mask.nc", "MET_BASE/poly/NWC.poly", "MET_BASE/poly/SWC.poly", "MET_BASE/poly/GRB.poly", "MET_BASE/poly/SWD.poly", "MET_BASE/poly/NMT.poly", "MET_BASE/poly/SMT.poly", "MET_BASE/poly/NPL.poly", "MET_BASE/poly/SPL.poly", "MET_BASE/poly/MDW.poly", "MET_BASE/poly/LMV.poly", "MET_BASE/poly/GMC.poly", "MET_BASE/poly/APL.poly", "MET_BASE/poly/NEC.poly", "MET_BASE/poly/SEC.poly" ]; }

To accumulate statistics over the Continental United States (CONUS) and the 14 NCEP verification regions in the United States defined by the polylines specified. To see a plot of these regions, execute the following command:

okular /classroom/wrfhelp/MET/8.0/share/met/poly/ncep_vx_regions.pdf &

- In the boot dictionary, set:

n_rep = 500;

To turn on the computation of bootstrap confidence intervals using 500 replicates.

- In the nbrhd dictionary, set:

width = [ 3, 5 ]; cov_thresh = [ >=0.5, >=0.75 ];

To define two neighborhood sizes and two fractional coverage field thresholds.

- Set:

output_flag = { fho = NONE; ctc = BOTH; cts = BOTH; mctc = NONE; mcts = NONE; cnt = BOTH; sl1l2 = BOTH; sal1l2 = NONE; vl1l2 = NONE; val1l2 = NONE; pct = NONE; pstd = NONE; pjc = NONE; prc = NONE; eclv = NONE; nbrctc = BOTH; nbrcts = BOTH; nbrcnt = BOTH; grad = BOTH; }

To compute contingency table counts (CTC), contingency table statistics (CTS), continuous statistics (CNT), scalar partial sums (SL1L2), neighborhood contingency table counts (NBRCTC), neighborhood contingency table statistics (NBRCTS), neighborhood continuous statistics (NBRCNT), and gradient statistics (GRAD).
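To make the role of cat_thresh concrete, the Python sketch below shows how one threshold such as >=5.0 sorts forecast/observation pairs into the four counts reported by the CTC line type (the variable names follow the CTC columns FY_OY, FY_ON, FN_OY, and FN_ON). The precipitation values are made up, and the sketch ignores the missing-data handling and masking that Grid-Stat performs; it illustrates the idea rather than MET's code.

```python
import operator

# Threshold prefixes used in cat_thresh, mapped to comparison functions.
OPS = {">=": operator.ge, "<=": operator.le, "==": operator.eq,
       "!=": operator.ne, ">": operator.gt, "<": operator.lt}

def parse_thresh(text):
    """Split a threshold string such as '>=5.0' into (function, value)."""
    # Check two-character prefixes first so '>=' is not read as '>'.
    for prefix in (">=", "<=", "==", "!=", ">", "<"):
        if text.startswith(prefix):
            return OPS[prefix], float(text[len(prefix):])
    raise ValueError(f"unsupported threshold: {text}")

def contingency_counts(fcst, obs, thresh):
    """Return (FY_OY, FY_ON, FN_OY, FN_ON) counts for one threshold."""
    op, value = parse_thresh(thresh)
    fy_oy = fy_on = fn_oy = fn_on = 0
    for f, o in zip(fcst, obs):
        f_event, o_event = op(f, value), op(o, value)
        if f_event and o_event:
            fy_oy += 1   # hit
        elif f_event:
            fy_on += 1   # false alarm
        elif o_event:
            fn_oy += 1   # miss
        else:
            fn_on += 1   # correct negative
    return fy_oy, fy_on, fn_oy, fn_on

# Hypothetical 12-hour precipitation pairs (mm) at six grid points.
fcst = [0.0, 2.5, 6.0, 12.0, 7.5, 0.0]
obs = [0.0, 0.0, 5.5, 9.0, 3.0, 1.0]
for t in (">0.0", ">=5.0", ">=10.0"):
    print(t, contingency_counts(fcst, obs, t))
```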

Grid-Stat Tool: Run

Next, run Grid-Stat on the command line using the following command:

../pcp_combine/sample_fcst_12L_2005080712V_12A.nc \

../pcp_combine/sample_obs_12L_2005080712V_12A.nc \

GridStatConfig_tutorial \

-outdir . \

-v 2

Grid-Stat is now performing the verification tasks we requested in the configuration file. It should take a minute or two to run. The status messages written to the screen indicate progress.

In this example, Grid-Stat performs several verification tasks in evaluating the 12-hour accumulated precipitation field:

- For continuous statistics and partial sums (CNT and SL1L2), 15 output lines each:

(1 field * 15 masking regions)

- For contingency table counts and statistics (CTC and CTS), 45 output lines each:

(1 field * 3 raw thresholds * 15 masking regions)

- For neighborhood methods (NBRCNT, NBRCTC, and NBRCTS), 90 output lines each:

(1 field * 3 raw thresholds * 2 neighborhood sizes * 15 masking regions)
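The line counts above are simply products of the configured dimensions. A small Python check of that arithmetic, using the values from this tutorial's configuration:

```python
# Dimensions taken from GridStatConfig_tutorial in this exercise.
n_fields = 1
n_thresh = 3       # cat_thresh = [ >0.0, >=5.0, >=10.0 ]
n_widths = 2       # nbrhd width = [ 3, 5 ]
n_masks = 1 + 14   # CONUS mask plus the 14 NCEP verification regions

expected_lines = {
    "CNT/SL1L2": n_fields * n_masks,
    "CTC/CTS": n_fields * n_thresh * n_masks,
    "NBRCNT/NBRCTC/NBRCTS": n_fields * n_thresh * n_widths * n_masks,
}
for line_type, count in expected_lines.items():
    print(f"{line_type}: {count} lines each")
```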

To greatly increase the runtime performance of Grid-Stat, you could disable the computation of bootstrap confidence intervals in the configuration file. Edit the GridStatConfig_tutorial file as follows:

- In the boot dictionary, set:

n_rep = 0;

To disable the computation of bootstrap confidence intervals.

Now, try rerunning the Grid-Stat command listed above and notice how much faster it runs. While bootstrap confidence intervals are nice to have, they take a long time to compute, especially for gridded data.
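The cost comes from the resampling loop: each of the n_rep replicates resamples the matched pairs with replacement and recomputes the statistic. Below is a minimal Python sketch of a percentile bootstrap interval for the mean error; with n_rep = 0 it returns no interval, mirroring how that setting disables bootstrap confidence intervals. The function, seed, and error values are hypothetical, and this is not MET's implementation.

```python
import random

def bootstrap_ci(errors, n_rep=500, alpha=0.05, seed=1):
    """Percentile bootstrap confidence interval for the mean of `errors`."""
    if n_rep <= 0:
        return None  # like n_rep = 0 in the boot dictionary: no bootstrap CI
    rng = random.Random(seed)  # fixed seed so repeated runs agree
    n = len(errors)
    means = []
    for _ in range(n_rep):
        # Resample the pairs with replacement and recompute the statistic.
        sample = [errors[rng.randrange(n)] for _ in range(n)]
        means.append(sum(sample) / n)
    means.sort()
    lo_idx = int(alpha / 2 * n_rep)
    hi_idx = min(n_rep - 1, int((1 - alpha / 2) * n_rep))
    return means[lo_idx], means[hi_idx]

# Hypothetical forecast-minus-observation errors (mm).
errors = [0.5, -1.2, 2.0, 0.3, -0.4, 1.1, 0.0, 0.8, -0.6, 1.5]
print(bootstrap_ci(errors, n_rep=500))
print(bootstrap_ci(errors, n_rep=0))
```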

Grid-Stat Tool: Output

The output of Grid-Stat is one or more ASCII files containing statistics summarizing the verification performed and a NetCDF file containing difference fields. In this example, the output is written to the current directory, as we requested on the command line. It should now contain 10 Grid-Stat output files beginning with the grid_stat_ prefix, one each for the CTC, CTS, CNT, SL1L2, GRAD, NBRCTC, NBRCTS, and NBRCNT ASCII files, a STAT file, and a NetCDF matched pairs file.

The format of the CTC, CTS, CNT, and SL1L2 ASCII files will be covered for the Point-Stat tool. The neighborhood method and gradient output are unique to the Grid-Stat tool.

- Rather than comparing forecast/observation values at individual grid points, the neighborhood method compares areas of forecast values to areas of observation values. At each grid box, a fractional coverage value is computed for each field as the fraction of grid points within the neighborhood (centered on the current grid point) that meet the specified raw threshold. The forecast and observation fractional coverage values are then compared, rather than the raw values themselves.

- Gradient statistics are computed on the forecast and observation gradients in the X-direction.
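The neighborhood calculation described above can be sketched in Python as follows. This version assumes a square neighborhood `width` points on a side, centered on each grid point, and simply skips neighbors that fall off the edge of the grid; MET has its own rules for edges and missing data, so treat this as an illustration under those assumptions. The last step applies a coverage threshold such as cov_thresh >=0.5 to the fractions.

```python
def fractional_coverage(field, thresh, width):
    """Fraction of points in a width x width box meeting `thresh`, per grid point."""
    ny, nx = len(field), len(field[0])
    half = width // 2
    frac = [[0.0] * nx for _ in range(ny)]
    for j in range(ny):
        for i in range(nx):
            hits = total = 0
            for jj in range(j - half, j + half + 1):
                for ii in range(i - half, i + half + 1):
                    if 0 <= jj < ny and 0 <= ii < nx:  # skip points off the grid
                        total += 1
                        hits += field[jj][ii] >= thresh  # count neighbors meeting the threshold
            frac[j][i] = hits / total
    return frac

# Hypothetical 4x4 precipitation field (mm), >=5.0 raw threshold, width 3.
fcst = [
    [0.0, 0.0, 6.0, 8.0],
    [0.0, 5.0, 7.0, 2.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
frac = fractional_coverage(fcst, 5.0, 3)
# Apply a coverage threshold (cov_thresh >=0.5) to turn fractions into yes/no events.
events = [[f >= 0.5 for f in row] for row in frac]
print(frac[1][2], events[0][3])
```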

Since the lines of data in these ASCII files are so long, we strongly recommend configuring your text editor to NOT use dynamic word wrapping. The files will be much easier to read that way.

Execute the following command to view the NetCDF output of Grid-Stat:

Click through the 2d vars variable names in the ncview window to see plots of the forecast, observation, and difference fields for each masking region. If you see a warning message about the min/max values being zero, just click OK.

Now dump the NetCDF header:

View the NetCDF header to see how the variable names are defined.

Notice how *MANY* variables there are: separate output for each of the masking regions defined. Try editing the config file again by setting apply_mask = FALSE; and gradient = TRUE; in the nc_pairs_flag dictionary. Re-run Grid-Stat and inspect the output NetCDF file. What effect did these changes have?

METplus Motivation

We have now successfully run the PCP-Combine and Grid-Stat tools to verify 12-hourly accumulated precipitation for a single output time. We performed the following steps:

- Identified our forecast and observation datasets.

- Constructed PCP-Combine commands to put them into a common accumulation interval.

- Configured and ran Grid-Stat to compute our desired verification statistics.

Now that we've defined the logic for a single run, the next step would be writing a script to automate these steps for many model initializations and forecast lead times. Rather than every MET user rewriting the same type of scripts, use METplus to automate these steps in a use case!

METplus Use Case: Grid to Grid Anomaly

The Grid to Grid Anomaly use case utilizes the MET grid_stat tool.

Optional: Refer to sections 4.1 and 7.3 of the MET User's Guide for a description of the MET tools used in this use case.

Optional: Refer to section 4.4 (A-Z Config Glossary) of the METplus 2.0.4 Users Guide for a reference to METplus variables used in this use case.

Setup

Edit Custom Configuration File

Define a unique directory under output that you will use for this use case.

Edit ${HOME}/METplus/parm/mycustom.conf

Change OUTPUT_BASE to contain a subdirectory specific to the Grid to Grid use case.

... other entries ...

OUTPUT_BASE = {ENV[HOME]}/metplus_tutorial/output/grid_to_grid

Using your custom configuration file that you created in the METplus: CUSTOM Configuration File Settings section and the Grid to Grid use case configuration files that are distributed with METplus, you should be able to run the use case using the sample input data set without any other changes.

Review Use Case Configuration File: anom.conf

Open the file and look at all of the configuration variables that are defined.

Note that variables in anom.conf reference other config variables that have been defined in your mycustom.conf configuration file. For example:

This references INPUT_BASE which is set in your custom config file. METplus config variables can reference other config variables even if they are defined in a config file that is read afterwards.
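References can point to variables from a file read later because they are resolved only after all config files have been merged. The Python sketch below illustrates that idea with the `{NAME}` and `{ENV[NAME]}` reference syntax seen in this tutorial; it is not the METplus implementation, and EXAMPLE_FCST_DIR and the directory values are hypothetical.

```python
import os
import re

# Matches {ENV[NAME]} (environment variable) or {NAME} (another config variable).
REF = re.compile(r"\{(ENV\[(\w+)\]|(\w+))\}")

def merge(*configs):
    """Merge config dicts in the order read; later files override earlier ones."""
    merged = {}
    for cfg in configs:
        merged.update(cfg)
    return merged

def resolve(name, merged, seen=()):
    """Expand {VAR} and {ENV[VAR]} references in merged[name], recursively."""
    if name in seen:
        raise ValueError(f"circular reference involving {name}")

    def substitute(match):
        env_name, var_name = match.group(2), match.group(3)
        if env_name:
            return os.environ[env_name]
        return resolve(var_name, merged, seen + (name,))

    return REF.sub(substitute, merged[name])

# Hypothetical use-case file read first, custom file read second.
use_case = {"EXAMPLE_FCST_DIR": "{INPUT_BASE}/grid_to_grid/gfs/fcst"}
custom = {"INPUT_BASE": "/data/metplus_input",
          "OUTPUT_BASE": "{ENV[HOME]}/metplus_tutorial/output/grid_to_grid"}
conf = merge(use_case, custom)
# INPUT_BASE is defined in the file read later, yet the reference still resolves.
print(resolve("EXAMPLE_FCST_DIR", conf))
```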

Run METplus

Run the following command:

METplus is finished running when control returns to your terminal console.

Review the Output Files

You should have output files in the following directories:

- grid_stat_GFS_vs_ANLYS_240000L_20170613_000000V.stat

- grid_stat_GFS_vs_ANLYS_480000L_20170613_000000V.stat

- grid_stat_GFS_vs_ANLYS_240000L_20170613_060000V.stat

- grid_stat_GFS_vs_ANLYS_480000L_20170613_060000V.stat
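Each file name encodes the forecast lead (HHMMSS followed by L) and the valid time (YYYYMMDD_HHMMSS followed by V). The Python sketch below rebuilds the four names listed above from the two valid times and two lead times in this use case, giving a simplified picture of the time looping that METplus automates; the helper function is hypothetical, and the name layout is copied from the listing.

```python
from datetime import datetime, timedelta

def grid_stat_stat_name(model, obtype, lead, valid):
    """Build a Grid-Stat .stat file name from a lead timedelta and a valid datetime."""
    hh, rem = divmod(int(lead.total_seconds()), 3600)
    mm, ss = divmod(rem, 60)
    return (f"grid_stat_{model}_vs_{obtype}_"
            f"{hh:02d}{mm:02d}{ss:02d}L_{valid:%Y%m%d_%H%M%S}V.stat")

valid_times = [datetime(2017, 6, 13, 0), datetime(2017, 6, 13, 6)]
leads = [timedelta(hours=24), timedelta(hours=48)]

names = []
for valid in valid_times:
    for lead in leads:
        init = valid - lead  # initialization time implied by this valid/lead pair
        names.append(grid_stat_stat_name("GFS", "ANLYS", lead, valid))
        print(names[-1], "init:", init.strftime("%Y%m%d%H"))
```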

Take a look at some of the files to see what was generated.

Review the Log Files

Log files for this run are found in $HOME/metplus_tutorial/output/grid_to_grid/logs. The filename contains a timestamp of the current day.

NOTE: If you ran METplus on a different day than today, the log file will correspond to the day you ran. Remove the date command and replace it with the date you ran if that is the case.

Review the Final Configuration File

The final configuration file is $HOME/metplus_tutorial/output/grid_to_grid/metplus_final.conf. This contains all of the configuration variables used in the run.

End of Practical Session 1: Additional Exercises

Congratulations! You have completed Session 1!

If you have extra time, you may want to try these additional MET exercises:

- Run Gen-Vx-Mask to create a mask for Eastern or Western United States using the polyline files in the data/poly directory. Re-run Grid-Stat using the output of Gen-Vx-Mask.

- Run Gen-Vx-Mask to exercise all the other masking types available.

- Reconfigure and re-run Grid-Stat with the fourier dictionary defined and the grad output line type enabled.

If you have extra time, you may want to try these additional METplus exercises. The answers are found on the next page.

EXERCISE 1.1: add_rh - Add another field to grid_stat

Instructions: Modify the METplus configuration files to add relative humidity (RH) at pressure levels 500 and 250 (P500 and P250) to the output.

Copy your custom configuration file and rename it to mycustom.add_rh.conf for this exercise.

Open anom.conf to remind yourself how fields are defined in METplus.

Open mycustom.add_rh.conf with an editor and add the extra information.

HINT: The variables that you need to add must go under the [config] section.

You should also change OUTPUT_BASE to a new location so you can keep it separate from the other runs.

OUTPUT_BASE = {ENV[HOME]}/metplus_tutorial/output/exercises/add_rh

Rerun master_metplus, passing in your new custom config file for this exercise.

EXERCISE 1.2: log_test - Change the Logging Settings

Instructions: Modify the METplus configuration files to change the logging settings to see what is available.

cp $HOME/METplus/parm/mycustom.conf $HOME/METplus/parm/mycustom.log_test.conf

You should change OUTPUT_BASE to a new location so you can keep it separate from the other runs.

OUTPUT_BASE = {ENV[HOME]}/metplus_tutorial/output/exercises/log_test

Separate METplus Logs from MET Logs

[config]

LOG_MET_OUTPUT_TO_METPLUS = no

For this use case, two log files will be created: master_metplus.log.YYYYMMDD and grid_stat.log_YYYYMMDD

Use Time of Data Instead of Current Time

[config]

LOG_TIMESTAMP_USE_DATATIME = yes

For this use case, VALID_BEG = 2017061300, so the log files will be named like master_metplus.log.20170613 instead of using today's date.

Change Format of Time in Logfile Names

[config]

LOG_TIMESTAMP = %Y%m%d%H%M

For this use case, the log files will have the format: master_metplus.log.YYYYMMDDHHMM
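The LOG_TIMESTAMP value is a strftime-style format string, so %Y%m%d%H%M expands to a 12-digit year-month-day-hour-minute stamp. The Python sketch below shows how LOG_TIMESTAMP and LOG_TIMESTAMP_USE_DATATIME would combine to build a log file name; the helper function is hypothetical, and %Y%m%d as the format behind the default YYYYMMDD names is an assumption.

```python
from datetime import datetime

def log_filename(prefix, log_timestamp, use_datatime, valid_beg, now=None):
    """Hypothetical helper: stamp the log name with the data time or the current time."""
    stamp_time = valid_beg if use_datatime else (now or datetime.now())
    return f"{prefix}.{stamp_time.strftime(log_timestamp)}"

valid_beg = datetime.strptime("2017061300", "%Y%m%d%H")  # VALID_BEG
print(log_filename("master_metplus.log", "%Y%m%d", True, valid_beg))
print(log_filename("master_metplus.log", "%Y%m%d%H%M", True, valid_beg))
```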

Rerun master_metplus, passing in your new custom config file for this exercise:

master_metplus.py -c use_cases/grid_to_grid/examples/anom.conf -c mycustom.log_test.conf

ls $HOME/metplus_tutorial/output/exercises/log_test/logs

Look at metplus_config/metplus_logging.conf to see other logging configurations you can change and test them out!

Answers to Exercises from Session 1


These are the answers to the exercises from the previous page. Feel free to ask a MET representative if you have any questions!

ANSWER 1.1: add_rh - Add another field to grid_stat

Instructions: Modify the METplus configuration files to add relative humidity (RH) at pressure levels 500 and 250 (P500 and P250) to the output.

FCST_VAR5_NAME = RH

FCST_VAR5_LEVELS = P500, P250

Session 2: Grid-to-Obs

METplus Practical Session 2

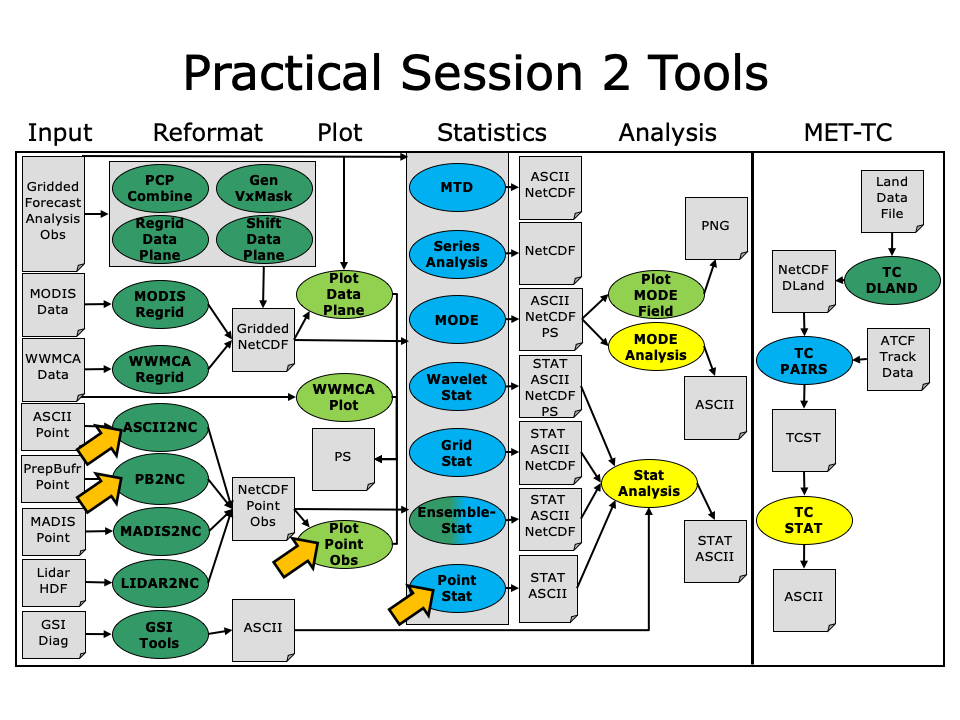

During the second METplus practical session, you will run the tools indicated below:

During this practical session, please work on the Session 2 exercises. You may navigate through this tutorial by following the arrows in the bottom-right or by using the left-hand menu navigation.


Since you already set up your runtime environment in Session 1, you should be ready to go!

The MET Users Guide can be viewed here:

okular /classroom/wrfhelp/MET/8.0/met-8.0/doc/MET_Users_Guide.pdf &

MET Tool: PB2NC

PB2NC Tool: General

PB2NC Functionality

The PB2NC tool is used to stratify (i.e. subset) the contents of an input PrepBufr point observation file and reformat it into NetCDF format for use by the Point-Stat or Ensemble-Stat tool. In this session, we will run PB2NC on a PrepBufr point observation file prior to running Point-Stat. Observations may be stratified by variable type, PrepBufr message type, station identifier, a masking region, elevation, report type, vertical level category, quality mark threshold, and level of PrepBufr processing. Stratification is controlled by a configuration file and discussed on the next page.

The PB2NC tool may be run on both PrepBufr and Bufr observation files. As of met-6.1, support for Bufr is limited to files containing embedded tables. Support for Bufr files using external tables will be added in a future release.

For more information about the PrepBufr format, visit:

http://www.emc.ncep.noaa.gov/mmb/data_processing/prepbufr.doc/document.htm

For information on where to download PrepBufr files, visit:

http://www.dtcenter.org/met/users/downloads/observation_data.php

PB2NC Usage

View the usage statement for PB2NC by simply typing the following:

Usage: pb2nc

| Argument | Description |
| --- | --- |
| prepbufr_file | input prepbufr path/filename |
| netcdf_file | output netcdf path/filename |
| config_file | configuration path/filename |
| [-pbfile prepbufr_file] | additional input files |
| [-valid_beg time] | Beginning of valid time window [YYYYMMDD_[HH[MMSS]]] |
| [-valid_end time] | End of valid time window [YYYYMMDD_[HH[MMSS]]] |
| [-nmsg n] | Number of PrepBufr messages to process |
| [-index] | List available observation variables by message type (no output file) |
| [-dump path] | Dump entire contents of PrepBufr file to directory |
| [-log file] | Outputs log messages to the specified file |
| [-v level] | Level of logging |
| [-compression level] | NetCDF file compression |

At a minimum, the input prepbufr_file, the output netcdf_file, and the configuration config_file must be passed in on the command line. Also, you may use the -pbfile command line argument to run PB2NC using multiple input PrepBufr files, likely adjacent in time.

When running PB2NC on a new dataset, users are advised to run with the -index option to list the observation variables that are present in that file.

PB2NC Tool: Configure


Start by making an output directory for PB2NC and changing directories:

cd ${HOME}/metplus_tutorial/output/met_output/pb2nc

The behavior of PB2NC is controlled by the contents of the configuration file passed to it on the command line. The default PB2NC configuration may be found in the data/config/PB2NCConfig_default file. Prior to modifying the configuration file, users are advised to make a copy of the default:

Open up the PB2NCConfig_tutorial_run1 file for editing with your preferred text editor.

The configurable items for PB2NC specify which PrepBufr observations should be retained or derived. You may find a complete description of the configurable items in section 4.1.2 of the MET User's Guide or in the configuration README file.

For this tutorial, edit the PB2NCConfig_tutorial_run1 file as follows:

- Set:

message_type = [ "ADPUPA", "ADPSFC" ];

To retain only those 2 message types. Message types are described in:

http://www.emc.ncep.noaa.gov/mmb/data_processing/prepbufr.doc/table_1.htm

- Set:

obs_window = {

beg = -30*60;

end = 30*60;

}

So that only observations within 30 minutes of the file time will be retained.

- Set:

mask = {

grid = "G212";

poly = "";

}

To retain only those observations residing within NCEP Grid 212, on which the forecast data resides.

- Set:

obs_bufr_var = [ "QOB", "TOB", "UOB", "VOB", "D_WIND", "D_RH" ];

To retain observations for specific humidity, temperature, the u-component of wind, and the v-component of wind and to derive observation values for wind speed and relative humidity.

While we request these observation variable names from the input file, the following corresponding strings will be written to the output file: SPFH, TMP, UGRD, VGRD, WIND, RH. This mapping of input PrepBufr variable names to output variable names is specified by the obs_prepbufr_map config file entry. It allows the new features in the current version of MET to remain backward compatible with earlier versions.
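Conceptually, obs_prepbufr_map is a lookup table from input PrepBufr names to output names. The Python dictionary below reproduces the six pairs listed above; the dictionary form, the helper function, and the choice to pass unmapped names through unchanged are illustrative assumptions, not MET's config syntax or behavior.

```python
# Input PrepBufr names -> output names, as listed in the text above.
OBS_PREPBUFR_MAP = {
    "QOB": "SPFH", "TOB": "TMP", "UOB": "UGRD",
    "VOB": "VGRD", "D_WIND": "WIND", "D_RH": "RH",
}

def output_names(obs_bufr_var):
    """Return output variable names; unmapped names pass through (an assumption)."""
    return [OBS_PREPBUFR_MAP.get(name, name) for name in obs_bufr_var]

print(output_names(["QOB", "TOB", "UOB", "VOB", "D_WIND", "D_RH"]))
```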

Next, save the PB2NCConfig_tutorial_run1 file and exit the text editor.

PB2NC Tool: Run


Next, run PB2NC on the command line using the following command:

/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_obs/prepbufr/ndas.t00z.prepbufr.tm12.20070401.nr \

tutorial_pb_run1.nc \

PB2NCConfig_tutorial_run1 \

-v 2

PB2NC is now filtering the observations from the PrepBufr file using the configuration settings we specified and writing the output to the NetCDF file name we chose. This should take a few minutes to run. As it runs, you should see several status messages printed to the screen to indicate progress. You may use the -v command line option to turn off (-v 0) or change the amount of log information printed to the screen.

Inspect the PB2NC status messages and note that 69833 PrepBufr messages were filtered down to 8394 messages which produced 52491 actual and derived observations. If you'd like to filter down the observations further, you may want to narrow the time window or modify other filtering criteria. We will do that after inspecting the resultant NetCDF file.

PB2NC Tool: Output


When PB2NC is finished, you may view the output NetCDF file it wrote using the ncdump utility. Run the following command to view the header of the NetCDF output file:

In the NetCDF header, you'll see that the file contains nine dimensions and nine variables. The obs_arr variable contains the actual observation values. The obs_qty variable contains the corresponding quality flags. The four header variables (hdr_typ, hdr_sid, hdr_vld, hdr_arr) contain information about the observing locations.

The obs_var, obs_unit, and obs_desc variables describe the observation variables contained in the output. The second entry of the obs_arr variable (i.e. var_id) is the index into these arrays for each observation. For example, for observations of temperature, you'd see TMP in obs_var, KELVIN in obs_unit, and TEMPERATURE OBSERVATION in obs_desc. For temperature observations in obs_arr, the second entry (var_id) would hold the index of that temperature information.
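The var_id indirection works like an index into a lookup table. In this Python sketch only the role of the second obs_arr entry (var_id) and the TMP/KELVIN/TEMPERATURE OBSERVATION strings come from the text above; the other array contents, including the remaining row entries, are hypothetical placeholders.

```python
# Hypothetical contents of the obs_var, obs_unit, and obs_desc arrays.
obs_var = ["SPFH", "TMP", "UGRD"]
obs_unit = ["KG/KG", "KELVIN", "M/S"]
obs_desc = ["SPECIFIC HUMIDITY OBSERVATION", "TEMPERATURE OBSERVATION",
            "U-COMPONENT OF WIND OBSERVATION"]

# Hypothetical obs_arr rows: [header index, var_id, level, height, value].
obs_arr = [
    [0, 1, 850.0, 1500.0, 280.2],
    [0, 2, 850.0, 1500.0, 5.1],
    [1, 1, 500.0, 5600.0, 255.7],
]

def describe(row):
    """Use the second entry (var_id) to look up the variable's name, unit, and description."""
    var_id = int(row[1])
    return obs_var[var_id], obs_unit[var_id], obs_desc[var_id], row[-1]

for row in obs_arr:
    print(describe(row))
```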

Inspect the output of ncdump before continuing.

Plot-Point-Obs

The plot_point_obs tool plots the location of these NetCDF point observations. Just like plot_data_plane is useful to visualize gridded data, run plot_point_obs to make sure you have point observations where you expect. Run the following command:

tutorial_pb_run1.nc \

tutorial_pb_run1.ps

Display the output PostScript file by running the following command:

Each red dot in the plot represents the location of at least one observation value. The plot_point_obs tool has additional command line options for filtering which observations get plotted and the area to be plotted. View its usage statement by running the following command:

By default, the points are plotted on the full globe. Next, try rerunning plot_point_obs using the -data_file option to specify the grid over which the points should be plotted:

tutorial_pb_run1.nc \

tutorial_pb_run1_zoom.ps \

-data_file /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2007033000/nam.t00z.awip1236.tm00.20070330.grb

MET extracts the grid information from the first record of that GRIB file and plots the points on that domain. Display the output PostScript file by running the following command:

The plot_point_obs tool can be run on the NetCDF output of any of the MET point observation pre-processing tools (pb2nc, ascii2nc, madis2nc, and lidar2nc).

PB2NC Tool: Reconfigure and Rerun


Now we'll rerun PB2NC, but this time we'll tighten the observation acceptance criteria. Start by making a copy of the configuration file we just used:

Open up the PB2NCConfig_tutorial_run2 file and edit it as follows:

- Set:

message_type = [];

To retain all message types.

- Set:

obs_window = {

beg = -25*60;

end = 25*60;

}

So that only observations 25 minutes before and 25 minutes after the top of the hour are retained.

- Set:

quality_mark_thresh = 1;

To retain only the observations marked "Good" by the NCEP quality control system.

Next, run PB2NC again but change the output name using the following command:

/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_obs/prepbufr/ndas.t00z.prepbufr.tm12.20070401.nr \

tutorial_pb_run2.nc \

PB2NCConfig_tutorial_run2 \

-v 2

Inspect the PB2NC status messages and note that 4000 observations were retained rather than 52491 in the previous example. The majority of the observations were rejected because their valid time no longer fell inside the tighter obs_window setting.
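The obs_window beg and end values are offsets in seconds from the reference time, so -30*60 and 30*60 keep observations within 30 minutes on either side, while -25*60 and 25*60 narrow that to 25 minutes. Here is a minimal Python sketch comparing the two windows; the reference time and offsets are hypothetical, and treating both window edges as inclusive is an assumption.

```python
from datetime import datetime, timedelta

def in_obs_window(obs_time, ref_time, beg=-30 * 60, end=30 * 60):
    """True if obs_time lies within [ref_time + beg, ref_time + end] (seconds)."""
    offset = (obs_time - ref_time).total_seconds()
    return beg <= offset <= end

ref = datetime(2007, 3, 31, 12)  # hypothetical reference time
for minutes in (-45, -30, -10, 25, 31):
    t = ref + timedelta(minutes=minutes)
    # Compare the run1 window (30 minutes) with the run2 window (25 minutes).
    print(minutes, in_obs_window(t, ref), in_obs_window(t, ref, -25 * 60, 25 * 60))
```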

When configuring PB2NC for your own projects, you should err on the side of keeping more data rather than less. As you'll see, the grid-to-point verification tools (Point-Stat and Ensemble-Stat) allow you to further refine which point observations are actually used in the verification. However, keeping a lot of point observations that you'll never actually use will make the data files larger and slightly slow down the verification. For example, if you're using a Global Data Assimilation System (GDAS) PREPBUFR file to verify a model over Europe, it would make sense to only keep those point observations that fall within your model domain.

MET Tool: ASCII2NC

ASCII2NC Tool: General

ASCII2NC Functionality

The ASCII2NC tool reformats ASCII point observations into the intermediate NetCDF format that Point-Stat and Ensemble-Stat read. ASCII2NC simply reformats the data and does much less filtering of the observations than PB2NC does. ASCII2NC supports a simple 11-column format, described below, the Little-R format often used in data assimilation, surface radiation (SURFRAD) data, Western Wind and Solar Integration Study (WWSIS) data, and AErosol RObotic NEtwork (Aeronet) data. Future versions of MET may be enhanced to support additional commonly used ASCII point observation formats based on community input.

MET Point Observation Format

The MET point observation format consists of one observation value per line. Each input observation line should consist of the following 11 columns of data:

- Message_Type

- Station_ID

- Valid_Time in YYYYMMDD_HHMMSS format

- Lat in degrees North

- Lon in degrees East

- Elevation in meters above sea level

- Variable_Name for this observation (or GRIB_Code for backward compatibility)

- Level as the pressure level in hPa or accumulation interval in hours

- Height in meters above sea level or above ground level

- QC_String quality control string

- Observation_Value

It is the user's responsibility to get their ASCII point observations into this format.
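Because each observation occupies one whitespace-delimited line, writing and reading this format is straightforward. The Python sketch below formats one observation and parses it back into named columns; the station, values, number formatting, and the NA quality control string are hypothetical choices, not taken from the MET sample data.

```python
from datetime import datetime

COLUMNS = ["Message_Type", "Station_ID", "Valid_Time", "Lat", "Lon", "Elevation",
           "Variable_Name", "Level", "Height", "QC_String", "Observation_Value"]

def format_obs(msg_type, sid, valid, lat, lon, elev, var, level, height, qc, value):
    """Return one 11-column MET point observation line."""
    return " ".join([msg_type, sid, valid.strftime("%Y%m%d_%H%M%S"),
                     f"{lat:.4f}", f"{lon:.4f}", f"{elev:.1f}",
                     var, f"{level:.1f}", f"{height:.1f}", qc, f"{value:.2f}"])

def parse_obs(line):
    """Split an 11-column line into a dict keyed by column name."""
    fields = line.split()
    if len(fields) != len(COLUMNS):
        raise ValueError(f"expected 11 columns, got {len(fields)}")
    return dict(zip(COLUMNS, fields))

# Hypothetical upper-air temperature observation (K) at 850 hPa.
line = format_obs("ADPUPA", "72469", datetime(2007, 3, 30, 0), 39.77, -104.87,
                  1611.0, "TMP", 850.0, 1500.0, "NA", 271.65)
print(line)
print(parse_obs(line)["Valid_Time"])
```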

ASCII2NC Usage

View the usage statement for ASCII2NC by simply typing the following:

Usage: ascii2nc

| Argument | Description |
| --- | --- |
| ascii_file1 [...] | One or more input ASCII paths/filenames |
| netcdf_file | Output NetCDF path/filename |
| [-format ASCII_format] | Set to met_point, little_r, surfrad, wwsis, or aeronet |
| [-config file] | Configuration file to specify how observation data should be summarized |
| [-mask_grid string] | Named grid or a gridded data file for filtering point observations spatially |
| [-mask_poly file] | Polyline masking file for filtering point observations spatially |
| [-mask_sid file\|list] | Specific station IDs to be used, in an ASCII file or a comma-separated list |
| [-log file] | Outputs log messages to the specified file |
| [-v level] | Level of logging |
| [-compress level] | NetCDF compression level |

At a minimum, the input ascii_file and the output netcdf_file must be passed on the command line. ASCII2NC interrogates the data to determine its format, but the user may explicitly set it using the -format command line option. The -mask_grid, -mask_poly, and -mask_sid options can be used to filter observations spatially.

ASCII2NC Tool: Run


Start by making an output directory for ASCII2NC and changing directories:

cd ${HOME}/metplus_tutorial/output/met_output/ascii2nc

Since ASCII2NC performs a simple reformatting step, typically no configuration file is needed. However, when processing high-frequency (1 or 3-minute) SURFRAD data, a configuration file may be used to define a time window and summary metric for each station. For example, you might compute the average observation value +/- 15 minutes at the top of each hour for each station. In this example, we will not use a configuration file.

The sample ASCII observations in the MET tarball are still identified by GRIB code rather than the newer variable name option. Dump that file and notice that the GRIB codes in the seventh column could be replaced by corresponding variable names. For example, 52 corresponds to RH:

Run ASCII2NC on the command line using the following command:

/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_obs/ascii/sample_ascii_obs.txt \

tutorial_ascii.nc \

-v 2

ASCII2NC should perform this reformatting step very quickly since the sample file only contains data for 5 stations.
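The GRIB-code-to-name substitution described earlier (for example, replacing 52 with RH in the seventh column) can be scripted before running ASCII2NC. This Python sketch uses a small, partial NCEP GRIB1 parameter table and a hypothetical input line; codes missing from the table are left unchanged, and the columns are rejoined with single spaces.

```python
# Partial NCEP GRIB1 parameter table (code -> abbreviation).
GRIB1_NAMES = {7: "HGT", 11: "TMP", 17: "DPT", 33: "UGRD", 34: "VGRD",
               51: "SPFH", 52: "RH"}

def replace_grib_code(line):
    """Replace a numeric GRIB code in column 7 with its variable name."""
    fields = line.split()
    code = fields[6]
    if code.isdigit() and int(code) in GRIB1_NAMES:
        fields[6] = GRIB1_NAMES[int(code)]
    return " ".join(fields)  # columns are rejoined with single spaces

# Hypothetical 11-column line carrying GRIB code 52 (relative humidity).
sample = "ADPUPA 72469 20070330_000000 39.77 -104.87 1611 52 850 1500 NA 45.0"
print(replace_grib_code(sample))
```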

ASCII2NC Tool: Output


When ASCII2NC is finished, you may view the output NetCDF file it wrote using the ncdump utility. Run the following command to view the header of the NetCDF output file:

The NetCDF header should look nearly identical to the header of the PB2NC NetCDF output. You can see the list of stations for which we have data by inspecting the hdr_sid_table variable:

Feel free to inspect the contents of the other variables as well.

This ASCII data only contains observations at a few locations. Use plot_point_obs to plot the locations, increasing the level of verbosity to 3 to see more detail:

tutorial_ascii.nc \

tutorial_ascii.ps \

-data_file /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2007033000/nam.t00z.awip1236.tm00.20070330.grb \

-v 3

Next, we'll use the NetCDF output of PB2NC and ASCII2NC to perform Grid-to-Point verification using the Point-Stat tool.

MET Tool: Point-Stat

Point-Stat Tool: General

Point-Stat Functionality

The Point-Stat tool provides verification statistics for comparing gridded forecasts to point observations, as opposed to Grid-Stat, which compares gridded forecasts to gridded analyses. The Point-Stat tool matches gridded forecasts to point observation locations using one or more configurable interpolation methods. The tool then computes a configurable set of verification statistics for these matched pairs. Continuous statistics are computed over the raw matched pair values. Categorical statistics are generally calculated by applying a threshold to the forecast and observation values. Confidence intervals, which represent a measure of uncertainty, are computed for all of the verification statistics.
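As one example of an interpolation method, bilinear interpolation weights the four grid points surrounding an observation by its distance from each. The Python sketch below works in fractional grid-index coordinates and assumes the observation has already been mapped onto the grid and lies inside its interior; it illustrates the technique, not Point-Stat's code, and the temperature values are made up.

```python
import math

def bilinear(field, x, y):
    """Bilinearly interpolate field[j][i] at fractional grid coordinates (x, y).

    Assumes 0 <= x < nx - 1 and 0 <= y < ny - 1 (interior of the grid).
    """
    i0, j0 = int(math.floor(x)), int(math.floor(y))
    dx, dy = x - i0, y - j0
    # Weighted average of the four surrounding grid points.
    return ((1 - dx) * (1 - dy) * field[j0][i0]
            + dx * (1 - dy) * field[j0][i0 + 1]
            + (1 - dx) * dy * field[j0 + 1][i0]
            + dx * dy * field[j0 + 1][i0 + 1])

def nearest(field, x, y):
    """Value at the closest grid point."""
    return field[int(round(y))][int(round(x))]

# Hypothetical 2x2 block of forecast temperatures (K).
temps = [[270.0, 272.0],
         [274.0, 276.0]]
print(bilinear(temps, 0.5, 0.5), nearest(temps, 0.2, 0.9))
```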

Point-Stat Usage

View the usage statement for Point-Stat by simply typing the following:

Usage: point_stat

| Argument | Description |
| --- | --- |
| fcst_file | Input gridded file path/name |
| obs_file | Input NetCDF observation file path/name |
| config_file | Configuration file |
| [-point_obs file] | Additional NetCDF observation files to be used (optional) |
| [-obs_valid_beg time] | Sets the beginning of the matching time window in YYYYMMDD[_HH[MMSS]] format (optional) |
| [-obs_valid_end time] | Sets the end of the matching time window in YYYYMMDD[_HH[MMSS]] format (optional) |
| [-outdir path] | Overrides the default output directory (optional) |
| [-log file] | Outputs log messages to the specified file (optional) |
| [-v level] | Level of logging (optional) |

At a minimum, the input gridded fcst_file, the input NetCDF obs_file (the output of PB2NC, ASCII2NC, MADIS2NC, or LIDAR2NC; the last two are not covered in these exercises), and the configuration config_file must be passed in on the command line. You may use the -point_obs command line argument to specify additional NetCDF observation files to be used.

Point-Stat Tool: Configure


Start by making an output directory for Point-Stat and changing directories:

cd ${HOME}/metplus_tutorial/output/met_output/point_stat

The behavior of Point-Stat is controlled by the contents of the configuration file passed to it on the command line. The default Point-Stat configuration file may be found in the data/config/PointStatConfig_default file.

The configurable items for Point-Stat are used to specify how the verification is to be performed. The configurable items include specifications for the following:

- The forecast fields to be verified at the specified vertical levels.

- The type of point observations to be matched to the forecasts.

- The threshold values to be applied.

- The areas over which to aggregate statistics - as predefined grids, lat/lon polylines, or individual stations.

- The confidence interval methods to be used.

- The interpolation methods to be used.

- The types of verification methods to be used.

Let's customize the configuration file. First, make a copy of the default:

Next, open up the PointStatConfig_tutorial_run1 file for editing and modify it as follows:

- Set:

fcst = {

wind_thresh = [ NA ];

message_type = [ "ADPUPA" ];

field = [

{

name = "TMP";

level = [ "P850-1050", "P500-850" ];

cat_thresh = [ <=273, >273 ];

}

];

}

obs = fcst;To verify temperature over two different pressure ranges against ADPUPA observations using the thresholds specified.

- Set:

ci_alpha = [ 0.05, 0.10 ];

This computes confidence intervals at both the 95% (alpha = 0.05) and 90% (alpha = 0.10) confidence levels.

- Set:

output_flag = {
   fho    = BOTH;
   ctc    = BOTH;
   cts    = STAT;
   mctc   = NONE;
   mcts   = NONE;
   cnt    = BOTH;
   sl1l2  = STAT;
   sal1l2 = NONE;
   vl1l2  = NONE;
   val1l2 = NONE;
   pct    = NONE;
   pstd   = NONE;
   pjc    = NONE;
   prc    = NONE;
   ecnt   = NONE;
   eclv   = BOTH;
   mpr    = BOTH;
}

This requests the following line types:

- forecast-hit-observation rates (FHO)
- contingency table counts (CTC)
- contingency table statistics (CTS)
- continuous statistics (CNT)
- partial sums (SL1L2)
- economic cost/loss value (ECLV)
- matched pair data (MPR)

Lines set to STAT (here, SL1L2 and CTS) are written only to the output .stat file. Lines set to BOTH are written to the .stat file and also to an optional per-line-type .txt file.

- Set:

output_prefix = "run1";

This customizes the output file names for this run.

Note that the grid entry in the mask dictionary of this configuration file is set to FULL. This special value instructs Point-Stat to compute statistics over the entire input model domain.

Next, save the PointStatConfig_tutorial_run1 file and exit the text editor.

Point-Stat Tool: Run


Next, run Point-Stat to compare a GRIB forecast to the NetCDF point observation output of the ASCII2NC tool. Run the following command line:

point_stat \
/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2007033000/nam.t00z.awip1236.tm00.20070330.grb \
../ascii2nc/tutorial_ascii.nc \
PointStatConfig_tutorial_run1 \
-outdir . \
-v 2

Point-Stat is now performing the verification tasks we requested in the configuration file. It should take less than a minute to run. You should see several status messages printed to the screen to indicate progress.

Now try rerunning the command listed above, but increase the verbosity level to 3 (-v 3). Notice the more detailed information about which observations were used for each verification task. If you run Point-Stat and get fewer matched pairs than you expected, try using the -v 3 option to see why the observations were rejected.

Users often write to MET-Help asking why they got zero matched pairs from Point-Stat. The first step is always to rerun Point-Stat with verbosity level 3 or higher. At that level it lists counts of the reasons why observations were not used.

Point-Stat Tool: Output


The output of Point-Stat is one or more ASCII files containing statistics that summarize the verification performed. Since we wrote output to the current directory, it should now contain 6 ASCII files that begin with the point_stat_ prefix:

- one each for the FHO, CTC, CNT, ECLV, and MPR line types, and
- a sixth for the STAT file.

The STAT file contains all of the output statistics, including the CTS and SL1L2 lines that were set to STAT. Each of the other ASCII files contains the same data for a single line type.
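You can sketch the relationship between output_flag and the files on disk in a few lines of Python. This illustration is not MET code and rests on two naming assumptions. First, the .txt files are named point_stat_<prefix>_<timing>_<line type>.txt, like the CTC file name used in this exercise. Second, the STAT file shares the same stem with a .stat extension. The function name `expected_outputs` is hypothetical.

```python
from typing import Dict, List

# output_flag settings from PointStatConfig_tutorial_run1.
RUN1_OUTPUT_FLAG: Dict[str, str] = {
    "fho": "BOTH", "ctc": "BOTH", "cts": "STAT", "mctc": "NONE",
    "mcts": "NONE", "cnt": "BOTH", "sl1l2": "STAT", "sal1l2": "NONE",
    "vl1l2": "NONE", "val1l2": "NONE", "pct": "NONE", "pstd": "NONE",
    "pjc": "NONE", "prc": "NONE", "ecnt": "NONE", "eclv": "BOTH",
    "mpr": "BOTH",
}


def expected_outputs(prefix: str, timing: str, output_flag: Dict[str, str]) -> List[str]:
    """Predict which ASCII files a Point-Stat run writes (hypothetical helper)."""
    stem = f"point_stat_{prefix}_{timing}"
    # Only line types set to BOTH get their own .txt file (assumed naming).
    files = [f"{stem}_{line_type}.txt"
             for line_type, flag in output_flag.items() if flag == "BOTH"]
    # Any line type set to STAT or BOTH also goes into the single .stat file.
    if any(flag in ("STAT", "BOTH") for flag in output_flag.values()):
        files.append(f"{stem}.stat")
    return sorted(files)


if __name__ == "__main__":
    for name in expected_outputs("run1", "360000L_20070331_120000V", RUN1_OUTPUT_FLAG):
        print(name)
```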

Since the lines of data in these ASCII files are so long, we strongly recommend configuring your text editor to NOT use dynamic word wrapping. The files will be much easier to read that way:

- In the kwrite editor, select Settings->Configure Editor, de-select Dynamic Word Wrap and click OK.

- In the vi editor, type the command :set nowrap. To set this as the default behavior, run the following command:

echo "set nowrap" >> ~/.exrc
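Instead of scrolling through long lines in an editor, you can load one of these files into Python. The sketch below assumes each file has a single whitespace-delimited header row followed by data rows with the same number of columns. That holds for fixed-length line types such as CTC, CNT, FHO, and MPR. The function name `read_met_txt` is hypothetical.

```python
import sys
from typing import Dict, List


def read_met_txt(text: str) -> List[Dict[str, str]]:
    """Map each data row of a MET line-type .txt file to {column: value}."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    if not rows:
        return []
    header, data = rows[0], rows[1:]
    # Values stay as strings; convert the columns you need yourself.
    return [dict(zip(header, row)) for row in data]


if __name__ == "__main__":
    # Usage: python read_met_txt.py point_stat_run1_360000L_20070331_120000V_ctc.txt
    if len(sys.argv) > 1:
        with open(sys.argv[1]) as handle:
            for rec in read_met_txt(handle.read()):
                print(rec.get("FCST_LEV"), rec.get("FCST_THRESH"), rec.get("LINE_TYPE"))
```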

Open up the point_stat_run1_360000L_20070331_120000V_ctc.txt CTC file using the text editor of your choice and note the following:

- This is a simple ASCII file consisting of several rows of data.

- Each row contains data for a single verification task.

- The FCST_LEAD, FCST_VALID_BEG, and FCST_VALID_END columns indicate the timing information of the forecast field.

- The OBS_LEAD, OBS_VALID_BEG, and OBS_VALID_END columns indicate the timing information of the observation field.

- The FCST_VAR, FCST_LEV, OBS_VAR, and OBS_LEV columns indicate the variable names and vertical levels of the forecast and observation fields set in the configuration file.

- The OBTYPE column indicates the PrepBufr message type used for this verification task.

- The VX_MASK column indicates the masking region over which the statistics were accumulated.

- The INTERP_MTHD and INTERP_PNTS columns indicate the method used to interpolate the forecast data to the observation location.

- The FCST_THRESH and OBS_THRESH columns indicate the thresholds applied to FCST_VAR and OBS_VAR.

- The COV_THRESH column does not apply to Point-Stat and is always NA in its output.

- The ALPHA column indicates the alpha used for confidence intervals.

- The LINE_TYPE column indicates that these are CTC contingency table count lines.

- The remaining columns contain the counts for the contingency table computed by applying the threshold to the forecast/observation matched pairs. The FY_OY (forecast: yes, observation: yes), FY_ON (forecast: yes, observation: no), FN_OY (forecast: no, observation: yes), and FN_ON (forecast: no, observation: no) columns indicate those counts.
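The following sketch shows how a threshold such as cat_thresh = [ <=273, >273 ] turns matched pairs into these four counts. It is a simplified illustration, not MET's implementation, and makes these assumptions:

- The helper names are hypothetical.
- Only the symbolic operators (<, <=, >, >=, ==, !=) are parsed.
- The same threshold is applied to both the forecast and observation values, as in this exercise because obs = fcst.

```python
import operator
from typing import Callable, Dict, Iterable, Tuple

# Two-character operators come first so "<=" is not read as "<".
_OPERATORS = [("<=", operator.le), (">=", operator.ge), ("==", operator.eq),
              ("!=", operator.ne), ("<", operator.lt), (">", operator.gt)]


def parse_thresh(thresh: str) -> Callable[[float], bool]:
    """Turn a threshold string such as '>273' into an event test."""
    for symbol, op in _OPERATORS:
        if thresh.startswith(symbol):
            value = float(thresh[len(symbol):])
            return lambda x: op(x, value)
    raise ValueError(f"unsupported threshold: {thresh!r}")


def contingency_counts(pairs: Iterable[Tuple[float, float]], thresh: str) -> Dict[str, int]:
    """Count FY_OY, FY_ON, FN_OY, FN_ON for (forecast, observation) pairs."""
    is_event = parse_thresh(thresh)
    counts = {"FY_OY": 0, "FY_ON": 0, "FN_OY": 0, "FN_ON": 0}
    for fcst, obs in pairs:
        key = ("FY" if is_event(fcst) else "FN") + "_" + ("OY" if is_event(obs) else "ON")
        counts[key] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical (forecast, observation) temperatures in Kelvin.
    pairs = [(270.0, 271.0), (269.0, 268.0), (275.0, 272.0), (280.0, 279.0)]
    for thresh in ("<=273", ">273"):
        print(thresh, contingency_counts(pairs, thresh))
```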

Next, answer the following questions about this contingency table output:

- What do you notice about the structure of the contingency table counts with respect to the two thresholds used? Does this make sense?

- Does the model appear to resolve relatively cold surface temperatures?

- Based on these observations, are temperatures >273 relatively rare or common in the P500-850 range? How can this affect the ability to get a good score using contingency table statistics? What about temperatures <=273 at the surface?

Close that file, open up the point_stat_run1_360000L_20070331_120000V_cnt.txt CNT file, and note the following:

- The first 21 columns, prior to the LINE_TYPE, contain the same data as the previous file we viewed.

- The LINE_TYPE column indicates that these are CNT continuous lines.

- The remaining columns contain continuous statistics derived from the raw forecast/observation pairs. See section 4.3.3 of the MET User's Guide for a thorough description of the output.

- Confidence intervals are given for each of these statistics, computed at the alpha value shown in the ALPHA column.

Next, answer the following questions about these continuous statistics:

- What conclusions can you draw about the model's performance at each level using continuous statistics? Justify your answer. Did you use a single metric in your evaluation? Why or why not?

- Comparing the first line with an alpha value of 0.05 to the second line with an alpha value of 0.10, how does the level of confidence change the upper and lower bounds of the confidence intervals (CIs)?

- Similarly, compare the first line, which has relatively few matched pairs in the TOTAL column, to the third line, which has more. How does the sample size affect how you interpret your results?
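To build intuition for the last two questions, the sketch below computes a textbook normal-approximation confidence interval for a mean error. It is not necessarily the exact method MET uses for every CNT statistic. The mean, standard deviation, and sample sizes are made-up values, and the helper name `normal_ci` is hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def normal_ci(mean: float, stdev: float, n: int, alpha: float) -> Tuple[float, float]:
    """Two-sided (1 - alpha) normal-approximation interval for a sample mean."""
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # roughly 1.96 when alpha = 0.05
    half_width = z * stdev / math.sqrt(n)
    return mean - half_width, mean + half_width


if __name__ == "__main__":
    # Hypothetical mean error of 0.5 K with a 2.0 K standard deviation.
    for n in (10, 1000):
        for alpha in (0.05, 0.10):
            lower, upper = normal_ci(0.5, 2.0, n, alpha)
            print(f"n={n:5d} alpha={alpha:.2f} CI=({lower:.3f}, {upper:.3f})")
```

Compare how the printed bounds move as you change alpha and n, then check whether your CNT output behaves the same way.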

Close that file, open up the point_stat_run1_360000L_20070331_120000V_fho.txt FHO file, and note the following:

- The first 21 columns, prior to the LINE_TYPE, contain the same data as the previous file we viewed.

- The LINE_TYPE column indicates that these are FHO forecast-hit-observation rate lines.

- The remaining columns are similar to the contingency table output and contain the total number of matched pairs, the forecast rate, the hit rate, and the observation rate.

- The forecast, hit, and observation rates should back up your answer to the third question about the contingency table output.
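To check that relationship yourself, you can recompute the FHO rates from the CTC counts. The sketch below assumes the FHO definitions in the MET User's Guide:

- forecast rate = forecast events / total
- hit rate = hits / total
- observation rate = observed events / total

The function name `fho_rates` is hypothetical.

```python
from typing import Dict


def fho_rates(fy_oy: int, fy_on: int, fn_oy: int, fn_on: int) -> Dict[str, float]:
    """Derive FHO-style rates from the four contingency table counts."""
    total = fy_oy + fy_on + fn_oy + fn_on
    if total == 0:
        raise ValueError("no matched pairs")
    return {
        "TOTAL": total,
        "F_RATE": (fy_oy + fy_on) / total,  # fraction of pairs forecast as events
        "H_RATE": fy_oy / total,            # fraction of pairs that are hits
        "O_RATE": (fy_oy + fn_oy) / total,  # fraction of pairs observed as events
    }


if __name__ == "__main__":
    # Replace these hypothetical counts with a row from your CTC file.
    print(fho_rates(fy_oy=10, fy_on=5, fn_oy=3, fn_on=82))
```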

Close that file, open up the point_stat_run1_360000L_20070331_120000V_mpr.txt MPR file, and note the following:

- The first 21 columns, prior to the LINE_TYPE, contain the same data as the previous file we viewed.

- The LINE_TYPE column indicates that these are MPR matched pair lines.

- The remaining columns contain:
  - the total number of matched pairs,
  - the matched pair index,
  - the latitude, longitude, and elevation of the observation,
  - the forecast value,
  - the observed value, and
  - the climatological value (if applicable).

- Because the MPR output is very large, we recommend using the MPR line type only to verify that the tool is working properly.
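One way to use the MPR output as a check on the tool is to recompute a few CNT statistics from the FCST and OBS columns and compare them with the CNT file. The sketch below uses the standard definitions, with error = forecast minus observation. The function name is hypothetical. First filter the MPR rows to a single verification task (for example, one FCST_LEV and VX_MASK) so that the pairs correspond to a single CNT line.

```python
import math
from typing import Dict, Iterable, Tuple


def continuous_stats(pairs: Iterable[Tuple[float, float]]) -> Dict[str, float]:
    """Mean error, mean absolute error, and RMSE of (forecast, observation) pairs."""
    errors = [fcst - obs for fcst, obs in pairs]
    n = len(errors)
    if n == 0:
        raise ValueError("no matched pairs")
    return {
        "TOTAL": n,
        "ME": sum(errors) / n,
        "MAE": sum(abs(e) for e in errors) / n,
        "RMSE": math.sqrt(sum(e * e for e in errors) / n),
    }


if __name__ == "__main__":
    # Hypothetical (FCST, OBS) values; in practice, read them from MPR rows.
    print(continuous_stats([(274.0, 273.0), (270.0, 272.0), (281.0, 280.0)]))
```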

Point-Stat Tool: Reconfigure


Now we'll reconfigure and rerun Point-Stat. Start by making a copy of the configuration file we just used:

cp PointStatConfig_tutorial_run1 PointStatConfig_tutorial_run2

This time, we'll use two dictionary entries to specify the forecast field in order to set different thresholds for each vertical level. Point-Stat may be configured to verify as many or as few model variables and vertical levels as you desire. Edit the PointStatConfig_tutorial_run2 file as follows:

- Set:

fcst = {
   wind_thresh  = [ NA ];
   message_type = [ "ADPUPA", "ADPSFC" ];
   field = [
      {
        name       = "TMP";
        level      = [ "Z2" ];
        cat_thresh = [ >273, >278, >283, >288 ];
      },
      {
        name       = "TMP";
        level      = [ "P750-850" ];
        cat_thresh = [ >278 ];
      }
   ];
}
obs = fcst;

This verifies 2-meter temperature and temperature between 750 hPa and 850 hPa against both message types, using the thresholds specified.

- Set:

mask = {
   grid  = [ "G212" ];
   poly  = [ "MET_BASE/poly/EAST.poly",
            "MET_BASE/poly/WEST.poly" ];
   sid   = [];
   llpnt = [];
}

This computes statistics over the NCEP Grid 212 region and over the Eastern and Western United States, as defined by the polylines specified.

- Set:

interp = {
   vld_thresh = 1.0;
   type = [
      {
         method = NEAREST;
         width  = 1;
      },
      {
         method = DW_MEAN;
         width  = 5;
      }
   ];
}

This interpolates the forecast values to the observation locations in two ways:

- using the nearest neighbor, and
- computing a distance-weighted average of the forecast values over the 5 by 5 box surrounding the observation location.

- Set:

output_flag = {
   fho    = BOTH;
   ctc    = BOTH;
   cts    = BOTH;
   mctc   = NONE;
   mcts   = NONE;
   cnt    = BOTH;
   sl1l2  = BOTH;
   sal1l2 = NONE;
   vl1l2  = NONE;
   val1l2 = NONE;
   pct    = NONE;
   pstd   = NONE;
   pjc    = NONE;
   prc    = NONE;
   ecnt   = NONE;
   eclv   = BOTH;
   mpr    = BOTH;
}

This switches the SL1L2 and CTS output to BOTH, so that the optional ASCII output files are also generated for them.

- Set:

output_prefix = "run2";

This customizes the output file names for this run.
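You can illustrate the two interpolation methods on a small grid. This simplified sketch is not MET's implementation and makes these assumptions:

- Observation locations are already expressed as fractional grid coordinates (x = column, y = row).
- DW_MEAN uses inverse-distance-squared weights.
- Only odd widths, centered on the nearest grid point, are supported.
- vld_thresh is the fraction of box points that must fall on the grid.

The function names are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Grid = List[List[float]]


def nearest(grid: Grid, x: float, y: float) -> float:
    """NEAREST, width 1: value at the grid point closest to (x, y)."""
    return grid[math.floor(y + 0.5)][math.floor(x + 0.5)]


def dw_mean(grid: Grid, x: float, y: float, width: int,
            vld_thresh: float = 1.0, power: float = 2.0) -> Optional[float]:
    """Inverse-distance-weighted mean over a width x width box (odd widths only)."""
    if width % 2 == 0:
        raise ValueError("this sketch only handles odd widths")
    ci, cj = math.floor(y + 0.5), math.floor(x + 0.5)
    half = width // 2
    points: List[Tuple[float, float]] = []  # (value, distance) for on-grid points
    for i in range(ci - half, ci + half + 1):
        for j in range(cj - half, cj + half + 1):
            if 0 <= i < len(grid) and 0 <= j < len(grid[0]):
                points.append((grid[i][j], math.hypot(j - x, i - y)))
    # Too few usable points: no interpolated value, so no matched pair.
    if len(points) / (width * width) < vld_thresh:
        return None
    for value, dist in points:
        if dist == 0.0:
            return value  # the observation sits exactly on a grid point
    weights = [dist ** -power for _, dist in points]
    return sum(w * v for w, (v, _) in zip(weights, points)) / sum(weights)


if __name__ == "__main__":
    # Hypothetical 7 x 7 temperature field that warms toward the east.
    field = [[270.0 + j for j in range(7)] for _ in range(7)]
    print(nearest(field, 3.3, 2.8), dw_mean(field, 3.3, 2.8, width=5))
```

With vld_thresh = 1.0, an observation near the edge of the domain gets no DW_MEAN value because part of its 5 by 5 box falls off the grid.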

Let's look at our configuration selections and figure out the number of verification tasks Point-Stat will perform:

- 2 fields: TMP/Z2 and TMP/P750-850

- 2 observing message types: ADPUPA and ADPSFC

- 3 masking regions: G212, EAST.poly, and WEST.poly

- 2 interpolations: NEAREST width 1 (nearest neighbor) and DW_MEAN width 5

Multiplying 2 * 2 * 3 * 2 = 24. So in this example, Point-Stat will accumulate matched forecast/observation pairs into 24 groups. However, some of these groups will result in 0 matched pairs being found. To each non-zero group, the specified threshold(s) will be applied to compute contingency tables.
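The count above is the size of a Cartesian product, which the short Python sketch below enumerates. The labels are shorthand for the configuration entries, not MET output strings.

```python
from itertools import product

fields = ["TMP/Z2", "TMP/P750-850"]
message_types = ["ADPUPA", "ADPSFC"]
masks = ["G212", "EAST.poly", "WEST.poly"]
interps = ["NEAREST width 1", "DW_MEAN width 5"]

# One verification task per combination of field, message type, mask, and interpolation.
tasks = list(product(fields, message_types, masks, interps))
print(len(tasks))  # 24

for task in tasks[:3]:
    print(task)
```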

Can you diagnose why some of these verification tasks resulted in zero matched pairs? (Hint: reread the tip in the Point-Stat Tool: Run section about rerunning Point-Stat at verbosity level 3!)

Point-Stat Tool: Rerun


Next, run Point-Stat to compare a GRIB forecast to the NetCDF point observation output of the PB2NC tool, as opposed to the much smaller ASCII2NC output we used in the first run. Run the following command line:

point_stat \
/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2007033000/nam.t00z.awip1236.tm00.20070330.grb \
../pb2nc/tutorial_pb_run1.nc \
PointStatConfig_tutorial_run2 \
-outdir . \
-v 2

Point-Stat is now performing the verification tasks we requested in the configuration file. It should take a minute or two to run. You should see several status messages printed to the screen to indicate progress. Note the number of matched pairs found for each verification task, some of which are 0.

Plot-Data-Plane Tool