Session 2: Grid-to-Obs

admin Wed, 06/12/2019 - 16:57

METplus Practical Session 2

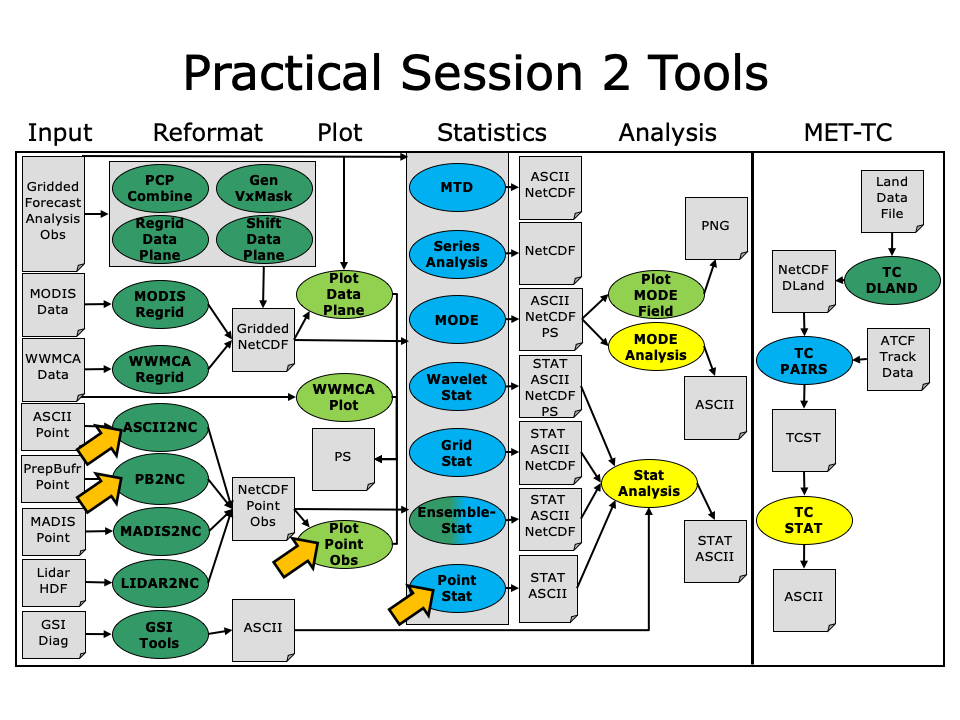

During the second METplus practical session, you will run the tools indicated below:

During this practical session, please work on the Session 2 exercises. You may navigate through this tutorial by following the arrows in the bottom-right or by using the left-hand menu navigation.


Since you already set up your runtime environment in Session 1, you should be ready to go!

The MET User's Guide can be viewed here:

okular /classroom/wrfhelp/MET/8.0/met-8.0/doc/MET_Users_Guide.pdf &

MET Tool: PB2NC

cindyhg Tue, 06/25/2019 - 09:25

PB2NC Tool: General

PB2NC Functionality

The PB2NC tool is used to stratify (i.e. subset) the contents of an input PrepBufr point observation file and reformat it into NetCDF format for use by the Point-Stat or Ensemble-Stat tool. In this session, we will run PB2NC on a PrepBufr point observation file prior to running Point-Stat. Observations may be stratified by variable type, PrepBufr message type, station identifier, a masking region, elevation, report type, vertical level category, quality mark threshold, and level of PrepBufr processing. Stratification is controlled by a configuration file and discussed on the next page.

The PB2NC tool may be run on both PrepBufr and Bufr observation files. As of met-6.1, support for Bufr is limited to files containing embedded tables. Support for Bufr files using external tables will be added in a future release.

For more information about the PrepBufr format, visit:

http://www.emc.ncep.noaa.gov/mmb/data_processing/prepbufr.doc/document.htm

For information on where to download PrepBufr files, visit:

http://www.dtcenter.org/met/users/downloads/observation_data.php

PB2NC Usage

View the usage statement for PB2NC by simply typing the following:

pb2nc

| Usage: pb2nc | |
| --- | --- |
| prepbufr_file | Input PrepBufr path/filename |
| netcdf_file | Output NetCDF path/filename |
| config_file | Configuration path/filename |
| [-pbfile prepbufr_file] | Additional input files |
| [-valid_beg time] | Beginning of valid time window [YYYYMMDD[_HH[MMSS]]] |
| [-valid_end time] | End of valid time window [YYYYMMDD[_HH[MMSS]]] |
| [-nmsg n] | Number of PrepBufr messages to process |
| [-index] | List available observation variables by message type (no output file) |
| [-dump path] | Dump entire contents of PrepBufr file to directory |
| [-log file] | Outputs log messages to the specified file |
| [-v level] | Level of logging |
| [-compression level] | NetCDF file compression |

At a minimum, the input prepbufr_file, the output netcdf_file, and the configuration config_file must be passed in on the command line. Also, you may use the -pbfile command line argument to run PB2NC using multiple input PrepBufr files, likely adjacent in time.

When running PB2NC on a new dataset, users are advised to run with the -index option to list the observation variables that are present in that file.

PB2NC Tool: Configure

cindyhg Tue, 06/25/2019 - 09:26

Start by making an output directory for PB2NC and changing directories:

mkdir -p ${HOME}/metplus_tutorial/output/met_output/pb2nc

cd ${HOME}/metplus_tutorial/output/met_output/pb2nc

The behavior of PB2NC is controlled by the contents of the configuration file passed to it on the command line. The default PB2NC configuration may be found in the data/config/PB2NCConfig_default file. Prior to modifying the configuration file, users are advised to make a copy of the default:

cp /classroom/wrfhelp/MET/8.0/met-8.0/data/config/PB2NCConfig_default PB2NCConfig_tutorial_run1

Open up the PB2NCConfig_tutorial_run1 file for editing with your preferred text editor.

The configurable items for PB2NC are used to select which PrepBufr observations should be retained or derived. You may find a complete description of the configurable items in section 4.1.2 of the MET User's Guide or in the configuration README file.

For this tutorial, edit the PB2NCConfig_tutorial_run1 file as follows:

- Set:

message_type = [ "ADPUPA", "ADPSFC" ];

To retain only those 2 message types. Message types are described in:

http://www.emc.ncep.noaa.gov/mmb/data_processing/prepbufr.doc/table_1.htm

- Set:

obs_window = {

beg = -30*60;

end = 30*60;

}

So that only observations within 30 minutes of the file time will be retained.

- Set:

mask = {

grid = "G212";

poly = "";

}

To retain only those observations residing within NCEP Grid 212, on which the forecast data resides.

- Set:

obs_bufr_var = [ "QOB", "TOB", "UOB", "VOB", "D_WIND", "D_RH" ];

To retain observations for specific humidity, temperature, the u-component of wind, and the v-component of wind and to derive observation values for wind speed and relative humidity.

While we request these observation variable names from the input file, the following corresponding strings will be written to the output file: SPFH, TMP, UGRD, VGRD, WIND, RH. This mapping of input PrepBufr variable names to output variable names is specified by the obs_prepbufr_map config file entry. This enables the new features in the current version of MET to be backward compatible with earlier versions.
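To make the combined effect of these settings concrete, here is a small Python sketch of the same selection logic. It is an illustration only, not how PB2NC is implemented: each report is a hypothetical dict holding a message type, a time offset in seconds from the file's reference time, and a PrepBufr variable name. The window is assumed to be inclusive at both ends, and the name map covers only the six variables requested here.

```python
# Hypothetical sketch of the PB2NC filters configured above; this is not MET code.
MESSAGE_TYPES = {"ADPUPA", "ADPSFC"}   # message_type
OBS_WINDOW = (-30 * 60, 30 * 60)       # obs_window beg/end in seconds (-1800, 1800)

# Input PrepBufr names -> output names, following the mapping described above.
NAME_MAP = {"QOB": "SPFH", "TOB": "TMP", "UOB": "UGRD",
            "VOB": "VGRD", "D_WIND": "WIND", "D_RH": "RH"}


def keep(report):
    """Return the output variable name if a report passes every filter, else None."""
    if report["msg_type"] not in MESSAGE_TYPES:
        return None
    beg, end = OBS_WINDOW
    # offset_sec (assumed field): observation time minus reference time, in seconds.
    if not beg <= report["offset_sec"] <= end:
        return None
    return NAME_MAP.get(report["var"])  # None if the variable was not requested


reports = [
    {"msg_type": "ADPUPA", "offset_sec": 0, "var": "TOB"},
    {"msg_type": "AIRCAR", "offset_sec": 0, "var": "TOB"},     # message type not retained
    {"msg_type": "ADPSFC", "offset_sec": 3600, "var": "QOB"},  # outside +/- 30 minutes
    {"msg_type": "ADPSFC", "offset_sec": -900, "var": "D_RH"},
]
print([keep(r) for r in reports])  # ['TMP', None, None, 'RH']
```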

Next, save the PB2NCConfig_tutorial_run1 file and exit the text editor.

PB2NC Tool: Run

cindyhg Tue, 06/25/2019 - 09:27

Next, run PB2NC on the command line using the following command:

pb2nc \

/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_obs/prepbufr/ndas.t00z.prepbufr.tm12.20070401.nr \

tutorial_pb_run1.nc \

PB2NCConfig_tutorial_run1 \

-v 2

PB2NC is now filtering the observations from the PrepBufr file using the configuration settings we specified and writing the output to the NetCDF file name we chose. This should take a few minutes to run. As it runs, you should see several status messages printed to the screen to indicate progress. You may use the -v command line option to turn off (-v 0) or change the amount of log information printed to the screen.

Inspect the PB2NC status messages and note that 69833 PrepBufr messages were filtered down to 8394 messages which produced 52491 actual and derived observations. If you'd like to filter down the observations further, you may want to narrow the time window or modify other filtering criteria. We will do that after inspecting the resultant NetCDF file.

PB2NC Tool: Output

cindyhg Tue, 06/25/2019 - 09:29

When PB2NC is finished, you may view the output NetCDF file it wrote using the ncdump utility. Run the following command to view the header of the NetCDF output file:

ncdump -h tutorial_pb_run1.nc

In the NetCDF header, you'll see that the file contains nine dimensions and nine variables. The obs_arr variable contains the actual observation values. The obs_qty variable contains the corresponding quality flags. The four header variables (hdr_typ, hdr_sid, hdr_vld, hdr_arr) contain information about the observing locations.

The obs_var, obs_unit, and obs_desc variables describe the observation variables contained in the output. The second entry of the obs_arr variable (i.e. var_id) lists the index into these arrays for each observation. For example, for observations of temperature, you'd see TMP in obs_var, KELVIN in obs_unit, and TEMPERATURE OBSERVATION in obs_desc. For observations of temperature in obs_arr, the second entry (var_id) would list the index of that temperature information.
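If the var_id indirection is unfamiliar, this plain-Python sketch shows the idea: each observation's var_id is looked up in obs_var to recover its name. The arrays are small hand-made stand-ins rather than values read from tutorial_pb_run1.nc. The five-entry row layout [hdr_id, var_id, level, height, value] is an assumption for illustration, so confirm the layout in your own file's ncdump header.

```python
# Hand-made stand-ins, not values read from tutorial_pb_run1.nc.
obs_var = ["SPFH", "TMP", "UGRD", "VGRD", "WIND", "RH"]  # names written by PB2NC here

# Assumed row layout: [hdr_id, var_id, level, height, value]
obs_arr = [
    [0, 1, 850.0, 1500.0, 271.4],
    [0, 5, 850.0, 1500.0, 63.0],
    [1, 1, 1013.0, 2.0, 285.2],
]


def values_by_variable(rows, names):
    """Group observation values by the name that each row's var_id points to."""
    grouped = {}
    for _hdr_id, var_id, _level, _height, value in rows:
        grouped.setdefault(names[int(var_id)], []).append(value)
    return grouped


print(values_by_variable(obs_arr, obs_var))  # {'TMP': [271.4, 285.2], 'RH': [63.0]}
```

The same index also selects the matching entries of obs_unit and obs_desc.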

Inspect the output of ncdump before continuing.

Plot-Point-Obs

The plot_point_obs tool plots the location of these NetCDF point observations. Just as plot_data_plane is useful for visualizing gridded data, plot_point_obs is useful for confirming that you have point observations where you expect them. Run the following command:

plot_point_obs \

tutorial_pb_run1.nc \

tutorial_pb_run1.ps

Display the output PostScript file by running the following command:

Each red dot in the plot represents the location of at least one observation value. The plot_point_obs tool has additional command line options for filtering which observations get plotted and the area to be plotted. View its usage statement by running the following command:

plot_point_obs

By default, the points are plotted on the full globe. Next, try rerunning plot_point_obs using the -data_file option to specify the grid over which the points should be plotted:

plot_point_obs \

tutorial_pb_run1.nc \

tutorial_pb_run1_zoom.ps \

-data_file /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2007033000/nam.t00z.awip1236.tm00.20070330.grb

MET extracts the grid information from the first record of that GRIB file and plots the points on that domain. Display the output PostScript file by running the following command:

The plot_point_obs tool can be run on the NetCDF output of any of the MET point observation pre-processing tools (pb2nc, ascii2nc, madis2nc, and lidar2nc).

PB2NC Tool: Reconfigure and Rerun

cindyhg Tue, 06/25/2019 - 09:31

Now we'll rerun PB2NC, but this time we'll tighten the observation acceptance criteria. Start by making a copy of the configuration file we just used:

cp PB2NCConfig_tutorial_run1 PB2NCConfig_tutorial_run2

Open up the PB2NCConfig_tutorial_run2 file and edit it as follows:

- Set:

message_type = [];

To retain all message types.

- Set:

obs_window = {

beg = -25*60;

end = 25*60;

}

So that only observations from 25 minutes before to 25 minutes after the top of the hour are retained.

- Set:

quality_mark_thresh = 1;

To retain only the observations marked "Good" by the NCEP quality control system.

Next, run PB2NC again but change the output name using the following command:

pb2nc \

/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_obs/prepbufr/ndas.t00z.prepbufr.tm12.20070401.nr \

tutorial_pb_run2.nc \

PB2NCConfig_tutorial_run2 \

-v 2

Inspect the PB2NC status messages and note that 4000 observations were retained rather than 52491 in the previous example. The majority of the observations were rejected because their valid time no longer fell inside the tighter obs_window setting.

When configuring PB2NC for your own projects, you should err on the side of keeping more data rather than less. As you'll see, the grid-to-point verification tools (Point-Stat and Ensemble-Stat) allow you to further refine which point observations are actually used in the verification. However, keeping a lot of point observations that you'll never actually use will make the data files larger and slightly slow down the verification. For example, if you're using a Global Data Assimilation System (GDAS) PREPBUFR file to verify a model over Europe, it would make sense to only keep those point observations that fall within your model domain.

MET Tool: ASCII2NC

cindyhg Tue, 06/25/2019 - 09:32

ASCII2NC Tool: General

ASCII2NC Functionality

The ASCII2NC tool reformats ASCII point observations into the intermediate NetCDF format that Point-Stat and Ensemble-Stat read. ASCII2NC simply reformats the data and does much less filtering of the observations than PB2NC does. ASCII2NC supports a simple 11-column format, described below, the Little-R format often used in data assimilation, surface radiation (SURFRAD) data, Western Wind and Solar Integration Study (WWSIS) data, and AErosol RObotic NEtwork (Aeronet) data. Future versions of MET may be enhanced to support additional commonly used ASCII point observation formats based on community input.

MET Point Observation Format

The MET point observation format consists of one observation value per line. Each input observation line should consist of the following 11 columns of data:

- Message_Type

- Station_ID

- Valid_Time in YYYYMMDD_HHMMSS format

- Lat in degrees North

- Lon in degrees East

- Elevation in meters above sea level

- Variable_Name for this observation (or GRIB_Code for backward compatibility)

- Level as the pressure level in hPa or accumulation interval in hours

- Height in meters above sea level or above ground level

- QC_String quality control string

- Observation_Value

It is the user's responsibility to get their ASCII point observations into this format.
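Producing this layout from your own data is usually a small scripting job. The Python sketch below writes and reads one observation line. It assumes whitespace-separated columns with no spaces inside any field, and the station and values in the example are made up for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class PointObs:
    """One observation in the 11-column MET point observation format."""
    message_type: str
    station_id: str
    valid_time: str   # YYYYMMDD_HHMMSS
    lat: float        # degrees North
    lon: float        # degrees East
    elevation: float  # meters above sea level
    var_name: str     # variable name (or GRIB code)
    level: float      # pressure level (hPa) or accumulation interval (hours)
    height: float     # meters above sea level or above ground level
    qc_string: str
    value: float

    def to_line(self) -> str:
        """Join the 11 columns, in order, with single spaces."""
        return " ".join(str(getattr(self, f.name)) for f in fields(self))


def parse_line(line: str) -> PointObs:
    """Split one line into 11 columns and convert the numeric ones to float."""
    cols = line.split()
    if len(cols) != 11:
        raise ValueError(f"expected 11 columns, got {len(cols)}")
    typ, sid, vld, lat, lon, elv, var, lvl, hgt, qc, val = cols
    return PointObs(typ, sid, vld, float(lat), float(lon), float(elv),
                    var, float(lvl), float(hgt), qc, float(val))


# Made-up example values for illustration only.
obs = PointObs("ADPUPA", "STN01", "20070331_120000", 35.23, -97.46, 357.0,
               "TMP", 850.0, 1500.0, "NA", 271.4)
line = obs.to_line()
print(line)  # ADPUPA STN01 20070331_120000 35.23 -97.46 357.0 TMP 850.0 1500.0 NA 271.4
```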

ASCII2NC Usage

View the usage statement for ASCII2NC by simply typing the following:

ascii2nc

| Usage: ascii2nc | |
| --- | --- |
| ascii_file1 [...] | One or more input ASCII path/filename |
| netcdf_file | Output NetCDF path/filename |
| [-format ASCII_format] | Set to met_point, little_r, surfrad, wwsis, or aeronet |
| [-config file] | Configuration file to specify how observation data should be summarized |
| [-mask_grid string] | Named grid or a gridded data file for filtering point observations spatially |
| [-mask_poly file] | Polyline masking file for filtering point observations spatially |
| [-mask_sid file\|list] | Specific station IDs to be used, given in an ASCII file or as a comma-separated list |
| [-log file] | Outputs log messages to the specified file |
| [-v level] | Level of logging |
| [-compress level] | NetCDF compression level |

At a minimum, the input ascii_file and the output netcdf_file must be passed on the command line. ASCII2NC interrogates the data to determine its format, but the user may explicitly set it using the -format command line option. The -mask_grid, -mask_poly, and -mask_sid options can be used to filter observations spatially.

ASCII2NC Tool: Run

cindyhg Tue, 06/25/2019 - 09:33

Start by making an output directory for ASCII2NC and changing directories:

mkdir -p ${HOME}/metplus_tutorial/output/met_output/ascii2nc

cd ${HOME}/metplus_tutorial/output/met_output/ascii2nc

Since ASCII2NC performs a simple reformatting step, typically no configuration file is needed. However, when processing high-frequency (1 or 3-minute) SURFRAD data, a configuration file may be used to define a time window and summary metric for each station. For example, you might compute the average observation value +/- 15 minutes at the top of each hour for each station. In this example, we will not use a configuration file.
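The summary idea can be sketched in Python. This is not ASCII2NC's configuration or code. It only illustrates averaging one station's values within +/- 15 minutes of every top of the hour. Times are given as minutes since midnight to keep the example short, the window half-width is assumed to be under 30 minutes so each value belongs to at most one hour, and the 3-minute series is synthetic.

```python
from collections import defaultdict


def hourly_means(obs, half_width_min=15):
    """Average values within +/- half_width_min minutes of each top of the hour.

    obs: iterable of (minutes_since_midnight, value) pairs for one station.
    Assumes half_width_min < 30, so each value falls in at most one hourly window.
    Returns {hour: mean value} for hours with at least one value.
    """
    buckets = defaultdict(list)
    for minute, value in obs:
        hour = round(minute / 60)  # nearest top of the hour
        if abs(minute - 60 * hour) <= half_width_min:
            buckets[hour].append(value)
    return {hour: sum(vals) / len(vals) for hour, vals in sorted(buckets.items())}


# Synthetic 3-minute series from 00:00 to 02:00 whose value equals its minute.
series = [(m, float(m)) for m in range(0, 121, 3)]
print(hourly_means(series))  # {0: 7.5, 1: 60.0, 2: 112.5}
```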

The sample ASCII observations in the MET tarball are still identified by GRIB code rather than the newer variable name option. Dump that file and notice that the GRIB codes in the seventh column could be replaced by corresponding variable names. For example, 52 corresponds to RH:

cat /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_obs/ascii/sample_ascii_obs.txt
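To switch a file like this to variable names, you could rewrite the seventh column with a small script. This Python sketch is an illustration and not part of MET. The lookup table lists only a handful of common NCEP GRIB1 parameter codes, unknown codes are left unchanged, and rewritten lines are re-joined with single spaces.

```python
# A few NCEP GRIB1 parameter codes and their abbreviations.
GRIB1_NAMES = {1: "PRES", 7: "HGT", 11: "TMP", 17: "DPT", 32: "WIND",
               33: "UGRD", 34: "VGRD", 51: "SPFH", 52: "RH", 61: "APCP"}


def code_to_name(line: str) -> str:
    """Replace a numeric GRIB code in the seventh column with its abbreviation, if known."""
    cols = line.split()
    if len(cols) == 11 and cols[6].isdigit():
        cols[6] = GRIB1_NAMES.get(int(cols[6]), cols[6])  # unknown codes stay as-is
    return " ".join(cols)


# Made-up line in the 11-column format, with GRIB code 52 in the seventh column.
line = "ADPSFC STN01 20070331_120000 35.23 -97.46 357.0 52 1000.0 2.0 NA 63.0"
print(code_to_name(line))
# ADPSFC STN01 20070331_120000 35.23 -97.46 357.0 RH 1000.0 2.0 NA 63.0
```

To convert a whole file, apply code_to_name to each line, write the results to a new file, and run ASCII2NC on that copy.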

Run ASCII2NC on the command line using the following command:

ascii2nc \

/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_obs/ascii/sample_ascii_obs.txt \

tutorial_ascii.nc \

-v 2

ASCII2NC should perform this reformatting step very quickly since the sample file only contains data for 5 stations.

ASCII2NC Tool: Output

cindyhg Tue, 06/25/2019 - 09:37

When ASCII2NC is finished, you may view the output NetCDF file it wrote using the ncdump utility. Run the following command to view the header of the NetCDF output file:

ncdump -h tutorial_ascii.nc

The NetCDF header should look nearly identical to the header of the PB2NC NetCDF output. You can see the list of stations for which we have data by inspecting the hdr_sid_table variable:

ncdump -v hdr_sid_table tutorial_ascii.nc

Feel free to inspect the contents of the other variables as well.

This ASCII data only contains observations at a few locations. Use plot_point_obs to plot the locations, increasing the level of verbosity to 3 to see more detail:

plot_point_obs \

tutorial_ascii.nc \

tutorial_ascii.ps \

-data_file /classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2007033000/nam.t00z.awip1236.tm00.20070330.grb \

-v 3

Next, we'll use the NetCDF output of PB2NC and ASCII2NC to perform Grid-to-Point verification using the Point-Stat tool.

MET Tool: Point-Stat

cindyhg Tue, 06/25/2019 - 09:38

Point-Stat Tool: General

Point-Stat Functionality

The Point-Stat tool provides verification statistics for comparing gridded forecasts to observation points, as opposed to gridded analyses like Grid-Stat. The Point-Stat tool matches gridded forecasts to point observation locations using one or more configurable interpolation methods. The tool then computes a configurable set of verification statistics for these matched pairs. Continuous statistics are computed over the raw matched pair values. Categorical statistics are generally calculated by applying a threshold to the forecast and observation values. Confidence intervals, which represent a measure of uncertainty, are computed for all of the verification statistics.
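The sketch below illustrates the interpolation step by computing a nearest-neighbor value and a distance-weighted mean over a small box of grid points. It is a simplified stand-in rather than MET's implementation. Locations are given in fractional grid units (x = column, y = row), only odd box widths are handled, and inverse-distance-squared weights are assumed. See the MET User's Guide for the exact definitions of NEAREST and DW_MEAN.

```python
def nearest(grid, x, y):
    """Value at the grid point closest to the fractional location (x, y)."""
    return grid[round(y)][round(x)]


def dw_mean(grid, x, y, width):
    """Distance-weighted mean over a width x width box of points around (x, y).

    Assumes an odd width and 1/d**2 weights; points off the grid are skipped.
    If (x, y) falls exactly on a grid point, that point's value is returned.
    """
    if width % 2 == 0:
        raise ValueError("this sketch only handles odd widths")
    half = width // 2
    ci, cj = round(x), round(y)  # center the box on the nearest grid point
    num = den = 0.0
    for j in range(cj - half, cj + half + 1):
        for i in range(ci - half, ci + half + 1):
            if not (0 <= j < len(grid) and 0 <= i < len(grid[0])):
                continue
            d2 = (i - x) ** 2 + (j - y) ** 2
            if d2 == 0:
                return grid[j][i]
            num += grid[j][i] / d2
            den += 1.0 / d2
    return num / den


# A made-up 7 x 7 temperature field that increases by 1 K per grid step in x and y.
grid = [[270.0 + i + j for i in range(7)] for j in range(7)]
print(nearest(grid, 2.4, 3.6))     # 276.0
print(dw_mean(grid, 3.0, 3.0, 5))  # 276.0 (the location is on a grid point)
print(dw_mean(grid, 2.4, 3.6, 5))  # weighted toward the closest points
```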

Point-Stat Usage

View the usage statement for Point-Stat by simply typing the following:

point_stat

| Usage: point_stat | |
| --- | --- |
| fcst_file | Input gridded file path/name |
| obs_file | Input NetCDF observation file path/name |
| config_file | Configuration file |
| [-point_obs file] | Additional NetCDF observation files to be used (optional) |
| [-obs_valid_beg time] | Sets the beginning of the matching time window in YYYYMMDD[_HH[MMSS]] format (optional) |
| [-obs_valid_end time] | Sets the end of the matching time window in YYYYMMDD[_HH[MMSS]] format (optional) |
| [-outdir path] | Overrides the default output directory (optional) |
| [-log file] | Outputs log messages to the specified file (optional) |
| [-v level] | Level of logging (optional) |

At a minimum, the input gridded fcst_file, the input NetCDF obs_file (the output of PB2NC, ASCII2NC, MADIS2NC, or LIDAR2NC; the last two are not covered in these exercises), and the configuration config_file must be passed in on the command line. You may use the -point_obs command line argument to specify additional NetCDF observation files to be used.

Point-Stat Tool: Configure

cindyhg Tue, 06/25/2019 - 09:40

Start by making an output directory for Point-Stat and changing directories:

mkdir -p ${HOME}/metplus_tutorial/output/met_output/point_stat

cd ${HOME}/metplus_tutorial/output/met_output/point_stat

The behavior of Point-Stat is controlled by the contents of the configuration file passed to it on the command line. The default Point-Stat configuration file may be found in the data/config/PointStatConfig_default file.

The configurable items for Point-Stat are used to specify how the verification is to be performed. The configurable items include specifications for the following:

- The forecast fields to be verified at the specified vertical levels.

- The type of point observations to be matched to the forecasts.

- The threshold values to be applied.

- The areas over which to aggregate statistics - as predefined grids, lat/lon polylines, or individual stations.

- The confidence interval methods to be used.

- The interpolation methods to be used.

- The types of verification methods to be used.

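Let's customize the configuration file. First, make a copy of the default:

cp /classroom/wrfhelp/MET/8.0/met-8.0/data/config/PointStatConfig_default PointStatConfig_tutorial_run1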

Next, open up the PointStatConfig_tutorial_run1 file for editing and modify it as follows:

- Set:

fcst = {

wind_thresh = [ NA ];

message_type = [ "ADPUPA" ];

field = [

{

name = "TMP";

level = [ "P850-1050", "P500-850" ];

cat_thresh = [ <=273, >273 ];

}

];

}

obs = fcst;

To verify temperature over two different pressure ranges against ADPUPA observations using the thresholds specified.

- Set:

ci_alpha = [ 0.05, 0.10 ];

To compute both 95% and 90% confidence intervals (alpha values of 0.05 and 0.10, respectively).

- Set:

output_flag = {

fho = BOTH;

ctc = BOTH;

cts = STAT;

mctc = NONE;

mcts = NONE;

cnt = BOTH;

sl1l2 = STAT;

sal1l2 = NONE;

vl1l2 = NONE;

val1l2 = NONE;

pct = NONE;

pstd = NONE;

pjc = NONE;

prc = NONE;

ecnt = NONE;

eclv = BOTH;

mpr = BOTH;

}

To indicate that the forecast-hit-observation (FHO) rates, contingency table counts (CTC), contingency table statistics (CTS), continuous statistics (CNT), partial sums (SL1L2), economic cost/loss value (ECLV), and the matched pair data (MPR) line types should be output. Setting SL1L2 and CTS to STAT causes those lines to be written only to the output .stat file, while setting the others to BOTH causes them to be written to both the .stat file and the optional LINE_TYPE.txt files.

- Set:

output_prefix = "run1";

To customize the output file names for this run.

Note in this configuration file that in the mask dictionary, the grid entry is set to FULL. This instructs Point-Stat to compute statistics over the entire input model domain. Setting grid to FULL has this special meaning.

Next, save the PointStatConfig_tutorial_run1 file and exit the text editor.

Point-Stat Tool: Run

cindyhg Tue, 06/25/2019 - 09:41

Next, run Point-Stat to compare a GRIB forecast to the NetCDF point observation output of the ASCII2NC tool. Run the following command line:

point_stat \

/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2007033000/nam.t00z.awip1236.tm00.20070330.grb \

../ascii2nc/tutorial_ascii.nc \

PointStatConfig_tutorial_run1 \

-outdir . \

-v 2

Point-Stat is now performing the verification tasks we requested in the configuration file. It should take less than a minute to run. You should see several status messages printed to the screen to indicate progress.

Now try rerunning the command listed above, but increase the verbosity level to 3 (-v 3). Notice the more detailed information about which observations were used for each verification task. If you run Point-Stat and get fewer matched pairs than you expected, try using the -v 3 option to see why the observations were rejected.

Users often write MET-Help to ask why they got zero matched pairs from Point-Stat. The first step is always rerunning Point-Stat using verbosity level 3 or higher to list the counts of reasons for why observations were not used!

Point-Stat Tool: Output

cindyhg Tue, 06/25/2019 - 09:42

The output of Point-Stat is one or more ASCII files containing statistics summarizing the verification performed. Since we wrote output to the current directory, it should now contain 6 ASCII files that begin with the point_stat_ prefix, one each for the FHO, CTC, CNT, ECLV, and MPR types, and a sixth for the STAT file. The STAT file contains all of the output statistics while the other ASCII files contain the exact same data organized by line type.
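To explore these files programmatically, you can read each LINE_TYPE.txt file as a table whose first row holds the column names. The Python sketch below assumes whitespace-separated columns and a single header row. It is not meant for the .stat file, which mixes line types with different columns in one file. The two-line sample is shortened and made up, since real files have many more columns.

```python
def read_met_txt(lines):
    """Parse a header row plus whitespace-separated data rows into a list of dicts.

    Values are kept as strings; convert the numeric columns you need.
    """
    rows = [line.split() for line in lines if line.strip()]
    if not rows:
        return []
    header, data = rows[0], rows[1:]
    return [dict(zip(header, row)) for row in data]


# Shortened, made-up sample. With a real file you might use:
#   with open("point_stat_run1_360000L_20070331_120000V_ctc.txt") as f:
#       records = read_met_txt(f)
sample = [
    "VERSION MODEL OBTYPE VX_MASK LINE_TYPE TOTAL FY_OY FY_ON FN_OY FN_ON",
    "V8.0    WRF   ADPUPA FULL    CTC       10    4     1     2     3",
]
records = read_met_txt(sample)
print(records[0]["LINE_TYPE"], records[0]["FY_OY"])  # CTC 4
```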

Since the lines of data in these ASCII files are so long, we strongly recommend configuring your text editor to NOT use dynamic word wrapping. The files will be much easier to read that way:

- In the kwrite editor, select Settings->Configure Editor, de-select Dynamic Word Wrap and click OK.

- In the vi editor, type the command :set nowrap. To set this as the default behavior, run the following command:

echo "set nowrap" >> ~/.exrc

Open up the point_stat_run1_360000L_20070331_120000V_ctc.txt CTC file using the text editor of your choice and note the following:

- This is a simple ASCII file consisting of several rows of data.

- Each row contains data for a single verification task.

- The FCST_LEAD, FCST_VALID_BEG, and FCST_VALID_END columns indicate the timing information of the forecast field.

- The OBS_LEAD, OBS_VALID_BEG, and OBS_VALID_END columns indicate the timing information of the observation field.

- The FCST_VAR, FCST_LEV, OBS_VAR, and OBS_LEV columns indicate the two parts of the forecast and observation fields set in the configuration file.

- The OBTYPE column indicates the PrepBufr message type used for this verification task.

- The VX_MASK column indicates the masking region over which the statistics were accumulated.

- The INTERP_MTHD and INTERP_PNTS columns indicate the method used to interpolate the forecast data to the observation location.

- The FCST_THRESH and OBS_THRESH columns indicate the thresholds applied to FCST_VAR and OBS_VAR.

- The COV_THRESH column is not applicable here and will always have NA when using point_stat.

- The ALPHA column indicates the alpha used for confidence intervals.

- The LINE_TYPE column indicates that these are CTC contingency table count lines.

- The remaining columns contain the counts for the contingency table computed by applying the threshold to the forecast/observation matched pairs. The FY_OY (forecast: yes, observation: yes), FY_ON (forecast: yes, observation: no), FN_OY (forecast: no, observation: yes), and FN_ON (forecast: no, observation: no) columns indicate those counts.
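The Python sketch below shows how the four counts arise by applying one threshold to a list of (forecast, observation) matched pairs. It is an illustration, not Point-Stat's code. The same threshold is applied to both the forecast and the observation, and the pairs are made-up temperatures in Kelvin.

```python
import operator

OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le}


def contingency_counts(pairs, op, thresh):
    """Count FY_OY, FY_ON, FN_OY, and FN_ON for (forecast, observation) pairs.

    An event is "value op thresh"; for example, op=">" with thresh=273 means >273.
    The same threshold is applied to the forecast and the observation.
    """
    def is_event(value):
        return OPS[op](value, thresh)

    counts = {"FY_OY": 0, "FY_ON": 0, "FN_OY": 0, "FN_ON": 0}
    for fcst, obs in pairs:
        key = ("FY" if is_event(fcst) else "FN") + "_" + ("OY" if is_event(obs) else "ON")
        counts[key] += 1
    return counts


# Made-up matched pairs (forecast, observation) in Kelvin.
pairs = [(275.0, 274.0), (278.0, 276.0), (272.0, 274.5), (270.0, 271.0), (276.0, 272.5)]
print(contingency_counts(pairs, ">", 273))
# {'FY_OY': 2, 'FY_ON': 1, 'FN_OY': 1, 'FN_ON': 1}
```

Try the same pairs with "<=" and 273 and compare the result with the structure you see in the CTC file.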

Next, answer the following questions about this contingency table output:

- What do you notice about the structure of the contingency table counts with respect to the two thresholds used? Does this make sense?

- Does the model appear to resolve relatively cold surface temperatures?

- Based on these observations, are temperatures >273 relatively rare or common in the P500-850 range? How can this affect the ability to get a good score using contingency table statistics? What about temperatures <=273 at the surface?

Close that file, open up the point_stat_run1_360000L_20070331_120000V_cnt.txt CNT file, and note the following:

- The first 21 columns, prior to the LINE_TYPE, contain the same data as the previous file we viewed.

- The LINE_TYPE column indicates that these are CNT continuous lines.

- The remaining columns contain continuous statistics derived from the raw forecast/observation pairs. See section 4.3.3 of the MET User's Guide for a thorough description of the output.

- Again, confidence intervals are given for each of these statistics as described above.
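The sketch below computes three of the simpler CNT statistics from matched pairs. It also attaches a normal-approximation confidence interval to the mean error at two alpha values, which may help with the questions that follow. It is not Point-Stat's code, since MET documents its own interval formulas in the User's Guide, and the five pairs are made up.

```python
import math
from statistics import NormalDist, mean, stdev


def continuous_stats(pairs):
    """Mean error, mean absolute error, and RMSE of forecast minus observation."""
    errors = [f - o for f, o in pairs]
    return {
        "ME": mean(errors),
        "MAE": mean(abs(e) for e in errors),
        "RMSE": math.sqrt(mean(e * e for e in errors)),
    }


def mean_error_ci(pairs, alpha):
    """Normal-approximation confidence interval for the mean error at level 1 - alpha."""
    errors = [f - o for f, o in pairs]
    z = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    half_width = z * stdev(errors) / math.sqrt(len(errors))
    me = mean(errors)
    return me - half_width, me + half_width


pairs = [(281.0, 280.0), (279.0, 280.0), (282.0, 280.0), (280.0, 280.0), (283.0, 280.0)]
print(continuous_stats(pairs))  # ME = 1.0, MAE = 1.4, RMSE = sqrt(3)
for alpha in (0.05, 0.10):
    print(alpha, mean_error_ci(pairs, alpha))
```

The smaller alpha yields a larger z value and therefore a wider interval around the same mean error.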

Next, answer the following questions about these continuous statistics:

- What conclusions can you draw about the model's performance at each level using continuous statistics? Justify your answer. Did you use a single metric in your evaluation? Why or why not?

- Comparing the first line with an alpha value of 0.05 to the second line with an alpha value of 0.10, how does the level of confidence change the upper and lower bounds of the confidence intervals (CIs)?

- Similarly, comparing the first line, with few matched pairs in the TOTAL column, to the third line, with more, how does the sample size affect how you interpret your results?

Close that file, open up the point_stat_run1_360000L_20070331_120000V_fho.txt FHO file, and note the following:

- The first 21 columns, prior to the LINE_TYPE, contain the same data as the previous file we viewed.

- The LINE_TYPE column indicates that these are FHO forecast-hit-observation rate lines.

- The remaining columns are similar to the contingency table output and contain the total number of matched pairs, the forecast rate, the hit rate, and the observation rate.

- The forecast, hit, and observation rates should back up your answer to the third question about the contingency table output.
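The three rates can be written directly in terms of the contingency table counts. The sketch below uses the definitions as we understand them: forecast rate = fraction of pairs with a yes forecast, hit rate = fraction with both a yes forecast and a yes observation, and observation rate = fraction with a yes observation. Confirm them against the FHO description in the MET User's Guide.

```python
def fho_rates(fy_oy, fy_on, fn_oy, fn_on):
    """Forecast, hit, and observation rates from 2x2 contingency table counts."""
    total = fy_oy + fy_on + fn_oy + fn_on
    return {
        "TOTAL": total,
        "F_RATE": (fy_oy + fy_on) / total,  # fraction of pairs with a "yes" forecast
        "H_RATE": fy_oy / total,            # fraction with "yes" forecast and observation
        "O_RATE": (fy_oy + fn_oy) / total,  # fraction with a "yes" observation
    }


print(fho_rates(2, 1, 1, 1))
# {'TOTAL': 5, 'F_RATE': 0.6, 'H_RATE': 0.4, 'O_RATE': 0.6}
```

If you plug in the counts from one of your CTC lines, the result should be consistent with the matching FHO line.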

Close that file, open up the point_stat_run1_360000L_20070331_120000V_mpr.txt MPR file, and note the following:

- The first 21 columns, prior to the LINE_TYPE, contain the same data as the previous file we viewed.

- The LINE_TYPE column indicates that these are MPR matched pair lines.

- The remaining columns contain the total number of matched pairs, the matched pair index, the latitude, longitude, and elevation of the observation, the forecast value, the observed value, and the climatological value (if applicable).

- There is a lot of data here and it is recommended that the MPR line_type is used only to verify the tool is working properly.

Point-Stat Tool: Reconfigure

cindyhg Tue, 06/25/2019 - 09:44

Now we'll reconfigure and rerun Point-Stat. Start by making a copy of the configuration file we just used:

cp PointStatConfig_tutorial_run1 PointStatConfig_tutorial_run2

This time, we'll use two dictionary entries to specify the forecast field in order to set different thresholds for each vertical level. Point-Stat may be configured to verify as many or as few model variables and vertical levels as you desire. Edit the PointStatConfig_tutorial_run2 file as follows:

- Set:

fcst = {

wind_thresh = [ NA ];

message_type = [ "ADPUPA", "ADPSFC" ];

field = [

{

name = "TMP";

level = [ "Z2" ];

cat_thresh = [ >273, >278, >283, >288 ];

},

{

name = "TMP";

level = [ "P750-850" ];

cat_thresh = [ >278 ];

}

];

}

obs = fcst;

To verify 2-meter temperature and temperature between 750 hPa and 850 hPa against both message types, using the thresholds specified.

- Set:

mask = {

grid = [ "G212" ];

poly = [ "MET_BASE/poly/EAST.poly",

"MET_BASE/poly/WEST.poly" ];

sid = [];

llpnt = [];

}

To compute statistics over the NCEP Grid 212 region and over the Eastern and Western United States, as defined by the polylines specified.

- Set:

interp = {

vld_thresh = 1.0;

type = [

{

method = NEAREST;

width = 1;

},

{

method = DW_MEAN;

width = 5;

}

];

}

To indicate that the forecast values should be interpolated to the observation locations using the nearest neighbor method and by computing a distance-weighted average of the forecast values over the 5 by 5 box surrounding the observation location.

- Set:

output_flag = {

fho = BOTH;

ctc = BOTH;

cts = BOTH;

mctc = NONE;

mcts = NONE;

cnt = BOTH;

sl1l2 = BOTH;

sal1l2 = NONE;

vl1l2 = NONE;

val1l2 = NONE;

pct = NONE;

pstd = NONE;

pjc = NONE;

prc = NONE;

ecnt = NONE;

eclv = BOTH;

mpr = BOTH;

}

To switch the SL1L2 and CTS output to BOTH and generate the optional ASCII output files for them.

- Set:

output_prefix = "run2";

To customize the output file names for this run.

Let's look at our configuration selections and figure out the number of verification tasks Point-Stat will perform:

- 2 fields: TMP/Z2 and TMP/P750-850

- 2 observing message types: ADPUPA and ADPSFC

- 3 masking regions: G212, EAST.poly, and WEST.poly

- 2 interpolations: NEAREST width 1 (nearest-neighbor) and DW_MEAN width 5

Multiplying 2 * 2 * 3 * 2 = 24. So in this example, Point-Stat will accumulate matched forecast/observation pairs into 24 groups. However, some of these groups will result in 0 matched pairs being found. To each non-zero group, the specified threshold(s) will be applied to compute contingency tables.
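The bookkeeping above is a Cartesian product, and a few lines of Python reproduce it. The labels are shorthand for the configuration entries, not strings Point-Stat prints. The threshold tally assumes one contingency table per threshold per task, as described above, and counts tasks before those with zero matched pairs are dropped.

```python
from itertools import product

# Shorthand labels for the entries in PointStatConfig_tutorial_run2.
fields = {"TMP/Z2": 4, "TMP/P750-850": 1}  # field -> number of cat_thresh values
message_types = ["ADPUPA", "ADPSFC"]
masks = ["G212", "EAST.poly", "WEST.poly"]
interps = ["NEAREST width 1", "DW_MEAN width 5"]

tasks = list(product(fields, message_types, masks, interps))
print(len(tasks))  # 24

# Upper bound on contingency tables: one table per threshold for each task.
n_tables = sum(fields[field] for field, *_ in tasks)
print(n_tables)  # 60
```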

Can you diagnose why some of these verification tasks resulted in zero matched pairs? (Hint: Reread the tip two pages back!)

Point-Stat Tool: Rerun

cindyhg Tue, 06/25/2019 - 09:45

Next, run Point-Stat to compare a GRIB forecast to the NetCDF point observation output of the PB2NC tool, as opposed to the much smaller ASCII2NC output we used in the first run. Run the following command line:

point_stat \

/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2007033000/nam.t00z.awip1236.tm00.20070330.grb \

../pb2nc/tutorial_pb_run1.nc \

PointStatConfig_tutorial_run2 \

-outdir . \

-v 2

Point-Stat is now performing the verification tasks we requested in the configuration file. It should take a minute or two to run. You should see several status messages printed to the screen to indicate progress. Note the number of matched pairs found for each verification task, some of which are 0.

Plot-Data-Plane Tool

In this step, we have verified 2-meter temperature. The Plot-Data-Plane tool within MET provides a way to visualize the gridded data fields that MET can read. Run this utility to plot the 2-meter temperature field:

plot_data_plane \

/classroom/wrfhelp/MET/8.0/met-8.0/data/sample_fcst/2007033000/nam.t00z.awip1236.tm00.20070330.grb \

nam.t00z.awip1236.tm00.20070330_TMPZ2.ps \

'name="TMP"; level="Z2";'

Plot-Data-Plane requires an input gridded data file, an output postscript image file name, and a configuration string defining which 2-D field is to be plotted. View the output by running:

View the usage for Plot-Data-Plane by running it with no arguments or using the --help option:

plot_data_plane --help

Now rerun this Plot-Data-Plane command, but with the following changes:

- Set the title to 2-m Temperature.

- Set the plotting range as 250 to 305.

- Use the color table named MET_BASE/colortables/NCL_colortables/wgne15.ctable

Next, we'll take a look at the Point-Stat output we just generated.

See the usage statement for all MET tools using the --help command line option or with no options at all.

Point-Stat Tool: Output

cindyhg Tue, 06/25/2019 - 09:46

The format of the CTC, CTS, and CNT line types is the same as in the first run. However, the numbers will be different because we used a different set of observations for the verification.

Open up the point_stat_run2_360000L_20070331_120000V_cts.txt CTS file, and note the following:

- The first 21 columns, prior to the LINE_TYPE, contain the same data as the previous file.

- The LINE_TYPE column indicates that these are CTS lines.

- The remaining columns contain statistics derived from the threshold contingency table counts. See section 7.3.3 of the MET User's Guide for a thorough description of the output.

- Confidence intervals are given for each of these statistics, computed using one or both of two methods. The columns ending in _NCL (normal confidence lower) and _NCU (normal confidence upper) give lower and upper confidence limits computed using assumptions of normality. The columns ending in _BCL (bootstrap confidence lower) and _BCU (bootstrap confidence upper) give lower and upper confidence limits computed using bootstrapping.
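To see at a glance which statistics carry confidence limits, a small awk sketch can list the header columns that end in _NCL, _NCU, _BCL or _BCU. It assumes the first line of the _cts.txt file is a whitespace-separated header row; the function name ci_columns is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the confidence-limit column names found in the
# header (first line) of a MET *_cts.txt file, one name per line.
ci_columns() {
  awk 'NR == 1 {
         for (i = 1; i <= NF; i++)
           if ($i ~ /_(NCL|NCU|BCL|BCU)$/) print $i
         exit
       }' "$1"
}
```

For example: ci_columns point_stat_run2_360000L_20070331_120000V_cts.txt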

Close that file, open up the point_stat_run2_360000L_20070331_120000V_sl1l2.txt SL1L2 partial sums file, and note the following:

- The first 21 columns, prior to the LINE_TYPE, contain the same data as previously specified.

- The LINE_TYPE column indicates these are SL1L2 partial sums lines.
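The SL1L2 line stores partial sums rather than finished statistics. Following the MET User's Guide definitions, FBAR, OBAR, FOBAR, FFBAR and OOBAR are the means of f, o, f·o, f² and o² over the N matched forecast (f) and observation (o) pairs, and continuous statistics such as the mean error (ME) and mean squared error (MSE) follow directly from them:

```latex
\begin{aligned}
\mathrm{FBAR}  &= \tfrac{1}{N}\textstyle\sum_{i=1}^{N} f_i, \qquad
\mathrm{OBAR}   = \tfrac{1}{N}\textstyle\sum_{i=1}^{N} o_i, \qquad
\mathrm{FOBAR}  = \tfrac{1}{N}\textstyle\sum_{i=1}^{N} f_i\,o_i, \\
\mathrm{FFBAR} &= \tfrac{1}{N}\textstyle\sum_{i=1}^{N} f_i^2, \qquad
\mathrm{OOBAR}  = \tfrac{1}{N}\textstyle\sum_{i=1}^{N} o_i^2, \\
\mathrm{ME}    &= \tfrac{1}{N}\textstyle\sum_{i=1}^{N} (f_i - o_i) = \mathrm{FBAR} - \mathrm{OBAR}, \\
\mathrm{MSE}   &= \tfrac{1}{N}\textstyle\sum_{i=1}^{N} (f_i - o_i)^2 = \mathrm{FFBAR} - 2\,\mathrm{FOBAR} + \mathrm{OOBAR}.
\end{aligned}
```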

Lastly, the point_stat_run2_360000L_20070331_120000V.stat file contains all of the same data we just viewed but in a single file. The Stat-Analysis tool, which we'll use later in this tutorial, searches for the .stat output files by default but can also read the .txt output files.
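Because each line begins with the same 21 header columns described above, the LINE_TYPE value sits in column 22, so the lines of a single type can be pulled out of the .stat file with awk. A minimal sketch, with the hypothetical function name stat_lines:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print only the lines of a MET .stat file whose
# LINE_TYPE (column 22, after the 21 common header columns) equals $2.
stat_lines() {
  awk -v want="$2" '$22 == want' "$1"
}
```

For example: stat_lines point_stat_run2_360000L_20070331_120000V.stat CTS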

Use Case: Grid-to-Obs

cindyhg, Tue, 06/25/2019 - 09:48

METplus Use Case: Grid to Obs Upper Air

The Grid to Obs Upper Air use case utilizes the MET PB2NC and Point-Stat tools.

By default, this use case writes only the partial sums, so we will also modify the METplus "MET"-specific configuration file to add the continuous statistics to the output.

This use case requires a little more setup, which is instructive: it demonstrates the basic structure, flexibility, and setup of METplus configuration.

Optional: Refer to sections 4.1 and 7.3 of the MET Users Guide for a description of the MET tools used in this use case.

Optional: Refer to the METplus 2.0.4 Users Guide for a reference to the METplus variables used in this use case.

Setup

Let's start by defining where to send the output by setting the OUTPUT_BASE configuration variable.

Edit Custom Configuration File

Define a unique directory under output that you will use for this use case.

Edit ${HOME}/METplus/parm/mycustom.conf

Change OUTPUT_BASE to contain a subdirectory specific to the Grid to Obs use case.

... other entries ...

OUTPUT_BASE = {ENV[HOME]}/metplus_tutorial/output/grid_to_obs

Because PB2NC takes about 20 minutes to run on the sample data for this use case, we have pre-generated the NetCDF output, and you will copy it to the OUTPUT_BASE directory.

Run the following commands:

cp -r /classroom/wrfhelp/METplus_Data/grid_to_obs/netcdf/* ${HOME}/metplus_tutorial/output/grid_to_obs/
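The cp command above expects the destination directory to exist already. A slightly more defensive sketch creates it first with mkdir -p and copies the directory contents (including any hidden files) with the src/. form; the function name copy_pregenerated is hypothetical, and the destination must match the OUTPUT_BASE you set in mycustom.conf.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy pre-generated PB2NC NetCDF output into the
# use-case OUTPUT_BASE, creating the destination directory if needed.
copy_pregenerated() {
  local src="$1" dest="$2"
  mkdir -p "$dest" || return 1
  cp -r "$src"/. "$dest"/
}
```

For example: copy_pregenerated /classroom/wrfhelp/METplus_Data/grid_to_obs/netcdf "${HOME}/metplus_tutorial/output/grid_to_obs"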

We will also need to change the METplus PROCESS_LIST configuration variable for this use case to only run PointStat.

Edit ${HOME}/METplus/parm/use_cases/grid_to_obs/examples/upper_air.conf

Make the following changes to the existing PROCESS_LIST line. Be sure to keep the changes under the [config] section identifier in the file.

... other entries ...

#PROCESS_LIST = PB2NC, PointStat

PROCESS_LIST = PointStat
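If you prefer to make this edit from the command line, a GNU sed sketch can comment out the existing line and insert the new one directly below it, so the change stays under the [config] section. It assumes the line reads exactly PROCESS_LIST = PB2NC, PointStat as shown above (no trailing spaces); the function name only_point_stat is hypothetical, and sed -i keeps no backup here, so copy the file first if you want one.

```shell
#!/usr/bin/env bash
# Hypothetical helper (GNU sed): comment out "PROCESS_LIST = PB2NC, PointStat"
# and add "PROCESS_LIST = PointStat" on the line directly below it.
only_point_stat() {
  sed -i 's/^PROCESS_LIST = PB2NC, PointStat$/#&\nPROCESS_LIST = PointStat/' "$1"
}
```

For example: only_point_stat ${HOME}/METplus/parm/use_cases/grid_to_obs/examples/upper_air.conf. Running it twice is harmless, because the commented line no longer matches.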

NOTE: PROCESS_LIST defines the processes that will be run for the use case.

Consider: Instead of changing the PROCESS_LIST in the upper_air.conf file, you could have added it under a [config] section in your mycustom.conf file. HOWEVER, that would then apply to all subsequent calls and use cases, so after running this use case you would have to comment out that change, unless you were running a similar use case and wanted the same PROCESS_LIST.

Lastly, let's add the continuous statistics to the Point-Stat output.

Edit the METplus MET Configuration File

Edit ${HOME}/METplus/parm/use_cases/grid_to_obs/met_config/PointStatConfig_upper_air

You will need to edit the cnt flag and add the vcnt flag, as follows, in the Statistical Output types section.

... other entries ...

cnt = STAT;

vcnt = STAT;

Review: Take a look at the settings in the Upper Air configuration file.

Run METplus: Run Grid to Obs Upper Air use case.

Run the following command:

Review Output files:

The command generates the following statistical output files:

- point_stat_000000L_20170601_000000V.stat

- point_stat_000000L_20170602_000000V.stat

- point_stat_000000L_20170603_000000V.stat

- point_stat_240000L_20170602_000000V.stat

- point_stat_240000L_20170603_000000V.stat

- point_stat_480000L_20170603_000000V.stat
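These file names encode the forecast lead time (the field ending in L, as HHMMSS) and the valid time (the fields ending in V, as YYYYMMDD_HHMMSS); subtracting the lead from the valid time gives the initialization time. The sketch below decodes a name that way. It relies on bash regular expressions and GNU date for the arithmetic, and the function name decode_stat_name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: decode lead, valid and init times from a MET output
# name such as point_stat_240000L_20170602_000000V.stat (requires GNU date).
decode_stat_name() {
  local name re lead vdate vtime hours mins secs lead_s valid_s
  name=$(basename "$1")
  re='([0-9]+)L_([0-9]{8})_([0-9]{6})V'
  [[ $name =~ $re ]] || { echo "unrecognized name: $name" >&2; return 1; }
  lead=${BASH_REMATCH[1]} vdate=${BASH_REMATCH[2]} vtime=${BASH_REMATCH[3]}
  # Lead is HHMMSS; the hour part may have more than two digits for long leads.
  hours=$((10#${lead:0:${#lead}-4}))
  mins=$((10#${lead: -4:2}))
  secs=$((10#${lead: -2}))
  lead_s=$((hours * 3600 + mins * 60 + secs))
  # Convert the valid time (UTC) to seconds since the epoch.
  valid_s=$(date -u -d "${vdate:0:4}-${vdate:4:2}-${vdate:6:2} ${vtime:0:2}:${vtime:2:2}:${vtime:4:2}" +%s)
  printf 'lead=%dh valid=%s_%s init=%s\n' "$hours" "$vdate" "$vtime" \
    "$(date -u -d "@$((valid_s - lead_s))" +%Y%m%d_%H%M%S)"
}
```

For example, decode_stat_name point_stat_480000L_20170603_000000V.stat reports a 48-hour lead from an initialization at 20170601_000000.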

METplus Use Case: Grid to Obs CONUS Surface

cindyhg, Tue, 06/25/2019 - 09:53

The Grid to Obs CONUS Surface use case utilizes the MET PB2NC and Point-Stat tools.

By default, this use case writes only the partial sums, so we will also modify the METplus "MET"-specific configuration file to add the continuous statistics to the output.

This use case requires a little more setup, which is instructive: it demonstrates the basic structure, flexibility, and setup of METplus configuration.

Optional: Refer to sections 4.1 and 7.3 of the MET Users Guide for a description of the MET tools used in this use case.

Optional: Refer to the METplus 2.0.4 Users Guide for a reference to the METplus variables used in this use case.

Setup

Let's start by defining where to send the output by setting the OUTPUT_BASE configuration variable.

Edit Custom Configuration File

Define a unique directory under output that you will use for this use case.

Edit ${HOME}/METplus/parm/mycustom.conf

Change OUTPUT_BASE to contain a subdirectory specific to the Grid to Obs use case.

... other entries ...

OUTPUT_BASE = {ENV[HOME]}/metplus_tutorial/output/grid_to_obs

Because PB2NC takes about 20 minutes to run on the sample data for this use case, we have pre-generated the NetCDF output, and you will copy it to the OUTPUT_BASE directory.

Run the following commands:

Note: If you already ran this command for the previous use case, you do not need to copy the data again.

cp -r /classroom/wrfhelp/METplus_Data/grid_to_obs/netcdf/* ${HOME}/metplus_tutorial/output/grid_to_obs/

We will also need to change the METplus PROCESS_LIST configuration variable for this use case to only run PointStat.

Edit ${HOME}/METplus/parm/use_cases/grid_to_obs/examples/conus_surface.conf

Note: If you use vi to edit this file, you may receive a modeline complaint when opening it; just press Return and ignore it.

Make the following changes to the existing PROCESS_LIST line. Be sure to keep the changes under the [config] section identifier in the file.

... other entries ...

#PROCESS_LIST = PB2NC, PointStat

PROCESS_LIST = PointStat

NOTE: PROCESS_LIST defines the processes that will be run for the use case.

Consider: Instead of changing the PROCESS_LIST in the conus_surface.conf file, you could have added it under a [config] section in your mycustom.conf file. HOWEVER, that would then apply to all subsequent calls and use cases, so after running this use case you would have to comment out that change, unless you were running a similar use case and wanted the same PROCESS_LIST.

Lastly, let's add the continuous statistics to the Point-Stat output.

Edit the METplus MET Configuration File

Edit ${HOME}/METplus/parm/use_cases/grid_to_obs/met_config/PointStatConfig_conus_sfc

You will need to edit the cnt flag and add the vcnt flag, as follows, in the Statistical Output types section.

... other entries ...

cnt = STAT;

vcnt = STAT;
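To confirm the cnt and vcnt edits in both PointStatConfig files before running METplus, grep can print those settings with their line numbers. This is only a convenience check; the function name show_cnt_flags is hypothetical, and the pattern deliberately matches only lines that start with cnt or vcnt (so nbrcnt and similar flags are ignored).

```shell
#!/usr/bin/env bash
# Hypothetical check: show the cnt/vcnt output flags in each given MET config file.
show_cnt_flags() {
  local f
  for f in "$@"; do
    echo "== $f"
    grep -nE '^[[:space:]]*v?cnt[[:space:]]*=' "$f" || echo "   (no cnt/vcnt lines found)"
  done
}
```

For example:
show_cnt_flags ${HOME}/METplus/parm/use_cases/grid_to_obs/met_config/PointStatConfig_upper_air \
               ${HOME}/METplus/parm/use_cases/grid_to_obs/met_config/PointStatConfig_conus_sfc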

Review: Take a look at the settings in the CONUS Surface configuration file.

Run METplus: Run Grid to Obs CONUS Surface use case.

Run the following command:

Review Output files:

The command generates the following statistical output files:

- point_stat_000000L_20170601_000000V.stat

- point_stat_000000L_20170602_000000V.stat

- point_stat_000000L_20170603_000000V.stat

- point_stat_240000L_20170602_000000V.stat

- point_stat_240000L_20170603_000000V.stat

- point_stat_480000L_20170603_000000V.stat

Additional Exercises

cindyhg, Tue, 06/25/2019 - 09:55

End of Practical Session 2

Congratulations! You have completed Session 2!

If you have extra time, you may want to try this additional METplus exercise.

EXERCISE 2.1: Rerun Point-Stat - Rerun Point-Stat to produce additional continuous statistics file types.

Instructions: Modify the METplus MET configuration file for Upper Air to write the Continuous Statistics (cnt) and Vector Continuous Statistics (vcnt) line types both to the .stat file and to their own separate files.

To do this, edit your PointStatConfig_upper_air METplus MET configuration file as shown below.

... other entries ...

cnt = BOTH;

vcnt = BOTH;

Rerun master_metplus

Review the additional output files generated under ...
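With cnt and vcnt set to BOTH, Point-Stat is expected to also write separate files ending in _cnt.txt and _vcnt.txt, following the same naming pattern as the _cts.txt and _sl1l2.txt files earlier in this session. A find sketch to list them, assuming they are written somewhere beneath the directory you pass in (for example your OUTPUT_BASE); the function name list_cnt_files is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list Point-Stat CNT and VCNT text files under a directory.
list_cnt_files() {
  find "$1" -type f \( -name 'point_stat_*_cnt.txt' -o -name 'point_stat_*_vcnt.txt' \) | sort
}
```

For example: list_cnt_files ${HOME}/metplus_tutorial/output/grid_to_obs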