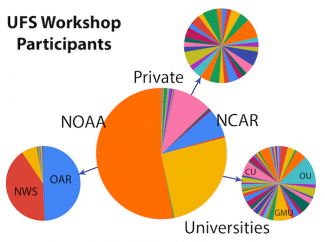

The community aspect of NOAA’s Unified Forecast System (UFS) is off to a strong start with the release of the UFS Medium-Range Weather Application v1.0 on 11 March 2020. Planning and preparing this release was truly a community effort, bringing together a multi-institutional team of scientists and software engineers from NOAA’s Environmental Modeling Center (EMC), NOAA research laboratories (Global Systems Laboratory [GSL], National Severe Storms Laboratory [NSSL], Physical Sciences Laboratory [PSL], and Geophysical Fluid Dynamics Laboratory [GFDL]), Cooperative Institutes (Cooperative Institute for Research in Environmental Sciences [CIRES] and Cooperative Institute for Research in the Atmosphere [CIRA]), the Developmental Testbed Center (DTC), the National Center for Atmospheric Research (NCAR), and George Mason University (GMU). This Release Team was assembled in September 2019 and charged with developing a streamlined project plan for the first public release of the UFS, then overseeing and executing that plan. The aim was to build a well-documented UFS modeling system that the community can download, set up, and run in multiple computing environments.

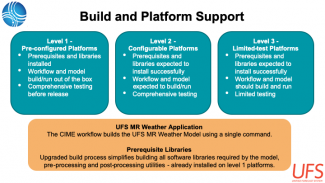

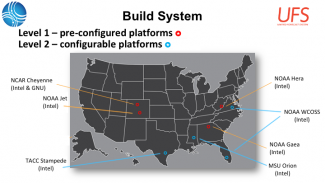

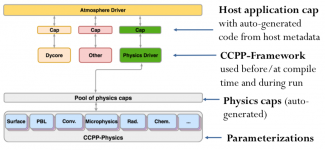

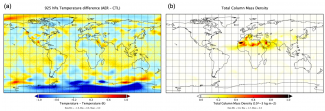

The UFS is undergoing rapid development on multiple fronts to achieve its vision of serving applications that span local to global domains and predictive time scales from sub-hourly analyses to seasonal predictions. An important first step was therefore to define the scope of this initial release. The team quickly converged on a plan focused on global configurations at four different resolutions with two supported physics suites: the operational GFSv15 suite and an experimental physics suite under development for GFSv16. To give the community some flexibility in choosing which forecast cycles to run, the team decided it was important to include the capability to initialize the model from more widely available GRIB2 output. The team also prioritized making this complex software system portable, testing it on multiple platforms, providing a robust, user-friendly workflow, assembling documentation, and establishing a support mechanism.