Ensembles are useful forecast tools because they account for uncertainties in initial conditions, lateral boundary conditions, and/or model physics, and because they provide probabilistic information to users. However, many ensembles suffer from under-dispersion, sub-optimal reliability, and systematic biases. Machine learning (ML) can help remedy these shortcomings by post-processing raw ensemble output. Conceptually, an ML algorithm identifies linear and nonlinear patterns in historical numerical weather prediction (NWP) data during training and then uses those patterns to predict future events. My work seeks to answer questions about the operational implementation of ML for post-processing ensemble-based probabilistic quantitative precipitation forecasts (PQPFs): how ML-based post-processing affects different types of ensembles, how it compares with other post-processing techniques, how it performs at various precipitation thresholds, and how it behaves with different amounts of training data.
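To make the train-then-predict idea concrete, here is a minimal sketch using scikit-learn's `RandomForestClassifier` (a random forest, the algorithm family used in this work) on entirely synthetic data. The predictors (ensemble mean, ensemble spread, and fraction of members exceeding 1 inch), the gamma-distributed member forecasts, and the built-in wet bias are illustrative assumptions, not the author's actual feature set, data, or settings.

```python
# Minimal sketch with synthetic data; not the author's configuration.
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)
THRESHOLD = 1.0  # 24-h precipitation threshold in inches

def make_cases(n_points, n_members=8):
    """Return hypothetical predictors X and observed exceedance labels y."""
    # Each point gets its own "regime" scale so some cases are wet, some dry.
    scale = rng.uniform(0.1, 1.5, size=(n_points, 1))
    members = rng.gamma(shape=2.0, scale=scale, size=(n_points, n_members))
    mean = members.mean(axis=1)
    spread = members.std(axis=1)
    frac_exceed = (members >= THRESHOLD).mean(axis=1)
    X = np.column_stack([mean, spread, frac_exceed])
    # Synthetic "truth": the ensemble is systematically too wet (x0.8) plus noise.
    obs = 0.8 * mean + rng.normal(0.0, 0.3, size=n_points)
    y = (obs >= THRESHOLD).astype(int)
    return X, y

# Training: learn predictor-outcome relationships from "historical" cases.
X_train, y_train = make_cases(5000)
rf = RandomForestClassifier(n_estimators=200, min_samples_leaf=20, random_state=0)
rf.fit(X_train, y_train)

# Prediction: apply the trained forest to new, unseen cases.
X_new, y_new = make_cases(1000)
pos = list(rf.classes_).index(1)  # column holding P(exceedance)
pqpf = rf.predict_proba(X_new)[:, pos]
print("first five probabilities:", np.round(pqpf[:5], 2))
```

Because `predict_proba` averages the class frequencies found in each tree's leaves, the output is a continuous probability rather than the 1/8 increments available from a raw 8-member ensemble.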

During the first part of my visit, I used a random forest (RF) algorithm to create 24-h PQPFs from two multi-physics, multi-model ensembles: the 8-member convection-allowing High-Resolution Ensemble Forecast System Version 2 (HREFv2) and the 26-member convection-parameterizing Short-Range Ensemble Forecast System (SREF). RF-based PQPFs from each ensemble are compared against raw and spatially smoothed ensemble PQPFs over a 496-day dataset spanning April 2017 to November 2018.
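For reference, one common way to form the two baselines is sketched below: the raw PQPF as the fraction of equally weighted members meeting a threshold at each grid point, and a smoothed PQPF obtained by applying a Gaussian filter to that field. The kernel width, boundary handling, and synthetic gamma-distributed QPF are assumptions for illustration; the smoothing used for the comparisons in this work may differ.

```python
# Sketch of two baseline PQPFs on a gridded ensemble (synthetic data).
import numpy as np
from scipy.ndimage import gaussian_filter

def raw_pqpf(members, threshold):
    """Fraction of members at or above `threshold` at each grid point.

    members: array of shape (n_members, ny, nx) holding 24-h QPF.
    """
    return (members >= threshold).mean(axis=0)

def smoothed_pqpf(members, threshold, sigma):
    """Raw PQPF smoothed with an isotropic Gaussian kernel.

    sigma is in grid points (a hypothetical tuning choice); mode="nearest"
    avoids draining probability out through the domain edges.
    """
    return gaussian_filter(raw_pqpf(members, threshold), sigma=sigma, mode="nearest")

rng = np.random.default_rng(42)
members = rng.gamma(shape=0.5, scale=1.0, size=(8, 60, 80))  # 8 members, 60x80 grid
raw = raw_pqpf(members, threshold=1.0)
smooth = smoothed_pqpf(members, threshold=1.0, sigma=3.0)
print(raw.shape, smooth.shape)
print("distinct raw values:", np.unique(raw))
```

An 8-member raw PQPF can take only nine values (0, 1/8, ..., 1). Smoothing yields a continuous field, but it also blurs sharp probability gradients.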

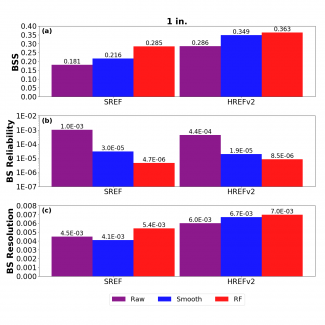

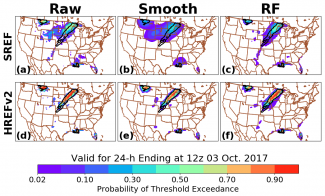

Preliminary results suggest that the RF-based PQPFs are more accurate than the raw and smoothed ensemble PQPFs (Fig. 1). An example set of forecasts for the 1-inch threshold is shown in Fig. 2. Notably, the RF PQPFs are nearly perfectly reliable without the loss of resolution that sometimes accompanies spatial smoothing (e.g., Fig. 2b). The RF technique performs best for the SREF, presumably because the SREF has more pronounced systematic biases than the HREFv2. It also performs best at lower precipitation thresholds, where more observations exceed the threshold and the RF therefore has more positive training examples to learn from.
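Reliability and resolution are often quantified with the Murphy decomposition of the Brier score, `BS = REL - RES + UNC`, where a smaller REL indicates better reliability and a larger RES indicates better resolution. The sketch below computes these terms with NumPy for binned forecast probabilities. The bin count and synthetic data are assumptions, and this is a generic verification sketch rather than the exact procedure behind Fig. 1 or Fig. 2.

```python
import numpy as np

def brier_decomposition(prob, obs, n_bins=10):
    """Return (BS, REL, RES, UNC) for probability forecasts `prob` of binary
    outcomes `obs`. REL - RES + UNC equals BS exactly only when every forecast
    within a bin has the same value; otherwise it is an approximation."""
    prob = np.asarray(prob, dtype=float).ravel()
    obs = np.asarray(obs, dtype=float).ravel()
    n = prob.size
    bs = np.mean((prob - obs) ** 2)
    base_rate = obs.mean()
    # Bin index per forecast; clip so prob == 1.0 lands in the top bin.
    idx = np.clip((prob * n_bins).astype(int), 0, n_bins - 1)
    rel = res = 0.0
    for k in range(n_bins):
        in_bin = idx == k
        n_k = in_bin.sum()
        if n_k == 0:
            continue
        f_k = prob[in_bin].mean()  # mean forecast probability in bin k
        o_k = obs[in_bin].mean()   # observed relative frequency in bin k
        rel += n_k * (f_k - o_k) ** 2
        res += n_k * (o_k - base_rate) ** 2
    unc = base_rate * (1.0 - base_rate)
    return bs, rel / n, res / n, unc

# Example: 8-member raw probabilities (multiples of 1/8) vs synthetic outcomes.
rng = np.random.default_rng(7)
true_p = rng.uniform(0.0, 1.0, size=20000)
members = rng.uniform(size=(8, 20000)) < true_p
prob = members.mean(axis=0)
obs = (rng.uniform(size=20000) < true_p).astype(int)
bs, rel, res, unc = brier_decomposition(prob, obs)
print(f"BS={bs:.4f} REL={rel:.4f} RES={res:.4f} UNC={unc:.4f}")
```

Plotting each bin's observed frequency `o_k` against its mean forecast `f_k` gives the reliability diagram; points on the diagonal correspond to REL near zero.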

Once trained, an RF is computationally inexpensive to run in real time. After the input data have been preprocessed, a forecast can be generated in less than a minute on a single processor; the full workflow, including preprocessing, takes about 30 minutes. Real-time RF PQPFs are currently produced for the 00Z HREFv2 initialization and can be viewed at https://www.spc.noaa.gov/exper/href/ under the precipitation tab.
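The split between expensive training and cheap inference can be sketched as follows: a forest is fit once offline and saved with `joblib`, and each real-time cycle only loads it and calls `predict_proba`. The synthetic predictors, file name, and grid size are hypothetical, the printed timing depends on hardware, and the NWP preprocessing that dominates the roughly 30-minute workflow is not represented.

```python
# Sketch: train once offline, then load and apply in real time (synthetic data).
import tempfile
import time
from pathlib import Path

import joblib
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(1)

# Offline step (expensive, done once): fit on historical cases and save.
X_hist = rng.normal(size=(10000, 3))  # hypothetical predictors
y_hist = (X_hist[:, 0] + 0.5 * rng.normal(size=10000) > 1.0).astype(int)
rf = RandomForestClassifier(n_estimators=100, min_samples_leaf=20, random_state=0)
rf.fit(X_hist, y_hist)
model_path = Path(tempfile.mkdtemp()) / "rf_pqpf.joblib"  # hypothetical file name
joblib.dump(rf, model_path)

def realtime_pqpf(path, X_today):
    """Load the pre-trained forest and return exceedance probabilities."""
    model = joblib.load(path)
    pos = list(model.classes_).index(1)
    return model.predict_proba(X_today)[:, pos]

# Real-time step: only inference runs here (n_jobs defaults to a single core).
X_today = rng.normal(size=(50000, 3))  # e.g., one row per grid point (assumed)
start = time.perf_counter()
probs = realtime_pqpf(model_path, X_today)
print(f"{probs.size} probabilities in {time.perf_counter() - start:.2f} s")
```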

Future work will add temporal resolution to the ML-based forecasts. It will also compare the benefits of ML-based post-processing for two kinds of ensembles: formally designed ensembles, whose members use the same physical parameterizations and produce equally likely solutions (e.g., the NCAR ensemble), and informally designed ensembles, whose members use different physical parameterizations and produce unequally likely solutions (e.g., the Storm Scale Ensemble of Opportunity). I am grateful to the DTC Visitor Program for supporting this work.